Requirements Engineering in the Year 00: A Research Perspective

Axel van Lamsweerde
Département d’Ingénierie Informatique
Université catholique de Louvain
B-1348 Louvain-la-Neuve (Belgium)
avl@info.ucl.ac.be

ABSTRACT

Requirements engineering (RE) is concerned with the identification of the goals to be achieved by the envisioned system, the operationalization of such goals into services and constraints, and the assignment of responsibilities for the resulting requirements to agents such as humans, devices, and software. The processes involved in RE include domain analysis, elicitation, specification, assessment, negotiation, documentation, and evolution. Getting high-quality requirements is difficult and critical. Recent surveys have confirmed the growing recognition of RE as an area of utmost importance in software engineering research and practice.

The paper presents a brief history of the main concepts and techniques developed to date to support the RE task, with a special focus on modeling as a common denominator to all RE processes. The initial description of a complex safety-critical system is used to illustrate a number of current research trends in RE-specific areas such as goal-oriented requirements elaboration, conflict management, and the handling of abnormal agent behaviors. Opportunities for goal-based architecture derivation are also discussed together with research directions to let the field move towards more disciplined habits.

1. INTRODUCTION

Software requirements have been repeatedly recognized during the past 25 years to be a real problem. In their early empirical study, Bell and Thayer observed that inadequate, inconsistent, incomplete, or ambiguous requirements are numerous and have a critical impact on the quality of the resulting software [Bel76]. Noting this for different kinds of projects, they concluded that “the requirements for a system do not arise naturally; instead, they need to be engineered and have continuing review and revision”. Boehm estimated that the late correction of requirements errors could cost up to 200 times as much as correction during such requirements engineering [Boe81]. In his classic paper on the essence and accidents of software engineering, Brooks stated that “the hardest single part of building a software system is deciding precisely what to build... Therefore, the most important function that the software builder performs for the client is the iterative extraction and refinement of the product requirements” [Bro87]. In her study of software errors in NASA’s Voyager and Galileo programs, Lutz reported that the primary cause of safety-related faults was errors in functional and interface requirements [Lut93].
Recent studies have confirmed the requirements problem on a much larger scale. A survey over 8000 projects undertaken by 350 US companies revealed that one third of the projects were never completed and one half succeeded only partially, that is, with partial functionalities, major cost overruns, and significant delays [Sta95]. When asked about the causes of such failure, executive managers identified poor requirements as the major source of problems (about half of the responses) - more specifically, the lack of user involvement (13%), requirements incompleteness (12%), changing requirements (11%), unrealistic expectations (6%), and unclear objectives (5%). On the European side, a recent survey over 3800 organizations in 17 countries similarly concluded that most of the perceived software problems are in the area of requirements specification (>50%) and requirements management (50%) [ESI96].

Improving the quality of requirements is thus crucial. But it is a difficult objective to achieve. To understand the reason one should first define what requirements engineering is really about.

The oldest definition already had the main ingredients. In their seminal paper, Ross and Schoman stated that “requirements definition is a careful assessment of the needs that a system is to fulfill. It must say why a system is needed, based on current or foreseen conditions, which may be internal operations or an external market. It must say what system features will serve and satisfy this context. And it must say how the system is to be constructed” [Ros77b]. In other words, requirements engineering must address the contextual goals why a software is needed, the functionalities the software has to accomplish to achieve those goals, and the constraints restricting how the software accomplishing those functions is to be designed and implemented. Such goals, functions and constraints have to be mapped to precise specifications of software behavior; their evolution over time and across software families has to be coped with as well
[Zav97b]. This definition suggests why the process of engineering requirements is so complex.

• The scope is fairly broad as it ranges from a world of human organizations or physical laws to a technical artifact that must be integrated in it; from high-level objectives to operational prescriptions; and from informal to formal. The target system is not just a piece of software, but also comprises the environment that will surround it; the latter is made of humans, devices, and/or other software. The whole system has to be considered under many facets, e.g., socio-economic, physical, technical, operational, evolutionary, and so forth.
• There are multiple concerns to be addressed beside functional ones - e.g., safety, security, usability, flexibility, performance, robustness, interoperability, cost, maintainability, and so on. These non-functional concerns are often conflicting.
• There are multiple parties involved in the requirements engineering process, each having different background, skills, knowledge, concerns, perceptions, and expression means - namely, customers, commissioners, users, domain experts, requirements engineers, software developers, or system maintainers. Most often those parties have conflicting viewpoints.
• Requirement specifications may suffer a great variety of deficiencies [Mey85]. Some of them are errors that may have disastrous effects on the subsequent development steps and on the quality of the resulting software product - e.g., inadequacies with respect to the real needs, incompletenesses, contradictions, and ambiguities; some others are flaws that may yield undesired consequences (such as waste of time or generation of new errors) - e.g., noises, forward references, overspecifications, or wishful thinking.
• Requirements engineering covers multiple intertwined activities.
  – Domain analysis: the existing system in which the software should be built is studied. The relevant stakeholders are identified and interviewed. Problems and deficiencies in the existing system are identified; opportunities are investigated; general objectives on the target system are identified therefrom.
  – Elicitation: alternative models for the target system are explored to meet such objectives; requirements and assumptions on components of such models are identified, possibly with the help of hypothetical interaction scenarios. Alternative models generally define different boundaries between the software-to-be and its environment.
  – Negotiation and agreement: the alternative requirements/assumptions are evaluated; risks are analyzed; "best" tradeoffs that receive agreement from all parties are selected.
  – Specification: the requirements and assumptions are formulated in a precise way.
  – Specification analysis: the specifications are checked for deficiencies (such as inadequacy, incompleteness or inconsistency) and for feasibility (in terms of resources required, development costs, and so forth).
  – Documentation: the various decisions made during the process are documented together with their underlying rationale and assumptions.
  – Evolution: the requirements are modified to accommodate corrections, environmental changes, or new objectives.

(Invited paper for ICSE’2000. To appear in Proc. 22nd International Conference on Software Engineering, Limerick, June 2000, ACM Press.)

Given such complexity of the requirements engineering process, rigorous techniques are needed to provide effective support. The objective of this paper is to provide: a brief history of 25 years of research efforts along that way; a concrete illustration of what kind of techniques are available today; and directions to be explored for requirements engineering to become a mature discipline.

The presentation will inevitably be biased by my own work and background. Although the area is inherently interdisciplinary, I will deliberately assume a computing science viewpoint here and leave the sociological and psychological dimensions aside (even though they are important). In particular, I will not cover techniques for ethnographic observation of work
environments, interviewing, negotiation, and so forth. The interested reader may refer to [Gog93, Gog94] for a good account of those dimensions. A comprehensive, up-to-date survey on the intersecting area of information modeling can be found in [Myl98].

2. THE FIRST 25 YEARS: A FEW RESEARCH MILESTONES

Requirements engineering addresses a wide diversity of domains (e.g., banking, transportation, manufacturing), tasks (e.g., administrative support, decision support, process control) and environments (e.g., human organizations, physical phenomena). A specific domain/task/environment may require some specific focus and dedicated techniques. This is in particular the case for reactive systems as we will see after reviewing the main stream of research.

Modeling appears to be a core process in requirements engineering. The existing system has to be modelled in some way or another; the alternative hypothetical systems have to be modelled as well. Such models serve as a basic common interface to the various activities above. On the one hand, they result from domain analysis, elicitation, specification analysis, and negotiation. On the other hand, they guide further domain analysis, elicitation, specification analysis, and negotiation. Models also provide the basis for documentation and evolution. It is therefore not surprising that most of the research to date has been devoted to techniques for modeling and specification.

The basic questions that have been addressed over the years are:
• what aspects to model in the why-what-how range,
• how to model such aspects,
• how to define the model precisely,
• how to reason about the model.

The answer to the first question determines the ontology of conceptual units in terms of which models will be built - e.g., data, operations, events, goals, agents, and so forth. The answer to the second question determines the structuring relationships in terms of which such units will be composed and linked together - e.g., input/output, trigger, generalization, refinement, responsibility
assignment, and so forth. The answer to the third question determines the informal, semi-formal, or formal specification technique used to define the required properties of model components precisely. The answer to the fourth question determines the kind of reasoning technique available for the purpose of elicitation, specification, and analysis.

The early days

The seminal paper by Ross and Schoman opened the field [Ros77b]. Not only did this paper comprehensively explain the scope of requirements engineering; it also suggested goals, viewpoints, data, operations, agents, and resources as potential elements of an ontology for RE. The companion paper introduced SADT as a specific modeling technique [Ros77a]. This technique was a precursor in many respects. It supported multiple models linked through consistency rules - a model for data, in which data are defined by producing/consuming operations; a model for operations, in which operations are defined by input/output data; and a data/operation duality principle. The technique was ontologically richer than many techniques developed afterwards. In addition to data and operations, it supported some rudimentary representation of events, triggering operations, and agents responsible for them. The technique also supported the stepwise refinement of global models into more detailed ones - an essential feature for complex models. SADT was a semi-formal technique in that it could only support the formalization of the declaration part of the system under consideration - that is, what data and operations are to be found and how they relate to each other; the requirements on the data/operations themselves had to be asserted in natural language. The semi-formal language, however, was graphical - an essential feature for model communicability.

Shortly after, Bubenko introduced a modeling technique for capturing entities and events. Formal assertions could be written to express requirements about them, in particular, temporal constraints [Bub80]. At that time it was
already recognized that such entities and events had to take part in the real world surrounding the software-to-be [Jac78].

Other semi-formal techniques were developed in the late seventies, notably, entity-relationship diagrams for the modeling of data [Che76], structured analysis for the stepwise modeling of operations [DeM78], and state transition diagrams for the modeling of user interaction [Was79]. The popularity of those techniques came from their simplicity and dedication to one specific concern; the price to pay was their fairly limited scope and expressiveness, due to poor underlying ontologies and limited structuring facilities. Moreover they were rather vaguely defined. People at that time started advocating the benefits of precise and formal specifications, notably, for checking specification adequacy through prototyping [Bal82].

RML brought the SADT line of research significantly further by introducing rich structuring mechanisms such as generalization, aggregation and classification [Gre82]. In that sense it was a precursor to object-oriented analysis techniques. Those structuring mechanisms were applicable to three kinds of conceptual units: entities, operations, and constraints. The latter were expressed in a formal assertion language providing, in particular, built-in constructs for temporal referencing. That was the time where progress in database modeling [Smi77], knowledge representation [Bro84, Bra85], and formal state-based specification [Abr80] started penetrating our field. RML was also probably the first requirements modeling language to have a formal semantics, defined in terms of mappings to first-order predicate logic [Gre86].

Introducing agents

A next step was made by realizing that the software-to-be and its environment are both made of active components.
Such components may restrict their behavior to ensure the constraints they are assigned to. Feather’s seminal paper introduced a simple formal framework for modeling agents and their interfaces, and for reasoning about individual choice of behavior and responsibility for constraints [Fea87]. Agent-based reasoning is central to requirements engineering since the assignment of responsibilities for goals and constraints among agents in the software-to-be and in the environment is a main outcome of the RE process. Once such responsibilities are assigned the agents have contractual obligations they need to fulfill [Fin87, Jon93, Ken93]. Agents on both sides of the software-environment boundary interact through interfaces that may be visualized through context diagrams [War85].

Goal-based reasoning

The research efforts so far were in the what-how range of requirements engineering. The requirements on data and operations were just there; one could not capture why they were there and whether they were sufficient for achieving the higher-level objectives that arise naturally in any requirements engineering process [Hic74, Mun81, Ber91, Rub92].
Yue was probably the first to argue that the integration of explicit goal representations in requirements models provides a criterion for requirements completeness - the requirements are complete if they are sufficient to establish the goal they are refining [Yue87]. Broadly speaking, a goal corresponds to an objective the system should achieve through cooperation of agents in the software-to-be and in the environment.

Two complementary frameworks arose for integrating goals and goal refinements in requirements models: a formal framework and a qualitative one. In the formal framework [Dar91], goal refinements are captured through AND/OR graph structures borrowed from problem reduction techniques in artificial intelligence [Nil71]. AND-refinement links relate a goal to a set of subgoals (called refinement); this means that satisfying all subgoals in the refinement is a sufficient condition for satisfying the goal. OR-refinement links relate a goal to an alternative set of refinements; this means that satisfying one of the refinements is a sufficient condition for satisfying the goal. In this framework, a conflict link between goals is introduced when the satisfaction of one of them may preclude the satisfaction of the others.
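The AND/OR refinement semantics just described can be illustrated by a minimal sketch. It is not taken from [Dar91]; the `Goal` class, the `is_satisfied` function, and the goal names are hypothetical and serve only to show how "all subgoals of one refinement" and "one of the alternative refinements" combine into a sufficient condition.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """A goal with zero or more alternative AND-refinements."""
    name: str
    # Each inner list is one AND-refinement (a set of subgoals that together
    # suffice for this goal). Several inner lists form an OR-refinement.
    refinements: list[list["Goal"]] = field(default_factory=list)


def is_satisfied(goal: Goal, satisfied_leaves: set[str]) -> bool:
    """Return True if the goal can be shown satisfied from the given leaf goals.

    Refinement links only express sufficient conditions, so False means
    "not established by this graph", not "violated".
    """
    if not goal.refinements:
        # Leaf goal: satisfied only if explicitly listed.
        return goal.name in satisfied_leaves
    # OR over the alternative refinements, AND over the subgoals of each one.
    return any(
        all(is_satisfied(sub, satisfied_leaves) for sub in refinement)
        for refinement in goal.refinements
    )


# Hypothetical goal graph: two alternative refinements of the same goal.
detect = Goal("ObstacleDetected")
brake = Goal("BrakeApplied")
manual = Goal("DriverStopsTrain")
stop = Goal("TrainStoppedBeforeObstacle", [[detect, brake], [manual]])

print(is_satisfied(stop, {"ObstacleDetected", "BrakeApplied"}))  # True
print(is_satisfied(stop, {"ObstacleDetected"}))                  # False
print(is_satisfied(stop, {"DriverStopsTrain"}))                  # True
```

Under this reading, Yue's completeness criterion amounts to checking that the leaf requirements chosen for a goal make `is_satisfied` hold for that goal.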
Operationalization links are also introduced to relate goals to requirements on operations and objects. In the qualitative framework [Myl92], weaker versions of such link types are introduced to relate “soft” goals [Myl92]. The idea is that such goals can rarely be said to be satisfied in a clear-cut sense. Instead of goal satisfaction, goal satisficing is introduced to express that lower-level goals or requirements are expected to achieve the goal within acceptable limits, rather than absolutely. A subgoal is then said to contribute partially to the goal, regardless of other subgoals; it may contribute positively or negatively. If a goal is AND-decomposed into subgoals and all subgoals are satisficed, then the goal is satisficeable; but if a subgoal is denied then the goal is deniable. If a goal contributes negatively to another goal and the former is satisficed, then the latter is deniable.

The formal framework gave rise to the KAOS methodology for eliciting, specifying, and analyzing goals, requirements, scenarios, and responsibility assignments [Dar93]. An optional formal assertion layer was introduced to support various forms of formal reasoning. Goals and requirements on objects are formalized in a real-time temporal logic [Man92, Koy92]; one can thereby prove that a goal refinement is correct and complete, or complete such a refinement [Dar96]. One can also formally detect conflicts among goals [Lam98b] or generate high-level exceptions that may prevent their achievement [Lam98a]. Requirements on operations are formalized by pre-, post-, and trigger conditions; one can thereby establish that an operational requirement “implements” higher-level goals [Dar93], or infer such goals from scenarios [Lam98c].

The qualitative framework gave rise to the NFR methodology for capturing and evaluating alternative goal decompositions. One may see it as a cheap alternative to the formal framework, for limited forms of goal-based reasoning, and as a complementary framework for high-level goals that cannot be
formalized. The labelling procedure in [Myl92] is a typical example of qualitative reasoning on goals specified by names, parameters, and degrees of satisficing/denial by child goals. This procedure determines the degree to which a goal is satisficed/denied by lower-level requirements, by propagating such information along positive/negative support links in the goal graph.

The strength of those goal-based frameworks is that they do not only cover functional goals but also non-functional ones; the latter give rise to a wide range of non-functional requirements. For example, [Nix93] showed how the NFR framework could be used to qualitatively reason about performance requirements during the RE and design phases. Informal analysis techniques based on similar refinement trees were also proposed for specific types of non-functional requirements, such as fault trees [Lev95] and threat trees [Amo94] for exploring safety and security requirements, respectively.

Goal and agent models can be integrated through specific links. In KAOS, agents may be assigned to goals through AND/OR responsibility links; this allows alternative boundaries to be investigated between the software-to-be and its environment. A responsibility link between an agent and a goal means that the agent can commit to perform its operations under restricted pre-, post-, and trigger conditions that ensure the goal [Dar93]. Agent dependency links were defined in [YuM94, Yu97] to model situations where an agent depends on another for a goal to be achieved, a task to be accomplished, or a resource to become available. For each kind of dependency an operator is defined; operators can be combined to define plans that agents may use to achieve goals. The purpose of this modeling is to support the verification of properties such as the viability of an agent's plan or the fulfilment of a commitment between agents.
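The qualitative propagation rules stated earlier for soft goals (an AND-decomposed goal is satisficeable when all its subgoals are satisficed and deniable as soon as one is denied; a satisficed goal makes any goal it contributes to negatively deniable) can be sketched as follows. This is a deliberately simplified illustration, not the full labelling procedure of [Myl92]; the `Label` enumeration and both function names are assumptions introduced here.

```python
from enum import Enum


class Label(Enum):
    SATISFICED = "satisficed"
    DENIED = "denied"
    UNDETERMINED = "undetermined"


def and_label(children: list[Label]) -> Label:
    """Label of an AND-decomposed goal, derived from its subgoal labels."""
    if any(child is Label.DENIED for child in children):
        # One denied subgoal is enough to make the parent deniable.
        return Label.DENIED
    if children and all(child is Label.SATISFICED for child in children):
        # All subgoals satisficed: the parent is satisficeable.
        return Label.SATISFICED
    return Label.UNDETERMINED


def negative_contribution(source: Label) -> Label:
    """Label induced on a goal by a goal that contributes negatively to it."""
    if source is Label.SATISFICED:
        return Label.DENIED
    # The stated rules say nothing for the other cases.
    return Label.UNDETERMINED


print(and_label([Label.SATISFICED, Label.SATISFICED]))  # Label.SATISFICED
print(and_label([Label.SATISFICED, Label.DENIED]))      # Label.DENIED
print(negative_contribution(Label.SATISFICED))          # Label.DENIED
```

A complete procedure would additionally weigh partial positive and negative contributions and resolve conflicting labels, which this sketch leaves undetermined.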
Viewpoints, facets, and conflicts

Beside the formal and qualitative reasoning techniques above, other work on conflict management has emphasized the need for handling conflicts at the goal level. A procedure was suggested in [Rob89] for identifying conflicts at the requirements level and characterizing them as differences at goal level; such differences are resolved (e.g., through negotiation) and then down propagated to the requirements level. In [Boe95], an iterative process model was proposed in which (a) all stakeholders involved are identified together with their goals (called win conditions); (b) conflicts between these goals are captured together with their associated risks and uncertainties; and (c) goals are reconciled through negotiation to reach a mutually agreed set of goals, constraints, and alternatives for the next iteration.

Conflicts among requirements often arise from multiple stakeholders' viewpoints [Eas94]. For the sake of adequacy and completeness during requirements elicitation it is essential that the viewpoints of all parties involved be captured and eventually integrated in a consistent way. Two kinds of approaches have emerged. They both provide constructs for modeling and specifying requirements from different viewpoints in different notations. In the centralized approach, the viewpoints are translated into some logic-based “assembly” language for global analysis; viewpoint integration then amounts to some form of conjunction [Nis89, Zav93]. In the distributed approach, viewpoints have specific consistency rules associated with them; consistency checking is made by evaluating the corresponding rules on pairs of viewpoints [Nus94]. Conflicts need not necessarily be resolved as they arise; different viewpoints may yield further relevant information during elicitation even though they are conflicting in some respect. Preliminary attempts have been made to define a paraconsistent logical framework allowing useful deductions to be made in spite of inconsistency [Hun98].
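To make the distributed approach more concrete, the following sketch evaluates a consistency rule on every ordered pair of viewpoints and collects the inconsistencies in a report instead of rejecting the specification, in line with the remark that conflicts need not be resolved as they arise. The `Viewpoint` structure, the rule `undeclared_events`, and the example data are hypothetical; they are not the viewpoint framework of [Nus94].

```python
from dataclasses import dataclass, field
from itertools import permutations
from typing import Callable


@dataclass
class Viewpoint:
    """A deliberately tiny viewpoint: events sent to and received from others."""
    name: str
    sends: dict[str, set[str]] = field(default_factory=dict)  # target -> events
    receives: set[str] = field(default_factory=set)


Rule = Callable[[Viewpoint, Viewpoint], list[str]]


def undeclared_events(src: Viewpoint, dst: Viewpoint) -> list[str]:
    """Rule: every event src sends to dst must be declared as received by dst."""
    missing = src.sends.get(dst.name, set()) - dst.receives
    return [
        f"{src.name} sends '{event}' but {dst.name} does not declare it"
        for event in sorted(missing)
    ]


def check_pairs(viewpoints: list[Viewpoint], rules: list[Rule]) -> list[str]:
    """Evaluate every rule on every ordered pair of distinct viewpoints."""
    report: list[str] = []
    for src, dst in permutations(viewpoints, 2):
        for rule in rules:
            report.extend(rule(src, dst))
    return report


operator = Viewpoint("Operator", sends={"Controller": {"start", "stop"}})
controller = Viewpoint("Controller", receives={"stop"})

for line in check_pairs([operator, controller], [undeclared_events]):
    print(line)  # Operator sends 'start' but Controller does not declare it
```

Because the checker only reports, elicitation can continue on both viewpoints while the inconsistency remains recorded.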
Multiparadigm specification is especially appealing for requirements specification. In view of the broad scope of the RE process and the multiplicity of system facets, no single language will ever serve all purposes. Multiparadigm frameworks have been proposed to combine multiple languages in a semantically meaningful way so that different facets can be captured by languages that fit them best. OMT’s combination of entity-relationship, dataflow, and state transition diagrams was among the first attempts to achieve this at a semi-formal level [Rum91]. The popularity of this modeling technique and other similar ones led to the UML standardization effort [Rum99]. The viewpoint construct in [Nus94] provides a generic mechanism for achieving such combinations. Attempts to integrate semi-formal and formal languages include [Zav96], which combines state-based specifications [Pot96] and finite state machine specifications; and [Dar93], which combines semantic nets [Qui68] for navigating through multiple models at surface level, temporal logic for the specification of the goal and object models [Man92, Koy92], and state-based specification [Pot96] for the operation model.

Scenario-based elicitation and validation

Even though goal-based reasoning is highly appropriate for requirements engineering, goals are sometimes hard to elicit.
Stakeholders may have difficulties expressing them in abstracto. Operational scenarios of using the hypothetical system are sometimes easier to get in the first place than some goals that can be made explicit only after deeper understanding of the system has been gained. This fact has been recognized in cognitive studies on human problem solving [Ben93]. Typically, a scenario is a temporal sequence of interaction events between the software-to-be and its environment in the restricted context of achieving some implicit purpose(s). A recent study on a broader scale has confirmed scenarios as important artefacts used for a variety of purposes, in particular in cases when abstract modeling fails [Wei98]. Much research effort has therefore been recently put in this direction [Jar98]. Scenario-based techniques have been proposed for elicitation and for validation - e.g., to elicit requirements in hypothetical situations [Pot94]; to help identify exceptional cases [Pot95]; to populate more abstract conceptual models [Rum91, Rub92]; to validate requirements in conjunction with prototyping [Sut97], animation [Dub93], or plan generation tools [Fic92]; to generate acceptance test cases [Hsi94].

The work on deficiency-driven requirements elaboration is especially worth pointing out. A system there is specified by a set of goals (formalized in some restricted temporal logic), a set of scenarios (expressed in a Petri net-like language), and a set of agents producing restricted scenarios to achieve the goals they are assigned to. The technique is twofold: (a) detect inconsistencies between scenarios and goals; (b) apply operators that modify the specification to remove the inconsistencies. Step (a) is carried out by a planner that searches for scenarios leading to some goal violation.
(Model checkers might probably do the same job in a more efficient way [McM93, Hol97, Cla99].) The operators offered to the analyst in Step (b) encode heuristics for specification debugging - e.g., introduce an agent whose responsibility is to prevent the state transitions that are the last step in breaking the goal. There are operators for introducing new types of agents with appropriate responsibilities, splitting existing types, introducing communication and synchronization protocols between agents, weakening idealized goals, and so forth. The repeated application of deficiency detection and debugging operators allows the analyst to explore the space of alternative models and hopefully converge towards a satisfactory system specification.

The problem with scenarios is that they are inherently partial; they raise a coverage problem similar to test cases, making it impossible to verify the absence of errors. Instance-level trace descriptions also raise the combinatorial explosion problem inherent to the enumeration of combinations of individual behaviors. Scenarios are generally procedural, thus introducing risks of overspecification. The description of interaction sequences between the software and its environment may force premature choices on the precise boundary between them. Last but not least, scenarios leave required properties about the intended system implicit, in the same way as safety/liveness properties are implicit in a program trace. Work has therefore begun on inferring goal/requirement specifications from scenarios in order to support more abstract, goal-level reasoning [Lam98c].

Back to groundwork

In parallel with all the work outlined above, there has been some more fundamental work on clarifying the real nature of requirements [Jac95, Par95, Zav97]. This was motivated by a certain level of confusion and amalgam in the literature on requirements and software specifications. At about the same time, Jackson and Parnas independently made a first important distinction between
domain properties (called indicative in [Jac95] and NAT in [Par95]) and requirements (called optative in [Jac95] and REQ in [Par95]). Such distinction is essential as physical laws, organizational policies, regulations, or definitions of objects or operations in the environment are by no means requirements. Surprisingly, the vast majority of specification languages existing to date do not support that distinction. A second important distinction made by Jackson and Parnas was between (system) requirements and (software) specifications. Requirements are formulated in terms of objects in the real world, in a vocabulary accessible to stakeholders [Jac95]; they capture required relations between objects in the environment that are monitored and controlled by the software, respectively [Par95]. Software specifications are formulated in terms of objects manipulated by the software, in a vocabulary accessible to programmers; they capture required relations between input and output software objects. Accuracy goals are non-functional goals requiring that the state of input/output software objects accurately reflect the state of the corresponding monitored/controlled objects they represent [Myl92, Dar93]. Such goals often are to be achieved partly by agents in the environment and partly by agents in the software. They are often overlooked in the RE process; their violation may lead to major failures [LAS93, Lam2Ka]. A further distinction has to be made between requirements and assumptions.
Although they are both optative, requirements are to be enforced by the software whereas assumptions can be enforced by agents in the environment only [Lam98b]. If R denotes the set of requirements, As the set of assumptions, S the set of software specifications, Ac the set of accuracy goals, D the set of domain properties, and G the set of goals, the following satisfaction relations must hold:

\[ S, \mathit{Ac}, D \models R \quad \text{with} \quad S, \mathit{Ac}, D \not\models \mathit{false} \]
\[ R, \mathit{As}, D \models G \quad \text{with} \quad R, \mathit{As}, D \not\models \mathit{false} \]

The reactive systems line

In parallel with all the efforts discussed above, a dedicated stream of research has been devoted to the specific area of reactive systems for process control. The seminal paper here was based on work by Heninger, Parnas and colleagues while reengineering the flight software for the A-7 aircraft [Hen80]. The paper introduced SCR, a tabular specification technique for specifying a reactive system by a set of parallel finite-state machines. Each of them is defined by different types of mathematical functions represented in tabular format. A mode transition table defines a mode (i.e. a state) as a transition function of a mode and an event; an event table defines an output variable (or auxiliary quantity) as a func-

JEC Submission Requirements and Guidelines

Submissions to the Journal of Engineering and Computing (JEC) must adhere to strict requirements and guidelines to ensure the quality and consistency of the content. Authors are expected to submit original, unpublished work that contributes significantly to the field of engineering and computing. The manuscript should be formatted in accordance with the journal's style guidelines, including the use of appropriate headings, subheadings, and citations.

In terms of length, manuscripts should be concise yet comprehensive, typically ranging between 4000 and 6000 words. Authors are encouraged to provide clear and precise language, avoiding jargon or technical terms that may not be familiar to a general readership. The use of figures, tables, and equations should be sparing and only when they effectively enhance the understanding of the presented material.

International Journal of Pavement Engineering: Submission Requirements

Introduction:
The International Journal of Pavement Engineering (IJPE) is a renowned publication that focuses on the latest advancements, research, and innovations in the field of pavement engineering. As a leading platform for disseminating knowledge and promoting excellence in this domain, IJPE has specific requirements for manuscript submissions. This article aims to provide a comprehensive overview of these requirements, highlighting key points that authors should consider when submitting their work.

I. Manuscript Structure:

1. Title and Abstract:
1.1 The title should be concise, informative, and accurately reflect the content of the manuscript.
1.2 The abstract should provide a brief summary of the research objectives, methodology, key findings, and significance of the study.

2. Introduction:
2.1 Clearly state the research problem and its significance in the field of pavement engineering.
2.2 Provide a comprehensive literature review to establish the context and identify the research gap.
2.3 Clearly state the research objectives and the scope of the study.

3. Methodology:
3.1 Describe the research design, experimental setup, or computational methods used in the study.
3.2 Explain the data collection process, including the selection criteria for samples or data sources.
3.3 Detail the data analysis techniques or statistical methods employed.

4. Results and Discussion:
4.1 Present the findings in a logical and organized manner, using tables, figures, or graphs where appropriate.
4.2 Analyze and interpret the results, comparing them with existing literature or theoretical expectations.
4.3 Discuss the implications of the findings, highlighting their significance and contributions to the field.

5. Conclusion:
5.1 Summarize the main findings and their implications.
5.2 Discuss the limitations of the study and suggest potential areas for future research.
5.3 Emphasize the practical relevance and application of the research.

II. Formatting and Style:

1. Manuscript Length:
1.1 The manuscript should be concise, typically between 6,000 and 8,000 words, excluding references and appendices.
1.2 Avoid excessive repetition or redundancy in the content.

2. Language and Grammar:
2.1 Ensure the use of clear, concise, and grammatically correct language.
2.2 Follow the journal's preferred spelling and punctuation style.

3. Figures and Tables:
3.1 Include high-quality figures and tables that enhance the understanding of the research.
3.2 Clearly label and reference all figures and tables within the text.

III. Citations and References:

1. Citation Style:
1.1 Follow the journal's preferred citation style, such as APA, MLA, or IEEE.
1.2 Ensure accurate and consistent citation throughout the manuscript.

2. References:
2.1 Include a comprehensive list of all cited references at the end of the manuscript.
2.2 Follow the journal's guidelines for formatting the references, including the use of DOI or URL where applicable.

IV. Ethical Considerations:

1. Plagiarism:
1.1 Ensure that all content is original and properly cited.
1.2 Avoid self-plagiarism by properly referencing any previously published work by the same authors.

2. Conflict of Interest:
2.1 Disclose any potential conflicts of interest that may influence the research or its interpretation.

3. Ethical Approval:
3.1 If applicable, mention any necessary ethical approvals obtained for the research involving human subjects or animals.

Conclusion:
In conclusion, the International Journal of Pavement Engineering has specific requirements for manuscript submissions. Authors should carefully adhere to these guidelines to ensure their work is accurately and professionally presented. By following the recommended structure, formatting, and ethical considerations, researchers can increase their chances of publication in this prestigious journal and contribute to the advancement of pavement engineering knowledge.

Submission Guidelines for System Engineering and Electronic Technology

Thank you for considering submitting your work to the System Engineering and Electronic Technology journal. To ensure smooth processing of your submission, please carefully read and follow the guidelines below:

1. Manuscript Preparation:
- All manuscripts should be written in English and formatted according to the journal's guidelines.
- Manuscripts should be submitted in Microsoft Word format.
- Ensure the manuscript is double-spaced, with 1-inch margins, and uses 12-point Times New Roman font.
- Include a title page with the title (concise and informative), the authors' names and affiliations, the corresponding author's contact information, and a list of keywords.
- The abstract should be no more than 250 words and provide a brief overview of the research conducted, methods used, and key findings.
- References should be cited in APA style and listed at the end of the manuscript.

2. Submission Process:
- Authors are required to submit their manuscripts online through the journal's submission system. Register an account and follow the instructions to upload your manuscript.
- Authors may track the status of their submission through the online system.
- Only original work that has not been published elsewhere will be considered for publication.
- Ensure all necessary permissions and copyrights are obtained for any figures, tables, or supplementary material used in the manuscript.

3. Peer Review Process:
- All submitted manuscripts will undergo a rigorous peer review process to ensure quality and relevance to the journal.
- The review process is double-blind, where both authors and reviewers remain anonymous.
- Authors may suggest potential reviewers during the submission process, but the final selection is at the discretion of the editorial board.

4. Ethical Considerations:
- Authors should ensure that their work is original and has not been plagiarized from any other source.
- Proper acknowledgment and citation should be given for any contributions or sources used in the research.
- Any conflicts of interest should be disclosed in the manuscript.

5. Publication Fees:
- System Engineering and Electronic Technology does not charge any submission or publication fees.

We look forward to receiving your submission and thank you for considering the System Engineering and Electronic Technology journal for publication. If you have any questions or concerns, please contact the editorial office at [email protected]

Best regards,
Editorial Team
System Engineering and Electronic Technology

Detailed Explanation of the Manuscript Review Process for English-Language Academic Journals

The manuscript review process for English academic journals is a crucial step in ensuring the quality and integrity of published research. This process involves a thorough evaluation of the submitted manuscript by a panel of experts in the field, with the ultimate goal of selecting high-quality, impactful, and original contributions that meet the journal's standards. The review process can vary slightly across different journals, but generally, it follows a similar structure.

The first step in the review process is the initial screening by the journal's editorial team. This phase involves a cursory examination of the manuscript to ensure that it aligns with the journal's scope, follows the required formatting and submission guidelines, and meets the minimum criteria for further consideration. The editorial team may also check for potential ethical concerns, conflicts of interest, or instances of plagiarism. If the manuscript passes this initial screening, it will be forwarded to the next stage of the review process.

The second step is the peer review process. The manuscript is typically assigned to one or more subject-matter experts, known as peer reviewers, who are selected based on their expertise and relevant research experience. These reviewers are tasked with conducting a comprehensive evaluation of the manuscript, which includes assessing the research methodology, the validity of the findings, the accuracy of the literature review, the clarity of the writing, and the overall contribution to the field.

The peer reviewers are often required to provide detailed, constructive feedback to the authors, highlighting the strengths and weaknesses of the manuscript. This feedback may include suggestions for improving the manuscript, such as clarifying the research questions, strengthening the data analysis, or enhancing the presentation of the findings. The peer reviewers are also expected to provide a recommendation to the journal's editorial team regarding the suitability of the manuscript for publication.

Once the peer review process is complete, the editorial team will carefully consider the feedback and recommendations provided by the reviewers. They may engage in further discussions with the authors, requesting additional information or revisions to address the concerns raised by the reviewers. This stage of the review process is often referred to as the "revise and resubmit" phase, during which the authors have the opportunity to address the issues identified by the reviewers and resubmit their manuscript for further consideration.

If the manuscript is deemed suitable for publication, the editorial team will typically provide the authors with a formal acceptance letter, outlining the next steps in the publication process. This may include additional editorial and formatting requirements, as well as information about the expected timeline for the publication.

It is important to note that manuscript review can be a lengthy and iterative process, as the editorial team and peer reviewers strive to ensure the highest level of quality and rigor in the published research. Authors may need to be patient and responsive throughout this process, as the journal's staff works diligently to provide a fair and thorough evaluation of their work.

In addition to the standard peer review process, some journals may also incorporate additional review mechanisms, such as open peer review or post-publication peer review. Open peer review allows the authors and the public to access the reviewers' comments and engage in discussions about the manuscript, while post-publication peer review encourages ongoing feedback and commentary on the published work.

The manuscript review process is not only crucial for maintaining the integrity of academic research but also plays a vital role in the advancement of knowledge within a particular field. By subjecting manuscripts to rigorous scrutiny, journals can ensure that the research published within their pages is of the highest quality, making a meaningful contribution to the broader academic community.

In conclusion, the manuscript review process for English academic journals is a crucial step in the publication process. From the initial screening to the final decision, the review process is designed to uphold the standards of academic excellence, foster the development of new knowledge, and promote the dissemination of high-impact research. By understanding and respecting this process, authors can better navigate the publication landscape and contribute to the ongoing progress of their respective fields.

Materials Letters

Introduction
Materials Letters is a reputable scholarly journal focused on the latest advancements and research in the field of materials science. It provides a valuable platform for researchers, scientists, and engineers to publish their original contributions and share their findings with the scientific community. This document aims to provide an overview of Materials Letters, including its scope, submission process, and the benefits of publishing in this prestigious journal.

Scope
Materials Letters covers a wide range of topics in materials science, including but not limited to:
1. Advanced materials
2. Biomaterials
3. Composites
4. Electronic materials
5. Energy materials
6. Nanomaterials
7. Optical materials
8. Sustainable materials
9. Thin films

The journal accepts submissions from various disciplines, such as chemistry, physics, engineering, and biology. It encourages interdisciplinary research and promotes the integration of different approaches to materials science.

Submission Process
Submitting a manuscript to Materials Letters is a straightforward process. Authors are required to adhere to the journal's guidelines and formatting requirements. The submission process can be summarized as follows:
1. Manuscript preparation: Authors should carefully prepare their manuscript, ensuring it meets the journal's formatting guidelines. This includes organizing the content with headings, subheadings, figures, and tables.
2. Online submission: Authors can submit their manuscript online through the journal's submission system. They are required to provide information about the article's title, authors, affiliations, and abstract. The manuscript should be uploaded as a well-formatted PDF file.
3. Peer review: Once the manuscript is submitted, it goes through a rigorous peer review process. Experts in the field evaluate the manuscript's scientific validity, methodology, and significance. The peer reviewers provide constructive feedback to the authors, who may be required to make revisions based on this feedback.
4. Acceptance and publication: If the manuscript successfully passes the peer review process, it is accepted for publication. The authors are notified about the acceptance, and the article is scheduled for publication in one of the upcoming issues of Materials Letters. The journal follows a continuous publication model, ensuring timely dissemination of research.

Benefits of Publishing in Materials Letters
Publishing in Materials Letters offers several benefits for researchers and scientists in the field of materials science:
1. Visibility and Impact: Materials Letters has a wide readership and is indexed in various scientific databases, increasing the visibility and impact of published articles. This enhances the reputation and recognition of researchers and their work.
2. Rapid Publication: Materials Letters strives to ensure rapid publication of accepted manuscripts. This allows researchers to share their findings with the scientific community promptly, accelerating the dissemination of knowledge.
3. Quality and Rigor: As a reputable journal, Materials Letters maintains high standards for scientific rigor and quality. It follows a rigorous peer review process, ensuring that published articles meet the highest scientific standards.
4. Interdisciplinary Collaboration: Materials Letters encourages interdisciplinary collaboration by accepting articles from various disciplines within materials science. This fosters the integration of different approaches and facilitates collaborations between researchers from diverse backgrounds.
5. Open Access Options: Materials Letters offers open access options, allowing articles to be freely accessible to a wider audience. This promotes the democratization of knowledge and facilitates global collaboration.

Conclusion
Materials Letters is a premier journal in the field of materials science, providing a platform for researchers to publish and share their original contributions. With its wide scope, rigorous peer review process, and reputation for quality, the journal offers numerous benefits to authors. Publishing in Materials Letters not only enhances the visibility and impact of research but also fosters interdisciplinary collaboration and promotes the advancement of materials science.

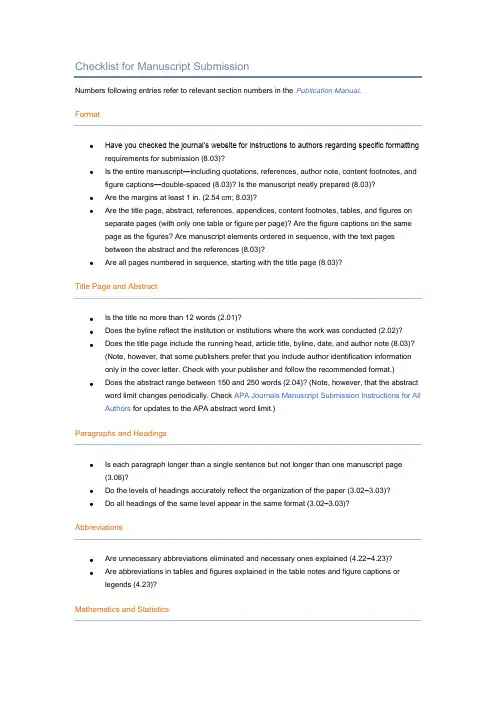

Checklist for Manuscript Submission

Numbers following entries refer to relevant section numbers in the Publication Manual.

Format
• Have you checked the journal's website for instructions to authors regarding specific formatting requirements for submission (8.03)?
• Is the entire manuscript, including quotations, references, author note, content footnotes, and figure captions, double-spaced (8.03)? Is the manuscript neatly prepared (8.03)?
• Are the margins at least 1 in. (2.54 cm; 8.03)?
• Are the title page, abstract, references, appendices, content footnotes, tables, and figures on separate pages (with only one table or figure per page)? Are the figure captions on the same page as the figures? Are manuscript elements ordered in sequence, with the text pages between the abstract and the references (8.03)?
• Are all pages numbered in sequence, starting with the title page (8.03)?

Title Page and Abstract
• Is the title no more than 12 words (2.01)?
• Does the byline reflect the institution or institutions where the work was conducted (2.02)?
• Does the title page include the running head, article title, byline, date, and author note (8.03)? (Note, however, that some publishers prefer that you include author identification information only in the cover letter. Check with your publisher and follow the recommended format.)
• Does the abstract range between 150 and 250 words (2.04)? (Note, however, that the abstract word limit changes periodically. Check APA Journals Manuscript Submission Instructions for All Authors for updates to the APA abstract word limit.)

Paragraphs and Headings
• Is each paragraph longer than a single sentence but not longer than one manuscript page (3.08)?
• Do the levels of headings accurately reflect the organization of the paper (3.02–3.03)?
• Do all headings of the same level appear in the same format (3.02–3.03)?

Abbreviations
• Are unnecessary abbreviations eliminated and necessary ones explained (4.22–4.23)?
• Are abbreviations in tables and figures explained in the table notes and figure captions or legends (4.23)?

Mathematics and Statistics
• Are Greek letters and all but the most common mathematical symbols identified on the manuscript (4.45, 4.49)?
• Are all non-Greek letters that are used as statistical symbols for algebraic variables in italics (4.45)?

Units of Measurement
• Are metric equivalents for all nonmetric units provided (except measurements of time, which have no metric equivalents; see 4.39)?
• Are all metric and nonmetric units with numeric values (except some measurements of time) abbreviated (4.27, 4.40)?

References
• Are references cited both in text and in the reference list (6.11–6.21)?
• Do the text citations and reference list entries agree both in spelling and in date (6.11–6.21)?
• Are journal titles in the reference list spelled out fully (6.29)?
• Are the references (both in the parenthetical text citations and in the reference list) ordered alphabetically by the authors' surnames (6.16, 6.25)?
• Are inclusive page numbers for all articles or chapters in books provided in the reference list (7.01, 7.02)?
• Are references to studies included in your meta-analysis preceded by an asterisk (6.26)?

Notes and Footnotes
• Is the departmental affiliation given for each author in the author note (2.03)?
• Does the author note include both the author's current affiliation if it is different from the byline affiliation and a current address for correspondence (2.03)?
• Does the author note disclose special circumstances about the article (portions presented at a meeting, student paper as basis for the article, report of a longitudinal study, relationship that may be perceived as a conflict of interest; 2.03)?
• In the text, are all footnotes indicated, and are footnote numbers correctly located (2.12)?

Tables and Figures
• Does every table column, including the stub column, have a heading (5.13, 5.19)?
• Have all vertical table rules been omitted (5.19)?
• Are all tables referred to in text (5.19)?
• Are the elements in the figures large enough to remain legible after the figure has been reduced to the width of a journal column or page (5.22, 5.25)?
• Is lettering in a figure no smaller than 8 points and no larger than 14 points (5.25)?
• Are the figures being submitted in a file format acceptable to the publisher (5.30)?
• Has the figure been prepared at a resolution sufficient to produce a high-quality image (5.25)?
• Are all figures numbered consecutively with Arabic numerals (5.30)?
• Are all figures and tables mentioned in the text and numbered in the order in which they are mentioned (5.05)?

Copyright and Quotations
• Is written permission to use previously published text; test; or portions of tests, tables, or figures enclosed with the manuscript (6.10)? See Permissions Alert (PDF: 16KB) for more information.
• Are page or paragraph numbers provided in text for all quotations (6.03, 6.05)?

Submitting the Manuscript
• Is the journal editor's contact information current (8.03)?
• Is a cover letter included with the manuscript? Does the letter
  a. include the author's postal address, e-mail address, telephone number, and fax number for future correspondence?
  b. state that the manuscript is original, not previously published, and not under concurrent consideration elsewhere?
  c. inform the journal editor of the existence of any similar published manuscripts written by the author (8.03, Figure 8.1)?
  d. mention any supplemental material you are submitting for the online version of your article?

Manuscript Requirements, Submission Methods, Acceptance, and Rewards: English Essays

Three sample essays in total, for readers' reference.

Essay 1

Title: Submission Requirements, Submission Process, Acceptance, and Rewards for Manuscripts

Introduction:
Submitting manuscripts for publication in academic journals is a crucial step in the dissemination of research findings and scholarly contributions. In order to streamline the submission process, ensure the quality of submissions, and reward authors for their hard work, journals often have specific requirements for manuscript submission, a well-defined process for reviewing submissions, criteria for accepting manuscripts, and rewards for accepted publications.

Submission Requirements:
Authors must adhere to the submission requirements set forth by the journal in order for their manuscripts to be considered for publication. These requirements may include guidelines for formatting, word count, referencing style, and ethical considerations such as plagiarism and conflicts of interest. It is important for authors to carefully read and follow the submission requirements to increase their chances of acceptance.

Submission Process:
Once a manuscript is submitted to a journal, it undergoes a rigorous peer-review process to evaluate its quality, originality, and relevance to the journal's scope. The submission process typically involves multiple rounds of review by experts in the field, who provide feedback and recommendations for revisions. Authors are often required to respond to reviewers' comments and make necessary revisions to their manuscripts before a final decision is made.

Acceptance:
Manuscripts that meet the journal's standards for quality and originality are accepted for publication. Accepted manuscripts may undergo additional editing and formatting to ensure they meet the journal's publishing standards. Authors are then notified of the acceptance of their manuscripts and provided with information on the publication process and timeline.

Rewards:
Authors whose manuscripts are accepted for publication are often rewarded in various ways. Some journals offer financial incentives, such as publication fees or royalties, to authors whose manuscripts are accepted. Other rewards may include recognition in the form of awards, certificates, or prizes. Additionally, publication in a reputable journal can enhance authors' credibility and visibility in their field, leading to career advancement opportunities and increased impact of their research.

Conclusion:
In conclusion, submitting manuscripts for publication involves adhering to submission requirements, navigating the submission process, meeting acceptance criteria, and potentially reaping rewards for accepted publications. By understanding and following the guidelines set forth by journals, authors can increase their chances of success and contribute valuable research findings to the academic community.

Essay 2

Submission Requirements, Submission Methods, Acceptance, and Rewards

Submission Requirements:
1. Submissions must be original and previously unpublished works.
2. Submissions must be related to the specified theme or topic.
3. Submissions must be written in English.
4. Submissions must adhere to the specified word count and format guidelines.
5. Submissions must be accompanied by a cover letter containing the author's contact information.

Submission Methods:
1. Submissions can be sent via email to the specified email address.
2. Submissions can be uploaded to the specified online submission platform.
3. Submissions can be mailed to the specified mailing address.

Acceptance:
1. Submissions will be reviewed by a panel of judges.
2. Authors will be notified of the acceptance or rejection of their submissions.
3. Accepted submissions will be published in the designated publication.

Rewards:
1. Authors of accepted submissions will receive a monetary reward.
2. Authors of accepted submissions will receive a certificate of achievement.
3. Authors of accepted submissions will have the opportunity to participate in special events or activities.

In conclusion, authors are encouraged to carefully adhere to the submission requirements, choose the appropriate submission method, and await the acceptance notification and rewards for their hard work.

Essay 3

Submission Guidelines: Submission, Acceptance, Rewards

Submission Guidelines:
To submit a manuscript for consideration, please follow these guidelines:
************************************************* Word format.
2. Include the title of your manuscript in the subject line.
3. Include a cover letter with your contact information, a brief summary of your manuscript, and any relevant disclosures.
4. Manuscripts must be original and not under consideration elsewhere.
5. Ensure all co-author disclosures are included.
6. Follow the journal's style guide for formatting and citation requirements.

Acceptance Policy:
Manuscripts are reviewed by our editorial team and external reviewers. Acceptance decisions are based on the quality, relevance, and originality of the manuscript. Authors will be notified of acceptance within 4-6 weeks of submission.

Rewards:
1. Publication: Accepted manuscripts will be published in the journal.
2. Financial Reward: Authors of accepted manuscripts will receive a monetary reward based on the quality and impact of their work.
3. Recognition: Authors will be recognized for their contributions in the journal and on the journal's website.
4. Networking: Authors will have the opportunity to connect with other researchers, scholars, and professionals in their field.
5. Career Advancement: Publication in a reputable journal can enhance an author's reputation and career prospects.

Submit your manuscript today and be on your way to publication, rewards, and recognition!

For any questions or further information, please contact ******************.

Thank you for considering submitting your work to our journal. We look forward to receiving your manuscript!