Visualizing the Risk of Global Sourcing

disruption management: Global convergence vs national
Sustainable supply chain management practices and dynamic capabilities in the food industry: A critical analysis of the literature
5. Mesoscopic supply chain simulation
6. Firm size and sustainable performance in food supply chains: Insights from Greek SMEs
7. An analytical method for cost analysis in multi-stage supply chains: A stochastic network model approach
8. A Roadmap to Green Supply Chain System through Enterprise Resource Planning (ERP) Implementation
9. Unidirectional transshipment policies in a dual-channel supply chain
10. Decentralized and centralized model predictive control to reduce the bullwhip effect in supply chain management
11. An agent-based distributed computational experiment framework for virtual supply chain network development
12. Biomass-to-bioenergy and biofuel supply chain optimization: Overview, key issues and challenges
13. The benefits of supply chain visibility: A value assessment model
14. An Institutional Theory perspective on sustainable practices across the dairy supply chain
15. Two-stage stochastic programming supply chain model for biodiesel production via wastewater treatment
16. Technology scale and supply chains in a secure, affordable and low carbon energy transition
17. Multi-period design and planning of closed-loop supply chains with uncertain supply and demand
18. Quality control in food supply chain management: An analytical model and case study of the adulterated milk incident in China
19. Supply chain information capabilities and performance outcomes: An empirical study of Korean steel suppliers
20. A game-based approach towards facilitating decision making for perishable products: An example of blood supply chain
21. Supply chain design under quality disruptions and tainted materials delivery
22. A two-level replenishment frequency model for TOC supply chain replenishment systems under capacity constraint
23. Supply chain dynamics and the "cross-border effect": The U.S.–Mexican border's case
24. Designing a new supply chain for competition against an existing supply chain
25. Universal supplier selection via multi-dimensional auction mechanisms for two-way competition in oligopoly market of supply chain
26. Using TODIM to evaluate green supply chain practices under uncertainty
27. Supply chain downsizing under bankruptcy: A robust optimization approach
28. Coordination mechanism for a deteriorating item in a two-level supply chain system
29. An accelerated Benders decomposition algorithm for sustainable supply chain network design under uncertainty: A case study of medical needle and syringe supply chain
30. Bullwhip Effect Study in a Constrained Supply Chain
31. Two-echelon multiple-vehicle location–routing problem with time windows for optimization of sustainable supply chain network of perishable food
32. Research on pricing and coordination strategy of green supply chain under hybrid production mode
33. Agent-system co-development in supply chain research: Propositions and demonstrative findings
34. Tactical management for coordinated supply chains
35. Photovoltaic supply chain coordination with strategic consumers in China
36. Coordinating supplier's reorder point: A coordination mechanism for supply chains with long supplier lead time
37. Assessment and optimization of forest biomass supply chains from economic, social and environmental perspectives – A review of literature
38. The effects of a trust mechanism on a dynamic supply chain network
39. Economic and environmental assessment of reusable plastic containers: A food catering supply chain case study
40. Competitive pricing and ordering decisions in a multiple-channel supply chain
41. Pricing in a supply chain for auction bidding under information asymmetry
42. Dynamic analysis of feasibility in ethanol supply chain for biofuel production in Mexico
43. The impact of partial information sharing in a two-echelon supply chain
44. Choice of supply chain governance: Self-managing or outsourcing?
45. Joint production and delivery lot sizing for a make-to-order producer–buyer supply chain with transportation cost
46. Hybrid algorithm for a vendor managed inventory system in a two-echelon supply chain
47. Traceability in a food supply chain: Safety and quality perspectives
48. Transferring and sharing exchange-rate risk in a risk-averse supply chain of a multinational firm
49. Analyzing the impacts of carbon regulatory mechanisms on supplier and mode selection decisions: An application to a biofuel supply chain
50. Product quality and return policy in a supply chain under risk aversion of a supplier
51. Mining logistics data to assure the quality in a sustainable food supply chain: A case in the red wine industry
52. Biomass supply chain optimisation for Organosolv-based biorefineries
53. Exact solutions to the supply chain equations for arbitrary, time-dependent demands
54. Designing a sustainable closed-loop supply chain network based on triple bottom line approach: A comparison of metaheuristics hybridization techniques
55. A study of the LCA based biofuel supply chain multi-objective optimization model with multi-conversion paths in China
56. A hybrid two-stock inventory control model for a reverse supply chain
57. Dynamics of judicial service supply chains
58. Optimizing an integrated vendor-managed inventory system for a single-vendor two-buyer supply chain with determining weighting factor for vendor's ordering
59. Measuring Supply Chain Resilience Using a Deterministic Modeling Approach
60. A LCA Based Biofuel Supply Chain Analysis Framework
61. A neo-institutional perspective of supply chains and energy security: Bioenergy in the UK
62. Modified penalty function method for optimal social welfare of electric power supply chain with transmission constraints
63. Optimization of blood supply chain with shortened shelf lives and ABO compatibility
64. Diversified firms on dynamical supply chain cope with financial crisis better
65. Securitization of energy supply chains in China
66. Optimal design of the auto parts supply chain for JIT operations: Sequential bifurcation factor screening and multi-response surface methodology
67. Achieving sustainable supply chains through energy justice
68. Supply chain agility: Securing performance for Chinese manufacturers
69. Energy price risk and the sustainability of demand side supply chains
70. Strategic and tactical mathematical programming models within the crude oil supply chain context – A review
71. An analysis of the structural complexity of supply chain networks
72. Business process re-design methodology to support supply chain integration
73. Could supply chain technology improve food operators' innovativeness? A developing country's perspective
74. RFID-enabled process reengineering of closed-loop supply chains in the healthcare industry of Singapore
75. Order-Up-To policies in Information Exchange Supply Chains
76. Robust design and operations of hydrocarbon biofuel supply chain integrating with existing petroleum refineries considering unit cost objective
77. Trade-offs in supply chain transparency: the case of Nudie Jeans
78. Healthcare supply chain operations: Why are doctors reluctant to consolidate?
79. Impact on the optimal design of bioethanol supply chains by a new European Commission proposal
80. Managerial research on the pharmaceutical supply chain – A critical review and some insights for future directions
81. Supply chain performance evaluation with data envelopment analysis and balanced scorecard approach
82. Integrated supply chain design for commodity chemicals production via woody biomass fast pyrolysis and upgrading
83. Governance of sustainable supply chains in the fast fashion industry
84. Temperature management for the quality assurance of a perishable food supply chain
85. Modeling of biomass-to-energy supply chain operations: Applications, challenges and research directions
86. Assessing Risk Factors in Collaborative Supply Chain with the Analytic Hierarchy Process (AHP)
87. Random network models and sensitivity algorithms for the analysis of ordering time and inventory state in multi-stage supply chains
88. Information sharing and collaborative behaviors in enabling supply chain performance: A social exchange perspective
89. The coordinating contracts for a fuzzy supply chain with effort and price dependent demand
90. Criticality analysis and the supply chain: Leveraging representational assurance
91. Economic model predictive control for inventory management in supply chains
92. Supply chain management ontology from an ontology engineering perspective
93. Surplus division and investment incentives in supply chains: A biform-game analysis
94. Biofuels for road transport: Analysing evolving supply chains in Sweden from an energy security perspective
95. Supply chain management executives in corporate upper echelons
96. Sustainable supply chain management in the fast fashion industry: An analysis of corporate reports
97. An improved method for managing catastrophic supply chain disruptions
98. The equilibrium of closed-loop supply chain supernetwork with time-dependent parameters
99. A bi-objective stochastic programming model for a centralized green supply chain with deteriorating products
100. Simultaneous control of vehicle routing and inventory for dynamic inbound supply chain
101. Environmental impacts of roundwood supply chain options in Michigan: life-cycle assessment of harvest and transport stages
102. A recovery mechanism for a two echelon supply chain system under supply disruption
103. Challenges and Competitiveness Indicators for the Sustainable Development of the Supply Chain in Food Industry
104. Is doing more doing better? The relationship between responsible supply chain management and corporate reputation
105. Connecting product design, process and supply chain decisions to strengthen global supply chain capabilities
106. A computational study for common network design in multi-commodity supply chains
107. Optimal production and procurement decisions in a supply chain with an option contract and partial backordering under uncertainties
108. Methods to optimise the design and management of biomass-for-bioenergy supply chains: A review
109. Reverse supply chain coordination by revenue sharing contract: A case for the personal computers industry
110. SCOlog: A logic-based approach to analysing supply chain operation dynamics
111. Removing the blinders: A literature review on the potential of nanoscale technologies for the management of supply chains
112. Transition inertia due to competition in supply chains with remanufacturing and recycling: A systems dynamics model
113. Optimal design of advanced drop-in hydrocarbon biofuel supply chain integrating with existing petroleum refineries under uncertainty
114. Revenue-sharing contracts across an extended supply chain
115. An integrated revenue sharing and quantity discounts contract for coordinating a supply chain dealing with short life-cycle products
116. Total JIT (T-JIT) and its impact on supply chain competency and organizational performance
117. Logistical supply chain design for bioeconomy applications
118. A note on "Quality investment and inspection policy in a supplier-manufacturer supply chain"
119. Developing a Resilient Supply Chain
120. Cyber supply chain risk management: Revolutionizing the strategic control of critical IT systems
121. Defining value chain architectures: Linking strategic value creation to operational supply chain design
122. Aligning the sustainable supply chain to green marketing needs: A case study
123. Decision support and intelligent systems in the textile and apparel supply chain: An academic review of research articles
124. Supply chain management capability of small and medium sized family businesses in India: A multiple case study approach
125. Supply chain collaboration: Impact of success in long-term partnerships
126. Collaboration capacity for sustainable supply chain management: small and medium-sized enterprises in Mexico
127. Advanced traceability system in aquaculture supply chain
128. Supply chain information systems strategy: Impacts on supply chain performance and firm performance
129. Performance of supply chain collaboration – A simulation study
130. Coordinating a three-level supply chain with delay in payments and a discounted interest rate
131. An integrated framework for agent based inventory–production–transportation modeling and distributed simulation of supply chains
132. Optimal supply chain design and management over a multi-period horizon under demand uncertainty. Part I: MINLP and MILP models
133. The impact of knowledge transfer and complexity on supply chain flexibility: A knowledge-based view
134. An innovative supply chain performance measurement system incorporating Research and Development (R&D) and marketing policy
135. Robust decision making for hybrid process supply chain systems via model predictive control
136. Combined pricing and supply chain operations under price-dependent stochastic demand
137. Balancing supply chain competitiveness and robustness through "virtual dual sourcing": Lessons from the Great East Japan Earthquake
138. Solving a tri-objective supply chain problem with modified NSGA-II algorithm
139. Sustaining long-term supply chain partnerships using price-only contracts
140. On the impact of advertising initiatives in supply chains
141. A typology of the situations of cooperation in supply chains
142. A structured analysis of operations and supply chain management research in healthcare (1982–2011)
143. Supply chain practice and information quality: A supply chain strategy study
144. Manufacturer's pricing strategy in a two-level supply chain with competing retailers and advertising cost dependent demand
145. Closed-loop supply chain network design under a fuzzy environment
146. Timing and eco(nomic) efficiency of climate-friendly investments in supply chains
147. Post-seismic supply chain risk management: A system dynamics disruption analysis approach for inventory and logistics planning
148. The relationship between legitimacy, reputation, sustainability and branding for companies and their supply chains
149. Linking supply chain configuration to supply chain performance: A discrete event simulation model
150. An integrated multi-objective model for allocating the limited sources in a multiple multi-stage lean supply chain
151. Price and leadtime competition, and coordination for make-to-order supply chains
152. A model of resilient supply chain network design: A two-stage programming with fuzzy shortest path
153. Lead time variation control using reliable shipment equipment: An incentive scheme for supply chain coordination
154. Interpreting supply chain dynamics: A quasi-chaos perspective
155. A production-inventory model for a two-echelon supply chain when demand is dependent on sales teams' initiatives
156. Coordinating a dual-channel supply chain with risk-averse under a two-way revenue sharing contract
157. Energy supply planning and supply chain optimization under uncertainty
158. A hierarchical model of the impact of RFID practices on retail supply chain performance
159. An optimal solution to a three echelon supply chain network with multi-product and multi-period
160. A multi-echelon supply chain model for municipal solid waste management system
161. A multi-objective approach to supply chain visibility and risk
162. An integrated supply chain model with errors in quality inspection and learning in production
163. A fuzzy AHP-TOPSIS framework for ranking the solutions of Knowledge Management adoption in supply chain to overcome its barriers
164. A relational study of supply chain agility, competitiveness and business performance in the oil and gas industry
165. Cyber supply chain security practices DNA – Filling in the puzzle using a diverse set of disciplines
166. A three layer supply chain model with multiple suppliers, manufacturers and retailers for multiple items
167. Innovations in low input and organic dairy supply chains—What is acceptable in Europe
168. Risk Variables in Wind Power Supply Chain
169. An analysis of supply chain strategies in the regenerative medicine industry—Implications for future development
170. A note on supply chain coordination for joint determination of order quantity and reorder point using a credit option
171. Implementation of a responsive supply chain strategy in global complexity: The case of manufacturing firms
172. Supply chain scheduling at the manufacturer to minimize inventory holding and delivery costs
173. GBOM-oriented management of production disruption risk and optimization of supply chain construction
175. Alliance or no alliance—Bargaining power in competing reverse supply chains
174. Climate change risks and adaptation options across Australian seafood supply chains – A preliminary assessment
176. Designing contracts for a closed-loop supply chain under information asymmetry
177. Chemical supply chain modeling for analysis of homeland security
178. Chain liability in multitier supply chains? Responsibility attributions for unsustainable supplier behavior
179. Quantifying the efficiency of price-only contracts in push supply chains over demand distributions of known supports
180. Closed-loop supply chain network design: A financial approach
181. An integrated supply chain network design problem for bidirectional flows
182. Integrating multimodal transport into cellulosic biofuel supply chain design under feedstock seasonality with a case study based on California
183. Supply chain dynamic configuration as a result of new product development
184. A genetic algorithm for optimizing defective goods supply chain costs using JIT logistics and each-cycle lengths
185. A supply chain network design model for biomass co-firing in coal-fired power plants
186. Finance sourcing in a supply chain
187. Data quality for data science, predictive analytics, and big data in supply chain management: An introduction to the problem and suggestions for research and applications
188. Consumer returns in a decentralized supply chain
189. Cost-based pricing model with value-added tax and corporate income tax for a supply chain network
190. A hard nut to crack! Implementing supply chain sustainability in an emerging economy
191. Optimal location of spelling yards for the northern Australian beef supply chain
192. Coordination of a socially responsible supply chain using revenue sharing contract
193. Multi-criteria decision making based on trust and reputation in supply chain
194. Hydrogen supply chain architecture for bottom-up energy systems models. Part 1: Developing pathways
195. Financialization across the Pacific: Manufacturing cost ratios, supply chains and power
196. Integrating deterioration and lifetime constraints in production and supply chain planning: A survey
197. Joint economic lot sizing problem for a three-layer supply chain with stochastic demand
198. Mean-risk analysis of radio frequency identification technology in supply chain with inventory misplacement: Risk-sharing and coordination
199. Dynamic impact on global supply chains performance of disruptions propagation produced by terrorist acts

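Foreign-Language Literature and Translation for Computer Science Majors: Microsoft Visual Studio

1 Microsoft Visual Studio

Visual Studio is a development environment from Microsoft. It can be used to create Windows applications and web applications for the Windows platform, as well as web services, smart-device applications, and Office add-ins.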

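Visual Studio is an integrated development environment (IDE) from Microsoft. It can be used to develop console and graphical user interface applications, along with Windows Forms applications, websites, web applications, and web services, in both native code and managed code, for the platforms supported by Microsoft Windows, Windows Mobile, Windows CE, the .NET Framework, the .NET Compact Framework, and Microsoft Silverlight.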

Visual Studio includes a code editor that supports IntelliSense as well as code refactoring.

The integrated debugger works both as a source-level debugger and as a machine-level debugger.

Other built-in tools include a forms designer for building GUI applications, a web designer, a class designer, and a database schema designer.

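It accepts plug-ins that enhance its functionality at almost every level, including adding support for source control systems (such as Subversion and Visual SourceSafe) and adding new toolsets, such as editors and visual designers for domain-specific languages, or toolsets for other aspects of the software development lifecycle (for example, the Team Foundation Server client: Team Explorer).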

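Visual Studio supports different programming languages by means of language services, which allow the code editor and debugger to support (to varying degrees) nearly any programming language, provided a language-specific service exists.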

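Built-in languages include C/C++ (via Visual C++), VB.NET (via Visual Basic .NET), C# (via Visual C#), and F# (as of Visual Studio 2010). Support for other languages, such as M, Python, and Ruby, is available by installing separate language services.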

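Three Basic Learning Strategies in Second Language Acquisition

Abstract: Language aptitude and motivation are the main factors affecting the rate and level of second language acquisition.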

But how do they exert this influence? One possibility is that it works indirectly, through the learning strategies that individual learners use.

This paper discusses three basic learning strategies: the visual learner, the auditory learner, and the kinesthetic learner.

Summary

Language aptitude and motivation constitute general factors that influence the rate and level of L2 achievement. But how does their influence operate? One possibility is that they affect the nature and the frequency with which individual learners use learning strategies. This thesis discusses three main learning strategies: the visual learner, the auditory learner, and the kinesthetic learner.

Three Main Learning Strategies

Learning strategies are the particular approaches or techniques that learners employ to try to learn an L2. They can be behavioural (for example, repeating new words aloud to help you remember them) or they can be mental (for example, using the linguistic or situational context to infer the meaning of a new word). They are typically problem-oriented. That is, learners employ learning strategies when they are faced with some problem, such as how to remember a new word. Learners are generally aware of the strategies they use and, when asked, can explain what they did to try to learn something.

Different kinds of learning strategies have been identified. Cognitive strategies are those involved in the analysis, synthesis, or transformation of learning materials. An example is 'recombination', which involves constructing a meaningful sentence by recombining known elements of the L2 in a new way. Metacognitive strategies are those involved in planning, monitoring, and evaluating learning. An example is 'selective attention', where the learner makes a conscious decision to attend to particular aspects of the input. Social/affective strategies concern the ways in which learners choose to interact with other speakers. An example is 'questioning for clarification' (i.e. asking for repetition, a paraphrase, or an example).

There have been various attempts to discover which strategies are important for L2 acquisition. One way is to investigate how 'good language learners' try to learn. This involves identifying learners who have been successful in learning an L2 and interviewing them to find out the strategies that work for them. One of the main findings of such studies is that successful language learners pay attention to both form and meaning. Good language learners are also very active, show awareness of the learning process and their own personal learning styles and, above all, are flexible and appropriate in their use of learning strategies. They seem to be especially adept at using metacognitive strategies.

Other studies have sought to relate learners' reported use of different strategies to their L2 proficiency to try to find out which strategies are important for language development. Such studies have shown, not surprisingly, that successful learners use more strategies than unsuccessful learners. They have also shown that different strategies are related to different aspects of L2 learning. Thus, strategies that involve formal practice contribute to the development of linguistic competence, whereas strategies involving functional practice aid the development of communicative skills. Successful learners may also call on different strategies at different stages of their development. However, there is a problem with how to interpret this research. Does strategy use result in learning, or does learning increase learners' ability to employ more strategies? At the moment, it is not clear.

An obvious question concerns how these learning strategies relate to the general kinds of psycholinguistic processes discussed in the thesis. What strategies are involved in noticing or noticing the gap, for example? Unfortunately, however, no attempt has yet been made to incorporate the various learning strategies that have been identified into a model of psycholinguistic processing. The approach to date has been simply to describe strategies and to quantify their use.

The study of learning strategies is of potential value to language teachers. If those strategies that are crucial for learning can be identified, it may prove possible to train students to use them. We will examine this idea in the broader context of a discussion of the role of instruction in L2 acquisition.

The following are three basic learning strategies.

Visual Learner

Visual learning is a teaching and learning style in which ideas, concepts, data, and other information are associated with images and techniques. Visual learners prefer to have information presented visually: graphs, graphic organizers such as webs, concept maps and idea maps, plots, and illustrations such as stack plots and Venn plots are some of the techniques used in visual learning to enhance thinking and learning skills. Visual learners are said to have a strong instinctive sense of direction, to visualize objects easily, and to be excellent organizers.

Although learning styles have "enormous popularity" and both children and adults express personal preferences, there is no evidence that identifying a student's learning style produces better outcomes, and there is significant evidence that the widespread "meshing hypothesis" (that a student will learn best if taught in a method deemed appropriate for the student's learning style) is invalid. Well-designed studies "flatly contradict the popular meshing hypothesis".

Characteristics of visual learners:

- Reader/observer
- Scans everything; wants to see things; enjoys visual stimulation
- Enjoys maps, pictures, diagrams, and color
- Needs to see the teacher's body language and facial expression to fully understand
- Is not pleased with lectures
- Daydreams; a word, sound, or smell causes recall and mental wandering
- Usually takes detailed notes
- May think in pictures and learn best from visual displays

Study tips for visual learners:

- Have a clear view of your teachers when they are speaking so you can see their body language and facial expression
- Use color to highlight important points in text
- Illustrate your ideas as a picture and use mind maps
- Use multimedia such as computers or videos
- Study in a quiet place away from verbal disturbances
- Visualize information as a picture to aid learning
- Make charts, graphs, and tables in your notes
- Participate actively in class; this will keep you involved and alert
- When memorizing material, write it over and over
- Keep pencil and paper handy so you can write down good ideas

Auditory Learner

Auditory learners are those who learn best through hearing things. They may struggle to understand a chapter they have read, but then experience a full understanding as they listen to the class lecture. An auditory learner may benefit from using the speech recognition tool available on many PCs. Auditory learners may have a knack for ascertaining the true meaning of someone's words by listening to audible signals like changes in tone. When memorizing a phone number, an auditory learner will say it out loud and then remember how it sounded to recall it.

Look over these traits to see if they sound familiar to you. You may be an auditory learner if you are someone who:

- Likes to read to self out loud
- Is not afraid to speak in class
- Likes oral reports
- Is good at explaining
- Remembers names
- Notices sound effects in movies
- Enjoys music
- Is good at grammar and foreign languages
- Reads slowly
- Follows spoken directions well
- Can't keep quiet for long periods
- Enjoys acting and being on stage
- Is good in study groups

Study tips for auditory learners:

- Using word association to remember facts and lines
- Recording lectures
- Watching videos
- Repeating facts with eyes closed
- Participating in group discussions
- Using audiotapes for language practice
- Taping notes after writing them

Kinesthetic Learner

Kinesthetic learners are those who learn through experiencing or doing things. Kinesthetic learning is a learning style in which learning takes place by the student actually carrying out a physical activity, rather than listening to a lecture or merely watching a demonstration. It is also referred to as tactile learning. People with a kinesthetic learning style are also commonly known as do-ers.

According to proponents of the learning styles theory, students who have a predominantly kinesthetic learning style are thought to be natural discovery learners: they have realizations through doing, as opposed to having thought first before initiating action. They may struggle to learn by reading or listening. When revising, it helps such students to move around, as this increases their understanding, and learners generally get better marks in exams when they use that style. The kinesthetic learner usually does well in things such as chemistry experiments, sporting activities, art, and acting. Kinesthetic learners may also listen to music while learning or studying. It is common for them to focus on two different things at the same time. They will remember things by going back in their minds to what their body was doing. They also have very high hand-eye coordination and very quick receptors.

Kinesthetic learning is a learning style in which learning takes place by the learner using their body to express a thought, an idea, or an understanding of a particular concept (which could be related to any field). People with a dominant kinesthetic and tactile learning style are commonly known as do-ers. In an elementary classroom setting, these students may stand out because of their constant need to move and their high levels of energy, which may cause them to be agitated, restless, or impatient. Kinesthetic learners' short- and long-term memory is strengthened by their use of their own body's movements. They will often remember things by going back in their minds and visualizing their own body's movements.

The following are traits of a kinesthetic learner:

- Is good at sports
- Can't sit still for long
- Is not great at spelling
- Does not have great handwriting
- Likes science lab
- Studies with loud music on
- Likes adventure books and movies
- Likes role playing
- Takes breaks when studying
- Builds models
- Is involved in martial arts or dance
- Is fidgety during lectures

Study tips for kinesthetic learners:

- Studying in short blocks
- Taking lab classes
- Role playing
- Taking field trips and visiting museums
- Studying with others
- Using memory games
- Using flash cards to memorize

All of the above are learning strategies. Learning styles, by contrast, are various approaches or ways of learning. They involve educating methods, particular to an individual, that are presumed to allow that individual to learn best. Most people prefer an identifiable method of interacting with, taking in, and processing stimuli or information. Proponents say that teachers should assess the learning styles of their students and adapt their classroom methods to best fit each student's learning style. The alleged basis and efficacy of these proposals have been extensively criticized. Although children and adults express personal preferences, there is no evidence that identifying a student's learning style produces better outcomes, and there is significant evidence that the widespread "meshing hypothesis" (that a student will learn best if taught in a method deemed appropriate for the student's learning style) is invalid. Well-designed studies "flatly contradict the popular meshing hypothesis".

Senior High School Year 3 English Reading Comprehension: 20 Questions on Frontier Science and Technology

1 <Background article>

Artificial intelligence (AI) is rapidly transforming the field of healthcare. In recent years, AI has made significant progress in various aspects of medical care, bringing new opportunities and challenges.

One of the major applications of AI in healthcare is in disease diagnosis. AI-powered systems can analyze large amounts of medical data, such as medical images and patient records, to detect diseases at an early stage. For example, deep learning algorithms can accurately identify tumors in medical images, helping doctors make more accurate diagnoses.

Another area where AI is making a big impact is in drug discovery. By analyzing vast amounts of biological data, AI can help researchers identify potential drug targets and design new drugs more efficiently. This can significantly reduce the time and cost of drug development.

AI also has the potential to improve patient care by providing personalized treatment plans. Based on a patient's genetic information, medical history, and other factors, AI can recommend the most appropriate treatment options.

However, the application of AI in healthcare also faces some challenges. One of the main concerns is data privacy and security. Medical data is highly sensitive, and ensuring its protection is crucial. Another challenge is the lack of transparency in AI algorithms. Doctors and patients need to understand how AI makes decisions in order to trust its recommendations.

In conclusion, while AI holds great promise for improving healthcare, it also poses significant challenges that need to be addressed.

1. What is one of the major applications of AI in healthcare?
A. Disease prevention.
B. Disease diagnosis.
C. Health maintenance.
D. Medical education.

Answer: B

Visual Studio ALM Glossary

This glossary defines key terms used in the Visual Studio Application Lifecycle Management (ALM) Help.

'@me': A query-value macro that is replaced by the user name of the currently logged-on user.

'@project': A query-value macro that is replaced by the name of the currently selected Team Foundation project.

'@Today': A query-value macro that is replaced by the system date of the workstation running the application.
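The three query-value macros above are placeholders that are replaced with concrete values when a work-item query runs. The Python sketch below illustrates that substitution on a WIQL-style query string. It is a hypothetical helper, not part of Team Foundation Server: the function name `expand_query_macros`, the single-quote wrapping of substituted values, the case-insensitive matching, and the sample field names are assumptions made for illustration.

```python
import re
from datetime import date

# Hypothetical illustration of query-value macro expansion; this is NOT the
# Team Foundation Server API, which evaluates these macros itself.
# \b stops a longer token such as "@meeting" from matching "@me".
_MACRO = re.compile(r"@(me|project|today)\b", re.IGNORECASE)


def expand_query_macros(query: str, user: str, project: str, today: date) -> str:
    """Replace @Me, @Project and @Today (any letter case) with quoted literals."""
    values = {
        "me": user,                  # user name of the currently logged-on user
        "project": project,          # name of the selected Team Foundation project
        "today": today.isoformat(),  # system date of the local workstation
    }

    def substitute(match):
        # Assumption: values are wrapped in single quotes, with no escaping.
        return "'" + values[match.group(1).lower()] + "'"

    return _MACRO.sub(substitute, query)


if __name__ == "__main__":
    wiql = (
        "SELECT [System.Id] FROM WorkItems "
        "WHERE [System.AssignedTo] = @me "
        "AND [System.TeamProject] = @project "
        "AND [System.ChangedDate] = @Today"
    )
    print(expand_query_macros(wiql, "Alice", "Fabrikam", date(2024, 1, 15)))
```

Running the script prints the sample query with `@me`, `@project`, and `@Today` replaced by `'Alice'`, `'Fabrikam'`, and `'2024-01-15'`. Because the sketch does not escape quotes inside the substituted values, it is only suitable for illustrating the idea, not for building real queries from untrusted input.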

Acceptance Criteria: The criteria that a product or product component must satisfy to be accepted by a user, customer, or other authorized entity.

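Acceptance Testing: Formal testing conducted to enable a user, customer, or other authorized entity to determine whether to accept a product or product component.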

Action Recording: A file that records, for each step in a test, the user input and actions in one or more applications.

After recording, you can run specific test steps automatically by playing back the action recording.

Action Recording Section: A portion of an action recording, based on the steps that are marked as passed or failed in a test.

A new section of the action recording is created each time the result of a test step is marked.

Activity: A pattern of work performed together for a single purpose.

An activity can use or produce work products and can be tracked by work items.

Adversary: An undesirable role, or a role whose goal is to gain access to assets.

A hacker is one example of an adversary.

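Agile Methods: A family of engineering best practices whose goals are the rapid delivery of high-quality software and a business approach that aligns development with customer needs and company goals.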

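In this paradigm, the teamwork, self-discipline, and accountability on which project success depends must be frequently inspected and adjusted.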

Algorithm: A rule or procedure for solving a problem.

Alpha Version: A very early release of a product, used to obtain initial feedback on its feature set and usability.

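Analysis: In conceptual design, the classification and examination of business and user information to produce use cases and scenarios that document work processes.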

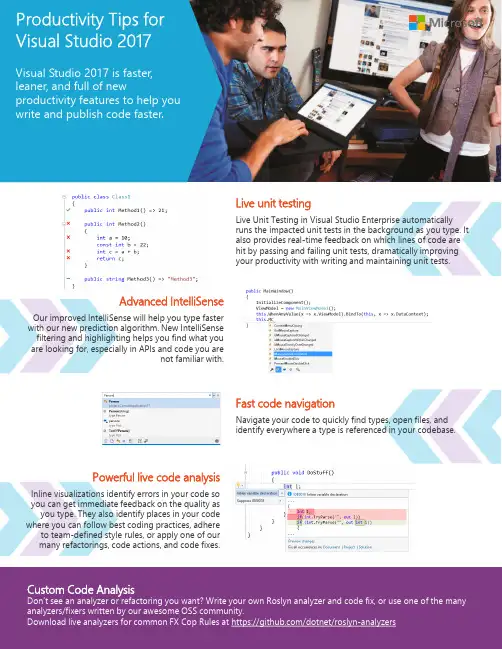

Advanced IntelliSense: Our improved IntelliSense will help you type faster with our new prediction algorithm. New IntelliSense filtering and highlighting helps you find what you are looking for, especially in APIs and code you are not familiar with.

Powerful live code analysis: Inline visualizations identify errors in your code so you can get immediate feedback on the quality as you type. They also identify places in your code where you can follow best coding practices, adhere to team-defined style rules, or apply one of our many refactorings, code actions, and code fixes.

Custom Code Analysis: Don't see an analyzer or refactoring you want? Write your own Roslyn analyzer and code fix, or use one of the many analyzers/fixers written by our awesome OSS community. Download live analyzers for common FX Cop Rules at https:///dotnet/roslyn-analyzers

Fast code navigation: Navigate your code to quickly find types, open files, and identify everywhere a type is referenced in your codebase.

Live unit testing: Live Unit Testing in Visual Studio Enterprise automatically runs the impacted unit tests in the background as you type. It also provides real-time feedback on which lines of code are hit by passing and failing unit tests, dramatically improving your productivity with writing and maintaining unit tests.

Visual Studio 2017 is faster, leaner, and full of new productivity features to help you write and publish code faster.

Become a Power User. Master the shortcuts with Visual Studio 2017.

| Command | Visual Studio 2015 | Visual Studio 2017 | ReSharper |
|---|---|---|---|
| Code Assistance & Analysis | | | |
| Show available quick actions and refactorings | Ctrl + . | Alt+Enter or Ctrl + . | Alt+Enter |
| IntelliSense code completion | Ctrl + Space | Ctrl + Space | Ctrl + Space |
| Format Document | Ctrl+E, D | Ctrl+E, D | Ctrl+E, C |
| Format Selection | Ctrl+E, F | Ctrl+E, F | Ctrl+E, C |
| Parameter Info/Signature Help | Ctrl+K, P | Ctrl+K, P | Ctrl+Shift+Space |
| Move code up/down | Alt+Up/Down Arrow | Alt+Up/Down Arrow | Ctrl+Shift+Alt+Up/Down Arrow |
| Comment with line comment | Ctrl+K, C | Ctrl+K, C | Ctrl+Alt+/ |
| Uncomment line comment | Ctrl+K, U | Ctrl+K, U | Ctrl+Shift+/ |
| Search and Navigation: Finding References/Usages | | | |
| Find All References | Shift+F12 | Shift+F12 | Shift+F12 |
| Cycle through references | F8 | F8 | Ctrl+Alt+PgUp/PgDown |
| Quick Find | Ctrl+F | Ctrl+F | Ctrl+F |
| Find/Replace in Files | Ctrl+Shift+F | Ctrl+Shift+F | N/A |
| Search and Navigation: Go To | | | |
| Go To Definition | F12 | F12 | F12 |
| Peek Definition | Alt+F12 | Alt+F12 | Ctrl+Shift+Q |
| Go To Implementation | Ctrl+F12 | Ctrl+F12 | Ctrl+Alt, click |
| Go To All/Type/File/Member/Symbol | Ctrl + , | Ctrl + , or Ctrl+T | Ctrl + T |
| Open Error List | Ctrl+W, E | Ctrl+W, E | N/A |
| Go To Next Error | F8 | F8 | Shift+Alt+PgUp/PgDown |
| Refactorings | | | |
| Rename | Ctrl+R, R | Ctrl+R, R | Ctrl+R, R |
| Move Type to Matching File | N/A | Ctrl+. or Alt+Enter | Ctrl+Shift+R |
| Introduce temporary variable | Ctrl + . | Ctrl+. or Alt+Enter | Ctrl+R, V |
| Inline temporary variable | Ctrl + . | Ctrl+. or Alt+Enter | Ctrl+R, I |
| Encapsulate field | Ctrl + . | Ctrl+. or Alt+Enter or Ctrl+R, E | Ctrl+R, E |
| Change signature | Ctrl + . | Ctrl+. or Alt+Enter | Ctrl+R, S |
| Remove Parameters | Ctrl+. or Ctrl+R, V | Ctrl+. or Alt+Enter or Ctrl+R, V | Ctrl+R, S |
| Reorder Parameters | Ctrl+. or Ctrl+R, O | Ctrl+. or Alt+Enter or Ctrl+R, O | Ctrl+R, S |
| Extract Method | Ctrl+. or Ctrl+R, M | Ctrl+. or Alt+Enter or Ctrl+R, M | Ctrl+R, M |
| Extract Interface | Ctrl+. or Ctrl+R, I | Ctrl+. or Alt+Enter or Ctrl+R, I | Ctrl+Shift+R |
| Other | | | |
| Sync active file with Solution Explorer | Ctrl+[, S | Ctrl+[, S | Shift+Alt+L |
| Send code snippet to C# Interactive Window | Ctrl+E, E | Ctrl+E, E | N/A |

Want to remap your shortcuts? Try https://aka.ms/hotkeys.

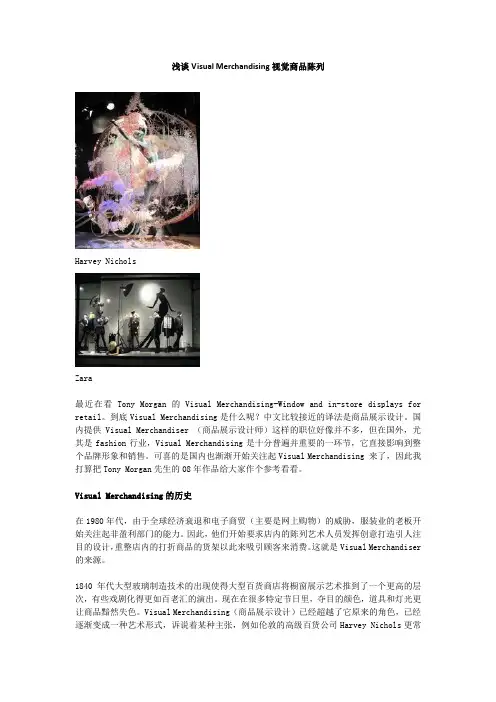

A Brief Look at Visual Merchandising

I have recently been reading Tony Morgan's Visual Merchandising: Window and In-store Displays for Retail.

So what exactly is Visual Merchandising? The closest Chinese rendering is 商品展示设计 ("merchandise display design").

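In China there do not yet seem to be many positions for a Visual Merchandiser (merchandise display designer), but abroad, especially in the fashion industry, Visual Merchandising is a widespread and important part of the business that directly affects both brand image and sales.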

Encouragingly, Visual Merchandising is gradually starting to receive attention in China as well, so I plan to introduce Mr. Tony Morgan's 2008 book here as a reference.

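The History of Visual Merchandising

In the 1980s, faced with a global recession and the threat of electronic commerce (mainly online shopping), owners of clothing businesses began to scrutinize the contribution of their non-revenue-generating departments.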

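They therefore began asking their in-store display artists to use their creativity to produce eye-catching designs and to rearrange the racks of discounted merchandise so as to draw customers in to spend.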

This is the origin of the Visual Merchandiser.

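In the 1840s, the arrival of large-scale plate-glass manufacturing allowed big department stores to take the art of window display to a new level; some displays were as theatrical as a Broadway show.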

Today, during many holidays, the dazzling colors, props, and lighting can even overshadow the merchandise itself.

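Visual Merchandising (merchandise display design) has outgrown its original role and is gradually becoming an art form that makes a statement. For example, Harvey Nichols, the upscale London department store, frequently collaborates with well-known designers and artists so that the merchandise becomes part of the artwork.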

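Because large department stores have extensive merchandise display areas and window space, department stores can be called the pioneers of window display.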

The earliest department stores resembling today's first appeared in France.

In Paris, Aristide Boucicaut was the first to propose establishing this kind of department store.

Visual merchandising is the activity of promoting the sale of goods, especially by their presentation in retail outlets (New Oxford Dictionary of English, 1999, Oxford University Press). This includes combining products, environments, and spaces into a stimulating and engaging display to encourage the sale of a product or service. It has become such an important element in retailing that a team effort involving senior management, architects, merchandising managers, buyers, the visual merchandising director, industrial designers, and staff is needed.

Facility

Visual merchandising starts with the store building itself. The management decides on a store design that reflects the products the store is going to sell and creates a warm, friendly, and approachable atmosphere for its potential customers.

Many elements can be used by visual merchandisers in creating displays, including color, lighting, space, product information, sensory inputs (such as smell, touch, and sound), and technologies such as digital displays and interactive installations.

Visual merchandising is not a science; there are no absolute rules. It is more like an art in the sense that there are implicit rules, but they may be broken for striking effects. The main principle of visual merchandising is that it is intended to increase sales, which is not the case with "real" art.

Visual merchandising is one of the final stages in setting out a store in a way that customers will find attractive and appealing, and it should follow and reflect the principles that underpin the store's image. Visual merchandising is the way one displays "goods for sale" in the most attractive manner with the end purpose of making a sale: "If it does not sell, it is not visual merchandising."

Especially in today's challenging economy, people may avoid designers and visual merchandisers because they fear unmanageable costs. In reality, visual merchandisers can help economize by avoiding costly mistakes. With the guidance of a professional, a retailer can eliminate errors, saving time and money. It is important to understand that the visual merchandiser is there not to impose ideas, but to help clients articulate their own personal style.

Visual merchandising is the art of implementing effective design ideas to increase store traffic and sales volume. VM is an art and science of displaying merchandise to enable maximum sales. VM is a tool to achieve sales and targets, a tool to enhance merchandise on the floor, and a mechanism to communicate with customers and influence their decision to buy. VM uses season-based displays to introduce new arrivals to customers, and thus increases conversions through a planned and systematic approach to displaying the stock available.

Recently, visual merchandising has gained in importance as a quick and cost-effective way to revamp retail stores.

Purpose

Retail professionals display merchandise to make the shopping experience more comfortable, convenient, and customer-friendly by:

- Making it easier for the shopper to locate the desired category and merchandise.
- Making it easier for the shopper to self-select.
- Making it possible for the shopper to coordinate and accessorize.
- Informing shoppers about the latest fashion trends by highlighting them at strategic locations.

Merchandise presentation refers to the most basic ways of presenting merchandise in an orderly, understandable, "easy to shop" and "find the product" format. This easier format is used especially by fast-fashion retailers.

VM helps in:

- Educating customers about the product or service in an effective and creative way.
- Establishing a creative medium to present merchandise in a 3D environment, thereby creating a lasting impact and recall value.
- Setting the company apart in an exclusive position.
- Establishing a link between fashion, product design, and marketing by keeping the product in prime focus.
- Combining the creative, technical, and operational aspects of a product and the business.
- Drawing the customer's attention so that a purchase decision is made in the shortest possible time, thus augmenting the selling process.

History

When giant nineteenth-century dry goods establishments like Marshall Field & Co. shifted their business from wholesale to retail, the visual display of goods became necessary to attract general consumers. Store windows were often used to display the store's merchandise attractively. Over time, the design aesthetic used in window displays moved indoors and became part of the overall interior store design, eventually reducing the use of display windows in many suburban malls.

In the twentieth century, well-known artists such as Salvador Dalí and Andy Warhol created window displays. It is also common practice for retail venues to display original art for visual merchandising purposes.

Variances

Planogram

A planogram allows planning of the arrangement of merchandise on a given fixture configuration to support sales through proper placement of merchandise by style, option, size, price point, etc. It also enables a chain of stores to display the same merchandise in a coherent and similar manner across the chain. The main purpose is to make the layout easy for the merchandiser to apply while also increasing selection and presenting the merchandise in a neat and organized manner.

Window Displays

Display windows may communicate style, content, and price point. A window might combine seasonal and festive points of the year such as back-to-school, spring, summer, Easter, Christmas, the approach of New Year, Diwali, Valentine's Day, Mother's Day, Women's Day, etc.

Food Merchandising

Restaurants, grocery stores, and convenience stores (C-stores) are using visual merchandising as a tool to differentiate themselves in a saturated market. With Whole Foods leading the way, many are recognizing the impact that good food merchandising can have on sales. A food merchandising strategy that considers the five senses will keep customers lingering in the store and help them through the buying decision. A pleasant aroma can help sell products, and visual graphics on boxes and packaging can make products "look" as good as they taste. Texture can be used to entice customers to touch, and samples are the best form of food advertising. Especially for large-quantity items, the ability to experience the product before committing to the purchase is critical. Food merchandising should educate customers, entice them to buy, and create loyalty to the store.[1]

TEST FOR ENGLISH MAJORS (1993), GRADE EIGHT

TEXT A

A scientist who does research in economic psychology and who wants to predict the way in which consumers will spend their money must study consumer behaviour. He must obtain data both on the resources of consumers and on the motives that tend to encourage or discourage money spending.

If an economist were asked which of three groups borrow most (people with rising incomes, stable incomes, or declining incomes), he would probably answer: those with declining incomes. Actually, in the years 1947-1950, the answer was: people with rising incomes. People with declining incomes were next, and people with stable incomes borrowed the least. This shows us that traditional assumptions about earning and spending are not always reliable.

Another traditional assumption is that if people who have money expect prices to go up, they will hasten to buy. If they expect prices to go down, they will postpone buying. But research surveys have shown that this is not always true. The expectation of price increases may not stimulate buying. One typical attitude was expressed by the wife of a mechanic in an interview at a time of rising prices. "In a few months," she said, "we'll have to pay more for meat and milk; we'll have less to spend on other things." Her family had been planning to buy a new car, but they postponed this purchase. Furthermore, the rise in prices that has already taken place may be resented, and buyers' resistance may be evoked. This is shown by the following typical comment: "I just don't pay these prices; they are too high."

Traditional assumptions should be investigated carefully, and factors of time and place should be considered. The investigations mentioned above were carried out in America. Investigations conducted at the same time in Great Britain, however, yielded results that were more in agreement with traditional assumptions about saving and spending patterns.
The condition most conducive to spending appears to be price stability. If prices have been stable and people have become accustomed to consider them "right" and expect them to remain stable, they are likely to buy. Thus, it appears that the common business policy of maintaining stable prices with occasional sales or discounts is based on a correct understanding of consumer psychology.

21. The best title of the passage is
A. Consumer's Purchasing Power
B. Relationship between Income and Purchasing Power
C. Traditional Assumptions
D. Studies in Consumer Behaviour

22. The example of the mechanic's wife is intended to show that in times of rising prices
A. people with declining income tend to buy less
B. people with stable income tend to borrow less
C. people with increasing income tend to buy more
D. people with money also tend to buy less

23. Findings in investigations in Britain are mentioned to show
A. factors of time and place should be taken into consideration
B. people in Britain behave in the same way as those in America
C. maintaining stable prices is based on a correct understanding of consumer psychology
D. occasional discounts and sales are necessary

24. According to the passage, people tend to buy more when
A. prices are expected to go up
B. prices are expected to go down
C. prices don't fluctuate
D. the business policy remains unchanged

Read TEXT B, an extract from a popular science book, and answer questions 25 to 28.

TEXT B

Weed Communities

In an intact plant community, undisturbed by human intervention, the composition of a community is mainly a function of the climate and the type of soil. Today, such original communities are very rare; they are practically limited to national parks and reservations.

Civilization has progressively transformed the conditions determining the composition of plant communities. For several thousand years vast areas of arable land have been hoed, ploughed, and harrowed, and grassland has been cut or grazed.
During the last decades the use of chemical substances, such as fertilizers and most recently weed killers (herbicides), has greatly influenced the composition of weed communities in farm land.

All selective herbicides have specific ranges of activity. They control the most important weeds but not all the plants of a community. The latter profit from the new free space and from the fertilizer as much as the crop does; hence they often spread rapidly and become problem weeds unless another herbicide for their eradication is found.

The soil contains enormous quantities of seeds of numerous species (up to half a million per m², according to scientific literature) that retain their ability to germinate for decades. Thus weeds that were hardly noticed before may emerge in masses after the elimination of their competitors. Hence, knowledge of the composition of weed communities before selective weed killers are applied is not only of scientific interest, since the plant species present in the soil in the form of seeds must be considered potential weeds. For efficient control, the identification of weeds at the seedling stage, i.e. at a time when they can still be controlled, is particularly necessary, for the choice of the appropriate herbicides depends on the composition of the weed community.

25. The composition of a plant community
A. depends on climate and soil type in a virgin environment undamaged by human beings
B. was greatly affected by human beings before they started using chemical substances on the soil
C. was radically transformed by uncivilized human beings
D. refers to plants, trees, climate, type of soil and the ecological environment

26. Why are there problem weeds?
A. Because they are the weeds that cannot be eradicated by herbicides.
B. Because all selective herbicides can encourage the growth of previously unimportant weeds by eliminating their competitors.
C. Because they were hardly considered before, so that their seeds were not prevented from germinating.
D. Because they benefit greatly from the fertilizer applied to the farm land.

27. A knowledge of the composition of a weed community
A. is essential to the efficient control of weeds
B. may lead us to be aware of the fact that the soil contains enormous quantities of seeds of numerous species
C. helps us to have a good idea of why seeds can lie dormant for years
D. provides us with the means to identify weeds at the seedling stage

28. The best alternative title for the passage will be
A. A Study of Weed Communities
B. The Importance of Studying How Plants Live in Communities
C. How Herbicides May Affect Farm Land
D. Weed Control by Means of Herbicides

TEXT D

Psychologists study memory and learning with both animal and human subjects. The two experiments reviewed here show how short-term memory has been studied.

Hunter studied short-term memory in rats. He used a special apparatus which had a cage for the rat and three doors. There was a light in each door. First the rat was placed in the closed cage. Next one of the lights was turned on and then off. There was food for the rat only at this door. After the light was turned off, the rat had to wait a short time before it was released from its cage. Then, if it went to the correct door, it was rewarded with the food that was there. Hunter did this experiment many times. He always turned on the lights in a random order. The rat had to wait different intervals before it was released from the cage. Hunter found that if the rat had to wait more than ten seconds, it could not remember the correct door. Hunter's results show that rats have a short-term memory of about ten seconds.

Henning studied how students who were learning English as a second language remember vocabulary. The subjects in his experiment were 75 students at the University of California in Los Angeles. They represented all levels of ability in English: beginning, intermediate, and advanced; and native-speaking students.

To begin, the subjects listened to a recording of a native speaker reading a paragraph in English. Following the recording, the subjects took a 15-question test to see which words they remembered. Each question had four choices. The subjects had to circle the word they had heard in the recording. Some of the questions had four choices that sound alike. For example, weather, whether, wither, and wetter are four words that sound alike. Some of the questions had four choices that have the same meaning. Method, way, manner, and system would be four words with the same meaning. Some of them had four unrelated choices. For instance, weather, method, love, and result could be used as four unrelated words. Finally the subjects took a language proficiency test. Henning found that students with a lower proficiency in English made more of their mistakes on words that sound alike; students with a higher proficiency made more of their mistakes on words that have the same meaning. Henning's results suggest that beginning students hold the sound of words in their short-term memory, and advanced students hold the meaning of words in their short-term memory.

32. In Hunter's experiment, the rat had to remember
A. where the food was
B. how to leave the cage
C. how big the cage was
D. which light was turned on

33. Hunter found that rats
A. can remember only where their food is
B. cannot learn to go to the correct door
C. have a short-term memory of one-sixth of a minute
D. have no short-term memory

34. Henning tested the students' memory of
A. words copied several times
B. words explained
C. words heard
D. words seen

35. Henning concluded that beginning and advanced students
A. have no difficulty holding words in their short-term memory
B. have much difficulty holding words in their short-term memory
C. differ in the way they retain words
D. hold words in their short-term memory in the same way

Read TEXT E, a book review, and answer questions 36 to 40.

TEXT E

Goal Trimmer

TITLE: THE END OF EQUALITY
AUTHOR: MICKEY KAUS
PUBLISHER: BASIC BOOKS; 293 PAGES; $25
THE BOTTOM LINE: Let the American rich get richer, says Kaus, and the poor get respect. That's a plan for the Democrats?

By RICHARD LACAYO

UTOPIAS ARE SUPPOSED TO BE dreams of the future. But the American Utopia? Lately it's a dream that was, a twilit memory of the Golden Age between V-J day and OPEC, when even a blue-collar paycheck bought a place in the middle class. The promise of paradise regained has become a key to the Democratic party pitch. Mickey Kaus, a senior editor of the New Republic, says the Democrats are wasting their time. As the U.S. enters a world where only the highly skilled and well educated will make a decent living, the gap between rich and poor is going to keep growing. No fiddling with the tax code, retreat to protectionism or job training for jobs that aren't there is going to stop it. Income equality is a hopeless cause in the U.S.

"Liberalism would be less depressing if it had a more attainable end," Kaus writes, "a goal short of money equality." Liberal Democrats should embrace an aim he calls civic equality. If government can't bring everyone into the middle class, let it expand the areas of life in which everyone, regardless of income, receives the same treatment. National health care, improved public schools, universal national service and government financing of nearly all election campaigns, which would freeze out special-interest money: these are the unobjectionable components of his enlarged public sphere.

Kaus is right to fear the hardening of class lines, but wrong to think the stresses can be relieved without a continuing effort to boost income for the bottom half. "No, we can't tell them they'll be rich," he admits. "Or even comfortably well-off. But we can offer them at least a material minimum and a good shot at climbing up the ladder. And we can offer them respect." And what might they offer back? The Bronx had a rude cheer for it. A good chunk of the Democratic core constituency would probably peel off.

At the center of Kaus' book is a thoughtful but no less risky proposal to dynamite welfare. He rightly understands how fear and loathing of the chronically unemployed underclass have encouraged middle-income Americans to flee from everyone below them on the class scale. The only way to eliminate welfare dependency, Kaus maintains, is by cutting off checks for all able-bodied recipients, including single mothers with children. He would have government provide them instead with jobs that pay slightly less than the minimum wage, earned-income tax credits to nudge them over the poverty line, drug counselling, job training and, if necessary, day care for their children.

Kaus doesn't sell this as social policy on the cheap. He expects it would cost up to $59 billion a year more than the $23 billion already spent annually on welfare in the U.S. And he knows it would be politically perilous, because he suggests paying for the plan by raiding Social Security funds and trimming benefits for upper-income retirees. Yet he considers it money well spent if it would undo the knot of chronic poverty and help foster class rapprochement. And it would be too. But one advantage of being an author is that you only ask people to listen to you, not to vote for you.

36. According to Mickey Kaus, which of the following is NOT true?
A. Methods like evading income tax or providing more chances for job training might help reduce the existing inequality.
B. The Democratic Party is spreading propaganda that they could regain the lost paradise.
C. Americans once had a period of time when they could obtain middle-class status easily.
D. Income inequality results from the fact that society needs more and more workers who have a high skill and a good education.

37. In Kaus' opinion
A. the government should strive to realize equality in everybody's income
B. the government should do its best to bring every American into the middle class
C. the goal will be easier to attain if we change it from money equality to civic equality
D. it's almost impossible for the government to provide such things as national health care, improved public schools, universal national service, etc.

38. Kaus has realized that
A. real equality cannot be achieved if the poor cannot increase their income
B. his idea will probably meet with disapproval from the supporters of the Democratic Party
C. only the Bronx might cheer for his theory
D. the division of social strata has become increasingly conspicuous

39. The proposal as offered by Kaus
A. will increase the fear and loathing of the unemployed underclass by cutting off checks for all able-bodied recipients
B. will drastically increase the income taxes for taxpayers
C. is supposed to help establish reconciliation between the poor and the rich though the gap may be unbridgeable
D. is too costly to be carried out

40. The title of the review suggests
A. giving the poor more financial aid and more job opportunities
B. a fundamental change in the goal which the Democratic Party uses to appeal to Americans
C. the elimination of the unfair distribution of social wealth among Americans
D. a modification of the objective to make it more securable

TEST FOR ENGLISH MAJORS (1994), GRADE EIGHT

TEXT A

Panic and Its Effects

One afternoon while she was preparing dinner in her kitchen, Anne Peters, a 32-year-old American housewife, suddenly had severe pains in her chest accompanied by shortness of breath. Terrified by the thought that she was having a heart attack, Anne screamed for help.
Her frightened husband immediately rushed Anne to a nearby hospital where, to her great relief, her pains were diagnosed as having been caused by panic, and not a heart attack.

More and more Americans nowadays are having panic attacks like the one experienced by Anne Peters. Benjamin Crocker, a psychiatrist and assistant director of the Anxiety Disorders Clinic at the University of Southern California, reveals that as many as ten million adult Americans have experienced or will experience at least one panic attack in their lifetime. Moreover, studies conducted by the National Institute of Mental Health in the United States disclose that approximately 1.2 million adults are currently suffering from severe and recurrent panic attacks.

These attacks are spontaneous and inexplicable and may last for a few minutes; some, however, continue for several hours, not only frightening the victim but also leaving him or her wholly disoriented. The symptoms of panic attack bear such a remarkable similarity to those of heart attack that many victims are convinced that they are indeed having a heart attack.

Panic attack victims show the following symptoms: they often become easily frightened or feel uneasy in situations where people normally would not be afraid; they suffer shortness of breath, dizziness or lightheadedness; they experience chest pains, a quick heartbeat, tingling in the hands, a choking feeling, faintness, sudden fits of trembling, and a feeling that persons and things around them are not real; and, most of all, a fear of dying or going crazy. A person seized by a panic attack may show all or as few as four of these symptoms.

There has been a lot of conjecture as to the cause of panic attack. Laymen and experts alike claim that psychological stress could be a logical cause, but as yet, no evidence has been found to support this theory.
However, studies show that more women than men experience panic attack, and people who drink a lot, as well as those who take marijuana or beverages containing a lot of caffeine, are more prone to attacks.

Dr. Wayne Keaton, an associate professor of psychiatry at the University of Washington Medical School, claims that there are at least three signs that indicate a person is suffering from panic attack rather than a heart attack. The first is age. People between the ages of 20 and 30 are more often victims of panic attack. The second is sex. More women suffer from recurrent panic attacks than men, while heart attack rarely strikes women before their menopause. The third is the multiplicity of symptoms. A panic attack victim usually suffers at least four of the previously mentioned symptoms, while a heart attack victim often experiences only pain and shortness of breath.

It is generally concluded that panic attack does not endanger a person's life. All the same, it can unnecessarily disrupt a person's life by making him or her so afraid of having a panic attack in a public place that he or she may refuse to leave home and may eventually become isolated from the rest of society. Dr. Crocker's advice to any person who thinks he is suffering from panic attack is to consult a doctor for a medical check-up to rule out the possibility of physical illness first. Once it has been confirmed that he or she is, in fact, suffering from panic attack, the victim should seek psychological and medical help.

16. According to the passage, panic attack is
[A] both frightening and fatal.
[B] actually a form of heart attack.
[C] more common among women than men.
[D] likely to last several hours.

17. One factor both panic and heart attacks have in common is
[A] a feeling of faintness.
[B] uncontrollable movements.
[C] a horror of going mad.
[D] difficulty in breathing.

18. It is indicated in the last paragraph that panic attack may
[A] make a victim reluctant to leave home any more.
[B] threaten a victim's physical well-being.
[C] cause serious social problems for the victim's family.
[D] prevent a victim from enjoying sport anymore.

19. Dr. Crocker suggests that for panic attack sufferers
[A] physical fitness is not so crucial.
[B] a medical checkup is needed to confirm the illness.
[C] psychological and medical help is necessary.
[D] nutritional advice is essential to cure the disease.

TEXT B

How the Smallpox War Was Won

The world's last known case of smallpox was reported in Somalia, in the Horn of Africa, in October 1977. The victim was a young cook called Ali Maow Maalin. His case became a landmark in medical history, for smallpox is the first communicable disease ever to be eradicated.

The campaign to free the world of smallpox has been led by the World Health Organization. The Horn of Africa, embracing the Ogaden region of Ethiopia and Somalia, was one of the last few smallpox-ridden areas of the world when the WHO-sponsored Smallpox Eradication Program (SEP) got underway there in 1971.

Many of the 25 million inhabitants, mostly farmers and nomads living in a wilderness of desert, bush and mountains, already had smallpox. The problem of tracing the disease in such formidable country was exacerbated by continuous warfare in the area.

The program concentrated on an imaginative policy of "search and containment". Vaccination was used to reduce the widespread incidence of the disease, but the success of the campaign depended on the work of volunteers. There were men, paid by the day, who walked hundreds of miles in search of "rumors": information about possible smallpox cases.

Often these rumors turned out to be cases of measles, chickenpox or syphilis, but nothing could be left to chance. As the program progressed, the disease was gradually brought under control. By September 1976 the SEP made its first announcement that no new cases had been reported. But that first optimism was short-lived.
A three-year-old girl called Amina Salat, from a dusty village in the Ogaden in the south-east of Ethiopia, had given smallpox to a young nomad visitor. Leaving the village, the nomad had walked across the border into Somalia. There he infected 3,000 people, and among them had been the cook, Ali. It was a further 14 months before the elusive "target zero" (no further cases) was reached. Even now, the search continues in "high risk" areas and in parts of the country unchecked for some time. The flow of rumors has now diminished to a trickle, but each must still be checked by a qualified person.

Victory is in sight, but two years must pass after the "last case" before an international commission can declare that the world is entirely free from smallpox.

20. Ali Maow Maalin's case is significant because he was the
[A] last person to be cured of smallpox in Somalia.
[B] last known sufferer of smallpox in the world.
[C] first smallpox victim in the Horn of Africa.
[D] first Somalian to be vaccinated for smallpox.

21. The work to stamp out smallpox was made more difficult by
[A] people's unwillingness to report cases.
[B] the lack of vaccine.
[C] the backwardness of the region.
[D] the incessant local wars.

22. The volunteers mentioned were paid to
[A] find out about the reported cases of smallpox.
[B] vaccinate people in remote areas.
[C] teach people how to treat smallpox.
[D] prevent infected people from moving around.

23. Nowadays, smallpox investigations are only carried out
[A] at regular two-yearly intervals.
[B] when news of an outbreak occurs.
[C] in those areas with previous history of the disease.
[D] by a trained professional.

TEXT C

The Form Master's observations about punishment were by no means without their warrant at St. James's school. Flogging with the birch in accordance with the Eton fashion was a great feature in its curriculum.
But I am sure no Eton boy, and certainly no Harrow boy of my day, ever received such a cruel flogging as this headmaster was accustomed to inflict upon the little boys who were in his care and power. They exceeded in severity anything that would be tolerated in any of the Reformatories under the Home Office. My reading in later life has supplied me with some possible explanations of his temperament. Two or three times a month the whole school was marshalled in the Library, and one or more delinquents were hauled off to an adjoining apartment by the two head boys, and there flogged until they bled freely, while the rest sat quaking, listening to their screams...

How I hated this school, and what a life of anxiety I lived there for more than two years. I made very little progress at my lessons, and none at all at games. I counted the days and the hours to the end of every term, when I should return home from this hateful servitude and range my soldiers in line of battle on the nursery floor. The greatest pleasure I had in those days was reading. When I was nine and a half my father gave me Treasure Island, and I remember the delight with which I devoured it. My teachers saw me at once backward and precocious, reading books beyond my years and yet at the bottom of the form. They were offended. They had large resources of compulsion at their disposal, but I was stubborn. Where my reason, imagination or interest were not engaged, I would not or I could not learn. In all the twelve years I was at school no one ever succeeded in making me write a Latin verse or learn any Greek except the alphabet. I do not at all excuse myself for this foolish neglect of opportunities procured at so much expense by my parents and brought so forcibly to my attention by my preceptors. Perhaps if I had been introduced to the ancients through their history and customs, instead of through their grammar and syntax, I might have had a better record.

24. Which of the following statements about flogging at St. James's School is NOT correct?
[A] Corporal punishment was accepted in the school.
[B] Flogging was part of the routine in the school.
[C] Flogging was more severe in schools for juvenile delinquents.
[D] The Headmaster's motive for flogging was then rather obscure.

25. When he was back at home, the author enjoyed
[A] playing war games.
[B] dressing up like a soldier.
[C] reading war stories.
[D] talking to soldiers.

26. "They had large resources of compulsion at their disposal." means that the teachers
[A] had tried to suspend him from school several times.
[B] had physically punished him quite a lot.
[C] had imposed upon him many of their ideas.
[D] had tried to force him to learn in many different ways.

27. The author failed to learn Greek because
[A] he lacked sufficient intelligence.
[B] he could not master the writing system.
[C] of his parents' attitude to the subject.
[D] the wrong teaching approach was used.

TEXT D

I HAVE A DREAM: 30 Years Ago and Now

Few issues are as clear as the one that drew a quarter-million Americans to the Lincoln Memorial 30 years ago this August 28. "America has given the Negro people a bad check," the nation was told. It has promised equality but delivered second-class citizenship because of race. Few orators could define the justice as eloquently as Martin Luther King Jr., whose words on that sweltering day remain etched in the public consciousness: "I have a dream that my four little children will one day live in a nation where they will not be judged by the color of their skin but by the content of their character."

The march on Washington had been the dream of a black labor leader, A. Philip Randolph, who was a potent figure in the civil-rights movement. But it was King who emerged as the symbol of the black people's struggle. His "I have a dream" speech struck such an emotional chord that recordings of it were made, sold, bootlegged and resold within weeks of its delivery.
The magic of the moment was that it gave white America a new perspective on black America and pushed civil rights forward on the nation's agenda.

When the march was planned by a coalition of civil-rights, union and church leaders, nothing quite like it had ever been seen. Tens of thousands of blacks streamed into the nation's capital by car, bus, train and foot, an invading army of the disenfranchised singing freedom songs and demanding rights. By their very numbers, they forced the world's greatest democracy to face an embarrassing question: How could America continue on a course that denied so many the simple amenities of a water fountain or a lunch counter? Or the most essential element of democracy, the vote?

Three decades later, we still wrestle with questions of black and white, but now they are confused by shades of gray. The gap persists between the quality of black life and white. The urban underclass has grown more entrenched. Bias remains. And the nation is jarred from time to time by sensational cases stemming from racial hate. But the clarity of the 1963 issue is gone. No longer do governors stand in schoolhouse doors. Nor do signs bar blacks from restaurants or theaters. It is illegal to deny African-Americans the vote. There are 7,500 black elected officials, including 338 mayors and 40 members of Congress, plus a large black middle class. And we are past the point when white America must look to one eloquent leader to answer the question "What does the Negro want?"

The change is reflected in the variety of causes on the wish list of this year's anniversary march.

Product name: Golgi/ER Staining Kit - Cytopainter
Sample type: Adherent cells, Suspension cells
Assay type: Cell-based
Species reactivity: Reacts with mammals and other species

Product overview

Golgi/ER Staining Kit | Cytopainter (ab139485) provides a rapid and convenient method for visualizing the Golgi and ER within living cells, without requiring lengthy transfection procedures.

ab139485 is validated with the human cervical carcinoma cell line HeLa, the human T-lymphocyte cell line Jurkat, the canine kidney cell line MDCK, and the human bone osteosarcoma epithelial cell line U2OS.

The kit should also be suitable for identifying agents that perturb the Golgi apparatus (GA) and endoplasmic reticulum (ER). It can therefore be a useful tool for examining the transport and recycling of molecules from the GA to the ER in cellular secretory pathways.

Organelle Reagent III contains a mixture of GA-selective, ER-selective and nucleus-selective dyes suitable for live-cell staining. Compared with other commercially available dyes for labeling Golgi bodies, the green dye component of Reagent III is more faithfully localized to the Golgi apparatus, with minimal staining of the endoplasmic reticulum. The red dye component of Reagent III stains the endoplasmic reticulum with high fidelity and is specifically designed for use with green-fluorescing probes.

Platform: Fluorescence microscope
Storage instructions: Please refer to protocols.

Components (100 tests):
10X Assay Buffer 1: 1 x 15 ml
50X Assay Buffer 2: 1 x 1.2 ml
Organelle Reagent III (lyophilized): 1 vial

Fluorescent staining using CytoPainter Golgi/ER Staining Kit (ab139485). This image is courtesy of an Abreview submitted by Edwin Hernandez. CytoPainter Golgi/ER Staining Kit (ab139485) was used to stain live rat astrocytes. Cells were seeded on a flat, poly-L-lysine-coated Ibidi µ-Slide 18 Well.
Cells were washed with 1X Assay Solution and incubated for 30 min at 37 °C with Organelle Reagent III. They were then washed 3 times with ice-cold medium (DMEM/F-12) and incubated again in medium for 30 min at 37 °C. ER (red), Golgi (green), nuclear (blue).

Figures: absorbance and fluorescence emission spectra for the blue dye; fluorescence excitation and emission spectra for the red and green dyes.

Fluorescent staining of MDCK epithelial cells using Organelle Reagent III from the ab139485 kit. The ER (red), Golgi (green) and nuclear (blue) dyes highlight their respective subcellular targets with high dependability.

Please note: All products are "FOR RESEARCH USE ONLY. NOT FOR USE IN DIAGNOSTIC PROCEDURES".

Our Abpromise to you: Quality guaranteed and expert technical support
Replacement or refund for products not performing as stated on the datasheet
Valid for 12 months from date of delivery
Response to your inquiry within 24 hours
We provide support in Chinese, English, French, German, Japanese and Spanish
Extensive multi-media technical resources to help you
We investigate all quality concerns to ensure our products perform to the highest standards

If the product does not perform as described on this datasheet, we will offer a refund or replacement. For full details of the Abpromise, please visit https:///abpromise or contact our technical team.

Terms and conditions
Guarantee only valid for products bought direct from Abcam or one of our authorized distributors.