Probability_1

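Excel functions for generating random numbers

Excel provides several functions for generating random numbers, including random real numbers, random integers, and random decimals with a fixed number of places.

The following are commonly used:

1. RAND(): returns a random number greater than or equal to 0 and less than 1. A new value is generated every time the worksheet recalculates.

2. RANDBETWEEN(min,max): returns a random integer in the given range, endpoints included. For example, =RANDBETWEEN(1,100) returns a random integer from 1 to 100.

3. ROUND(RAND()*range,decimals): a formula rather than a single function. It returns a random decimal in the given range. For example, =ROUND(RAND()*10,2) returns a random number between 0 and 10 rounded to 2 decimal places.

4. NORMINV(probability,mean,standard_dev): returns the inverse of the normal cumulative distribution, where probability is a probability, mean is the mean, and standard_dev is the standard deviation. On its own it is not random; passing RAND() as the probability, as in =NORMINV(RAND(),mean,standard_dev), yields random numbers that follow the specified normal distribution.

By combining these functions and adjusting their parameters, many different kinds of random numbers can be generated.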
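For readers who want to reproduce the four formulas outside a spreadsheet, here is a minimal Python sketch using only the standard library. The helper names (`rand`, `randbetween`, `rand_decimal`, `rand_normal`) are made up for illustration and are not Excel or Python APIs; the fixed seed is only there to make the run repeatable.

```python
import random
from statistics import NormalDist

rng = random.Random(42)  # fixed seed so the sketch is reproducible


def rand():
    """Counterpart of RAND(): a float in [0, 1)."""
    return rng.random()


def randbetween(low, high):
    """Counterpart of RANDBETWEEN(low, high): an integer, both endpoints included."""
    return rng.randint(low, high)


def rand_decimal(span, decimals):
    """Counterpart of ROUND(RAND()*span, decimals)."""
    return round(rng.random() * span, decimals)


def rand_normal(mean, standard_dev):
    """Counterpart of NORMINV(RAND(), mean, standard_dev): inverse-CDF sampling."""
    p = rng.random()
    while p == 0.0:  # inv_cdf requires 0 < p < 1
        p = rng.random()
    return NormalDist(mean, standard_dev).inv_cdf(p)


print(rand(), randbetween(1, 100), rand_decimal(10, 2), rand_normal(0, 1))
```

The last helper shows why NORMINV needs a random probability as its first argument: the inverse cumulative distribution maps a uniform draw to a normal draw.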


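A popular introduction to stochastic calculus

I wrote up this informal introduction to stochastic calculus. If you think it is well written, readers benefit; if you think it is badly written, please point out the problems and I benefit. Either way it is a good thing.

1. What is stochastic calculus for?

In a word, it builds a theory for random variables that parallels ordinary calculus, so that we can do calculus on random variables much as we do on ordinary variables.

Once we know this, we can often carry the way of thinking of ordinary calculus over to stochastic calculus.

For example, if at first we do not understand what role some concept plays, we can think about the role played by the corresponding concept in ordinary calculus.

2. How is the theoretical framework of stochastic calculus built?

In a word, by copying a model.

The model here is ordinary calculus.

In ordinary calculus the most basic theoretical foundations are the concepts of convergence and limit; all other concepts are built on these two.

For stochastic calculus, once the modern theory of probability (based on real analysis and measure theory) is in place, we proceed as in the development of ordinary calculus and first establish the concepts of convergence and limit.

Unlike in ordinary analysis, we are now dealing with random variables, which are much more complicated than ordinary variables, so the resulting notions of convergence and limit are correspondingly more complicated.

In fact, stochastic calculus has more than one kind of convergence, and therefore more than one kind of limit.

The most frequently used notions are convergence with probability 1 (almost sure convergence) and mean-square convergence.

The other thing that has to be built anew is the variable of integration.

In ordinary calculus the variable of integration is simply an ordinary real variable, namely the independent variable of the integrand, and there is essentially nothing to work out.

In stochastic calculus the variable of integration is Brownian motion, and defining and constructing Brownian motion rigorously takes some effort.

This construction is a basic step in building stochastic calculus.

Once convergence, limit, and the variable of integration are all defined, we can copy the model and define the subsequent concepts in the same way as in ordinary calculus.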
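To make "integrating against Brownian motion" concrete, here is a small Python sketch. It goes beyond the text above in one respect, which should be read as an assumption of the example: it uses the standard Itô result that the integral of W dW over [0, T] equals (W_T^2 - T)/2. The function name `ito_sum_w_dw` is illustrative only. The sketch approximates the integral by a sum that evaluates the integrand at the left endpoint of each step, the discrete analogue of the limit described above.

```python
import math
import random


def ito_sum_w_dw(T=1.0, n=10_000, seed=0):
    """Left-endpoint sum of W dW along one simulated Brownian path on [0, T]."""
    rng = random.Random(seed)
    dt = T / n
    w = 0.0      # current value of the Brownian path
    total = 0.0  # running value of the approximating sum
    for _ in range(n):
        dw = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment over one step
        total += w * dw                     # integrand taken at the left endpoint
        w += dw
    return total, w


approx, w_T = ito_sum_w_dw()
closed_form = (w_T ** 2 - 1.0) / 2  # (W_T^2 - T) / 2 with T = 1
print(approx, closed_form)
```

The two printed numbers agree closely, and the gap shrinks as `n` grows. The extra term -T/2, which has no counterpart in ordinary calculus, is what the careful definitions of convergence are needed for.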

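Lecture material 3: Section 2. Distribution function, expectation and variance

This section introduces the basics of probability and its distribution function, mathematical expectation, and variance.

I. Probability

1. Definition of probability

In nature and in human society there are two different kinds of phenomena. One kind is deterministic: under given conditions the phenomenon necessarily occurs. The other kind is random: even when the basic conditions are unchanged, the observed or experimental results differ.

In other words, in an individual trial or observation sometimes one result appears and sometimes another, so the outcome looks accidental. Such a phenomenon is called a random phenomenon.

A random phenomenon has an accidental side and also a necessary side. The necessity shows up as the stability of the frequency of a random event over a large number of trials: the frequency of a random event usually fluctuates around some fixed constant. We call this regularity statistical regularity.

The stability of frequency shows that how likely a random event is to occur is an objective property of the event itself, fixed and independent of human will, and so it can be measured.

For a random event $A$, a number $P(A)$ is used to express how likely the event is to occur. This number $P(A)$ is called the probability of the random event $A$; probability thus measures the likelihood of a random event.

For a random phenomenon, knowing only which results are possible is of little value, whereas stating how likely each result is has great significance.

With the concept of probability we can study random phenomena quantitatively, and this gave rise to a new branch of mathematics: probability theory.

Definition of probability. A set function $P$ defined on the event field $\mathcal{F}$ is called a probability if it satisfies the following three conditions:

(i) $P(A) \ge 0$ for all $A \in \mathcal{F}$;

(ii) $P(\Omega) = 1$;

(iii) if $A_i \in \mathcal{F}$, $i = 1, 2, \ldots$, and the $A_i$ are pairwise mutually exclusive, then $P\left(\sum_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} P(A_i)$.

Property (iii) is called countable additivity, or complete additivity.

Corollary 1: for any event $A$, $P(\bar{A}) = 1 - P(A)$.

Corollary 2: the impossible event has probability 0, that is, $P(\varnothing) = 0$.

Corollary 3: $P(A \cup B) = P(A) + P(B) - P(AB)$.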

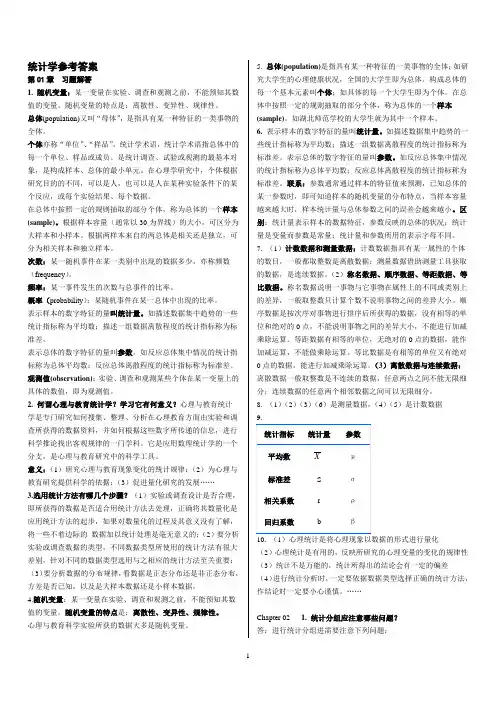

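Statistics reference answers: solutions to the Chapter 01 exercises

1. Random variable: a variable whose value cannot be known in advance, before the experiment, survey, or observation.

The characteristics of random variables are dispersion, variability, and regularity.

A population is the whole collection of things of a kind that share some characteristic.

An individual, also called a "unit" or "specimen", is a statistical term for each unit, specimen, or member of a population.

It is the most basic object of a statistical survey, experiment, or observation, and the smallest unit making up a sample or a population.

In psychological research, depending on the purpose of the study, an individual may be a person, a person's particular response under some experimental condition, or each experimental result or data point.

A part of the individuals drawn from a population according to some rule is called a sample of the population.

According to sample size (usually with 30 as the dividing line), samples are classified as large samples or small samples.

According to whether the two populations from which two samples come are related or independent, samples are classified as related samples or independent samples.

Count: the number of data points of a random event that fall in a given category, also called frequency.

Relative frequency: the ratio of the number of times an event occurs to the total number of events.

Probability: the proportion with which a random event occurs in a population.

A quantity that describes a numerical characteristic of a sample is called a statistic.

For example, statistical indices describing the central tendency of data are called averages, and the index describing the dispersion of a set of data is called the standard deviation.

A quantity that describes a numerical characteristic of a population is called a parameter.

For example, the index reflecting the central tendency of a population is called the population mean, and the index reflecting the dispersion of a population is called the population standard deviation.

Observation: the specific value of some variable obtained for an individual in an experiment, survey, or observation.

2. What is psychological and educational statistics? Why study it?

Psychological and educational statistics is the discipline that studies how to collect, organize, and analyze data obtained from experiments and surveys in psychology and education, and how to draw scientific inferences from the information those numbers carry in order to find objective regularities.

It is a branch of applied mathematical statistics and a scientific tool for psychological and educational research.

Significance: (1) it studies the statistical regularities in how psychological and educational phenomena change; (2) it provides a scientific basis for psychological and educational research; (3) it promotes the development of quantitative research; and so on.

3. What are the steps in choosing a statistical method?

(1) Check whether the experimental or survey design is sound, that is, whether the data obtained are suitable for statistical treatment. Quantifying correctly is the first step in applying statistics; if the quantification process and its meaning are not understood, applying statistics to irrelevant data is meaningless.

(2) Analyze the type of the experimental or survey data. The statistical methods used differ greatly between data types, so choosing a method that matches the data type is essential.

(3) Analyze the distribution of the data: whether it is normal or non-normal, whether the variance is known, and whether it is a large or a small sample.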

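Econometrics Experiment 1: Basic operation of EViews

1.1 Purpose

Understand the basic objects that EViews operates on and master the basic operations of the software.

1.2 Content

Using the aggregate GDP and consumption data for China listed in Table 1.1 (1990 to 2000, in units of 100 million yuan) as an example, carry out the following in EViews:

(1) Starting EViews

(2) Entering and editing data, and generating series

(3) Graphical analysis and descriptive statistics

(4) Saving, loading, and converting data files

Table 1.1 data source: China Statistical Yearbook 2004, China Statistics Press.

1.3 Steps

1.3.1 Starting EViews

Start Windows and double-click the EViews5 shortcut to open the EViews window, or click Start / Programs / EViews5 / EViews5 to open it, as shown in Figure 1.1.

Figure 1.1

Title bar: the top of the window is the title bar. At its right end are three buttons: minimize, maximize (or restore), and close. Clicking them resizes or closes the window.

Menu bar: below the title bar is the main menu bar.

The main menu bar has 9 items: File, Edit, Objects, View, Procs, Quick, Options, Window, Help.

Clicking an item opens a drop-down menu (or a further submenu, if there is one); clicking an entry makes the program carry out the corresponding operation.

Command window: below the main menu bar is the command window. The vertical bar at its far left is the prompt, after which EViews commands can be typed from the keyboard.

Main display area: below the command window is the EViews main display area. Windows produced by later operations (called child windows) all stay within this area and cannot be moved outside the main window.

Status bar: below the main window is the status bar. The left end shows messages, the middle shows the current path, and the lower right shows the current state, for example whether a workfile is open.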

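I recently came across copula functions in my studies and, after reading some material, wrote this summary in the hope that it helps others who are learning the topic.

Readers should already know the concepts of probability distribution, marginal distribution, and joint probability distribution.

Why introduce copulas? When random variables have different marginal distributions and are not independent of one another, modelling their joint distribution becomes very difficult.

In that situation, given several random variables with known marginal distributions, a copula is a very good tool for modelling the dependence between them.

What is a copula? The word "copula" comes from Latin and means "link" or "connection".

Copulas were introduced by Sklar in 1959 through Sklar's theorem. In the bivariate case: if $H(x,y)$ is a bivariate joint distribution function with continuous marginal distributions $F(x)$ and $G(y)$, then there exists a unique copula $C$ such that $H(x,y) = C(F(x), G(y))$.

Conversely, if $C$ is a copula and $F$ and $G$ are any two probability distribution functions, then the function $H$ defined by the formula above is a joint distribution function whose marginal distributions are exactly $F$ and $G$.

Sklar's theorem: any multivariate joint distribution can be written in terms of univariate marginal distribution functions and a copula which describes the dependence structure between the variables.

In Sklar's view, the joint distribution of N random variables can be decomposed into the marginal distributions of those N variables and a copula, which separates the randomness of the variables from their coupling.

The randomness of each variable is described by its marginal distribution, and the coupling between the variables is described by the copula.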
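The separation of marginals from dependence can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a method taken from the text: the Gaussian copula, the correlation 0.8, and the two marginals (an exponential and a normal) are all choices made for the example, and the function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def gaussian_copula_pairs(rho, n, seed=0):
    """Draw n pairs (u, v) of uniforms coupled by a Gaussian copula with correlation rho."""
    rng = random.Random(seed)
    phi = NormalDist().cdf  # standard normal CDF maps normals to uniforms
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        pairs.append((phi(z1), phi(z2)))
    return pairs


def to_marginals(pairs, rate=0.5, mean=10.0, sd=2.0):
    """Apply inverse marginal CDFs: x is exponential(rate), y is normal(mean, sd)."""
    normal = NormalDist(mean, sd)
    return [(-math.log(1.0 - u) / rate, normal.inv_cdf(v)) for u, v in pairs]


pairs = gaussian_copula_pairs(rho=0.8, n=2000)
xy = to_marginals(pairs)
print(xy[:3])
```

The dependence lives entirely in `gaussian_copula_pairs`; swapping the marginals in `to_marginals` changes the individual distributions without touching the coupling, which is the decomposition Sklar's theorem describes.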

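Glossary: probability

Probability is a measure of how likely a random event A is to occur when an experiment is repeated under the same conditions.

1. Basic concept.

Mathematically, probability is a measure. The probability of event A is written P(A), and the conditional probability of A given B is written P(A|B).

2. Properties of probability.

(1) A probability is a real number between 0 and 1, not a natural number: 0 for an impossible event and 1 for a certain event.

(2) A probability depends on the experiment and on its conditions; changing the conditions generally changes the probability.

(3) Probabilities obey an addition rule and a multiplication rule: for mutually exclusive events P(A or B) = P(A) + P(B), and for independent events P(A and B) = P(A) × P(B).

(4) Two different events need not have different probabilities. Heads and tails of a fair coin are different events with the same probability, 1/2.

3. In a sample survey, the probability that each unit of the population is selected must be known before probability methods can be used for the analysis.

Probability is also used in the analysis of many other problems, where we speak of the probability of an event.

An older Chinese term for probability is "或然率" (literally "probable rate"); it means the same thing as probability.

The notation P(E|M) denotes a conditional probability: the probability of event E given that M has occurred.

The smaller the probability, the less likely the event is to occur.

For example, when you toss a fair coin the probability of heads is 1/2. The tosses are independent, so even after six heads in a row the probability of heads on the next toss is still 1/2, and the probability of tails is also 1/2.

The probability that all six tosses come up heads is (1/2)^6 = 1/64, which is the probability of that compound event.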


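SPC calculation formulas

Project name: SPC calculation formulas
Project number: SPC-002
Document number:
Version: 1.0
Prepared by: R&D Department

Document control

Contents

SPC calculation formulas
Document control
I. Variables data: Mean; Max; Min; Range (maximum span); MR (moving range); StdDev (standard deviation); Sigma; UCL, CL, LCL (upper control limit, center line, lower control limit, variables data); Cp (process capability index); Cmk (machine capability index); Cr (process capability ratio); Cpl (lower process capability index); Cpu (upper process capability index); Cpk (corrected process capability index); k (offset coefficient); Pp (process performance index); Pr (process performance ratio); Ppu (upper process performance index); Ppl (lower process performance index); Ppk (corrected process performance index); Cpm (target capability index); Ppm (target process performance index); Zu(Cap) (sigma level at the upper specification limit); Zl(Cap) (sigma level at the lower specification limit); Zu(Perf); Zl(Perf); Fpu(Cap) (probability of exceeding the upper limit); Fpl(Cap) (probability of exceeding the lower limit); Fp(Cap) (probability of exceeding the limits); Fpu(Perf); Fpl(Perf); Fp(Perf); Skewness; Kurtosis
II. Attributes data: Mean; Max; Min; Range; StdDev; UCL, CL, LCL (defective-count and defect-count charts)
III. DPMO
IV. Correlation analysis
V. Normal distribution function Normsdist(z)
VI. Overall capability index analysis

I. Variables data

Input parameters:
x: the sample values used in the calculation
ChartType: chart number; 1 mean-range, 2 mean-standard deviation, 3 individual-moving range, 8 histogram
USL: upper specification limit
LSL: lower specification limit
Target: target value, abbreviated T in the formulas
Mr_Range: moving span
$\hat{\sigma}$: estimated sigma

Computed:
n: total number of samples
$\bar{x}$: mean of all samples

Note:
1. Define the constant NOTVALID = -99999; if a statistic cannot be computed, return this constant.

Mean

$Mean = \frac{\sum_{i=1}^{n} x_i}{n}$

The grand mean of all subgroup means (the field is named "value").

Max (maximum)

$Max = X_{max}$, the largest subgroup mean.

Min (minimum)

$Min = X_{min}$, the smallest subgroup mean.

Range (maximum span)

$Range = X_{max} - X_{min}$

MR (moving range)

$MR = |X_{i+n} - X_i|$, the absolute difference between this subgroup's value and the previous subgroup's value.

StdDev (standard deviation)

$StdDev = \sqrt{\frac{\sum_{i=1}^{n} (x_i - Mean)^2}{n - 1}}$

Example: $X_1 = 2$, $X_2 = 4$, $X_3 = 6$, $X_4 = 4$. The mean is 4, the sum of squared deviations is $(2-4)^2 + (4-4)^2 + (6-4)^2 + (4-4)^2 = 8$, and $StdDev = \sqrt{8/3} \approx 1.633$.

Sigma

1. Range estimate of $\hat{\sigma}$: $\hat{\sigma} = \bar{R} / d_2$

2. Standard-deviation estimate of $\hat{\sigma}$: $\hat{\sigma} = \bar{S} / C_4$. When the subgroup size is 25 or less, $C_4$ can be looked up in a table; when it is larger than 25, use $C_4 = \frac{4(n-1)}{4n-3}$.

3. Calculated $\sigma$: $\sigma = \sqrt{\frac{\sum_{i=1}^{m} (x_i - \bar{x})^2}{m - 1}}$, where there are $k$ subgroups, each of size $n$, and $m = k \cdot n$.

4. Within-group variation $\hat{\sigma}$: $\hat{\sigma} = \sqrt{\frac{\sum_{i=1}^{k} \sum_{j=1}^{n_i} (x_{ij} - \bar{x}_i)^2}{\sum_{i=1}^{k} (n_i - 1)}}$, where there are $k$ subgroups, each of size $n$.

UCL, CL, LCL: upper control limit, center line, lower control limit (variables data)

1. Mean-range control chart ($\bar{x}$-$R$)

Mean chart: $UCL = \bar{\bar{X}} + A_2 \bar{R}$, $LCL = \bar{\bar{X}} - A_2 \bar{R}$, $CL = \bar{\bar{X}}$
Range chart: $UCL = D_4 \bar{R}$, $LCL = Max(0, D_3 \bar{R})$, $CL = \bar{R}$

where $A_2 = \frac{3}{d_2 \sqrt{n}}$, $D_4 = 1 + \frac{3 d_3}{d_2}$, $D_3 = 1 - \frac{3 d_3}{d_2}$; 3 is the number of standard deviations used for the control limits.

2. Mean-standard deviation control chart ($\bar{x}$-$S$)

Mean chart: $UCL = \bar{\bar{X}} + A_3 \bar{S}$, $LCL = \bar{\bar{X}} - A_3 \bar{S}$, $CL = \bar{\bar{X}}$
Standard deviation chart: $UCL = B_4 \bar{S}$, $LCL = Max(0, B_3 \bar{S})$, $CL = \bar{S}$

where $A_3 = \frac{3}{C_4(n) \sqrt{n}}$, $B_4 = 1 + \frac{3 \sqrt{1 - C_4^2(n)}}{C_4(n)}$, $B_3 = 1 - \frac{3 \sqrt{1 - C_4^2(n)}}{C_4(n)}$; 3 is the number of standard deviations used for the control limits.

3. Individual-moving range control chart ($X$-$R_s$)

Individuals chart: $UCL = \bar{X} + E_2 \bar{R}_s$, $LCL = \bar{X} - E_2 \bar{R}_s$, $CL = \bar{X}$
Moving range chart: $UCL = D_4 \bar{R}_s$, $LCL = Max(0, D_3 \bar{R}_s)$, $CL = \bar{R}_s$

where $E_2 = \frac{3}{d_2}$, $D_4 = 1 + \frac{3 d_3}{d_2}$, $D_3 = 1 - \frac{3 d_3}{d_2}$; 3 is the number of standard deviations used for the control limits.

Cp (process capability index)

(Short-term) process capability, that is, the capability of a process step (Process Capability, PC), refers to the capability of the process with respect to the quality of what it produces.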
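As a worked illustration of the mean-range chart formulas above, here is a minimal Python sketch. The function and table names are hypothetical, and the d2 and d3 values for subgroup sizes 2 to 5 are the commonly tabulated constants, supplied here as an assumption because the document itself does not list them.

```python
import math

# Commonly tabulated constants for subgroup sizes 2..5 (assumed, not from the document).
D2_TABLE = {2: 1.128, 3: 1.693, 4: 2.059, 5: 2.326}
D3_TABLE = {2: 0.853, 3: 0.888, 4: 0.880, 5: 0.864}


def c4_approx(n):
    """The document's approximation C4 = 4(n-1)/(4n-3), intended for large subgroups."""
    return 4 * (n - 1) / (4 * n - 3)


def xbar_r_limits(subgroups):
    """Control limits for the mean chart and the range chart of equal-size subgroups."""
    n = len(subgroups[0])
    means = [sum(g) / n for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean = sum(means) / len(means)
    r_bar = sum(ranges) / len(ranges)
    d2, d3 = D2_TABLE[n], D3_TABLE[n]
    a2 = 3 / (d2 * math.sqrt(n))
    factor_d4 = 1 + 3 * d3 / d2
    factor_d3 = 1 - 3 * d3 / d2
    return {
        "mean_chart": {"UCL": grand_mean + a2 * r_bar, "CL": grand_mean,
                       "LCL": grand_mean - a2 * r_bar},
        "range_chart": {"UCL": factor_d4 * r_bar, "CL": r_bar,
                        "LCL": max(0.0, factor_d3 * r_bar)},
    }


limits = xbar_r_limits([[2, 4, 6, 4], [3, 5, 4, 4], [1, 4, 5, 6]])
print(limits)
```

For small subgroups the factor D3 is negative, which is why the range chart's lower limit is clamped at zero with Max(0, ...).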

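The formula for calculating probability

Probability is a number used to assess how likely an event is to occur. It lies between 0 and 1, where 0 means the event cannot occur and 1 means it is certain to occur. When it is estimated from repeated trials, the formula is:

Probability P(E) = number of times event E occurs / total number of trials

That is, P(E) can be estimated as the observed number of occurrences of E divided by the total number of trials; the estimate becomes more reliable as the number of trials grows.

An example: suppose a fair coin is tossed. The probability of heads is 1/2, that is, P(heads) = number of heads / total number of tosses = 1/2 in the long run. Likewise the probability of tails is P(tails) = number of tails / total number of tosses = 1/2. The two probabilities add up to 1, as the probabilities of all possible outcomes always must.

With this basis we can summarize the basic approach to calculating a probability:

1. Identify the object of the calculation: first decide which event's probability is to be calculated and how the calculation will be set up.

2. Choose the method of calculation: usually probability = number of times the event occurs / total number of trials.

3. Calculate the probability: once the object and the method are fixed, carry out the calculation.

4. Check the result: the computed probability may be wrong, so it needs to be verified.

Probability calculation is a science and a part of statistics. It is an important tool for interpreting existing data, building probability models, and carrying out decision analysis.

It plays an important role in statistics, finance, risk management, investment decisions, insurance, and other fields.

Mastering the basic approach and steps of probability calculation is therefore also important for making sound decisions in everyday life.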
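The four steps above can be sketched as a small simulation. The names `estimate_probability` and `toss` are made up for this example, and a simulated fair coin stands in for real observations.

```python
import random


def estimate_probability(event, trial, n, seed=0):
    """Relative frequency: occurrences of the event divided by the number of trials."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if event(trial(rng)))
    return hits / n


def toss(rng):
    """One toss of a simulated fair coin: 'H' or 'T'."""
    return rng.choice("HT")


# Steps 1 and 2: the event is "heads", the method is count / total.
p_heads = estimate_probability(lambda outcome: outcome == "H", toss, 100_000)
# Step 3: the complementary event uses the same count.
p_tails = 1 - p_heads
# Step 4: a basic sanity check is that the two probabilities sum to 1.
print(p_heads, p_tails, p_heads + p_tails)
```

With 100,000 tosses the estimate lands close to 1/2; with only a handful of tosses it can be far off, which is why the verification step matters.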

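The difference between prior and posterior probability (1): prior probability and posterior probability

Prior probability: a probability obtained from past experience and analysis, available before the experiment or sampling is carried out.

Posterior probability: given that something has already happened, the probability that it was caused by a particular factor.

Prior probability is the probability obtained from past experience and analysis, as used in the law of total probability; it usually appears as the "cause" in "from cause to effect" problems.

Posterior probability is the probability, computed after the "result" has been observed, of each event that may have produced it, as in Bayes' formula; it is the "cause" in "from effect back to cause" problems.

So the prior is a probability distribution that can be estimated in advance, while the posterior follows the "trace the effect back to its cause" idea of Bayes' formula.

Below we use an example from the book PRML (Pattern Recognition and Machine Learning) to understand these definitions.

In any discipline the most basic thing is the concepts. They must be clear, otherwise everything built on them is unstable.

Many concepts become blurred if they are not used for a long time, so I am writing them down here; producing output is the best way to remember.

The difference between prior and posterior lies mainly in whether sample information has been used.

Without sample information, it is a prior.

With sample information, it is a posterior.

What we believe before observing the sample is the prior; what we believe after observing the sample is the posterior.

"Prior" and "posterior" refer to the use of sample information.

Prior probability can be understood as the probability estimate made before the sample is observed.

Posterior probability can be understood as the probability estimate made after the sample is observed.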
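The text mentions an example from PRML without reproducing it. The sketch below assumes it is the book's fruit-box example from Chapter 1: a red box holding 2 apples and 6 oranges is picked with probability 4/10, and a blue box holding 3 apples and 1 orange is picked with probability 6/10. The variable names are illustrative. The prior is the box probability before any fruit is seen; the posterior is the box probability after an orange has been drawn.

```python
from fractions import Fraction

# Prior: probability of each box before observing any fruit.
prior = {"red": Fraction(4, 10), "blue": Fraction(6, 10)}

# Likelihood of drawing an orange from each box (oranges / fruit in the box).
likelihood_orange = {"red": Fraction(6, 8), "blue": Fraction(1, 4)}

# Law of total probability: overall chance of drawing an orange.
evidence = sum(prior[box] * likelihood_orange[box] for box in prior)

# Bayes' formula: probability of each box given that an orange was drawn.
posterior = {box: prior[box] * likelihood_orange[box] / evidence for box in prior}

print(evidence, posterior["red"], posterior["blue"])  # 9/20 2/3 1/3
```

Observing the orange raises the probability of the red box from 4/10 to 2/3, because oranges are much more common in that box.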

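The word for 概率

Word: probability

1. Definition and meaning

1.1 Part of speech: noun

1.2 Meaning: how likely something is to happen.

1.3 English definition: The extent to which an event is likely to occur.

1.4 Related words: likelihood (synonym), chance (synonym), probable (derived adjective).

---

2. Origin and background

2.1 Etymology: from Latin "probabilitas", derived from "probabilis", meaning "credible, provable".

2.2 Anecdote: since ancient times people have had an intuitive sense of how likely various events are.

In gambling games, for example, gamblers had no precise way of calculating probabilities, but they could sense that some results came up more easily than others.

In ancient Greece, philosophers also discussed the concepts of uncertainty and possibility, which laid some of the intellectual groundwork for the later concept of probability.

---

3. Common collocations and phrases

3.1 Phrases:

(1) probability theory: 概率论
Example: Probability theory is very important in modern statistics.
Chinese translation: 概率论在现代统计学中非常重要。

(2) high probability: 高概率
Example: There is a high probability that it will rain tomorrow.
Chinese translation: 明天很有可能下雨。

(3) low probability: 低概率
Example: The low probability event still might happen.
Chinese translation: 低概率事件仍然有可能发生。

---

4. Practical snippets

(1) "What's the probability of getting two heads when flipping two coins?" Tom asked his math teacher. His teacher replied, "The probability is one fourth."
Chinese translation: “抛两枚硬币得到两个正面的概率是多少?”汤姆问他的数学老师。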

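An introduction to the content of the UK WJEC A-level Mathematics exam

Hi everyone, today let's chat about what is in the UK WJEC A-level Mathematics exam.

I. Overview.

This exam is like a big mathematical adventure with lots of different levels to get through.

It covers everything from basic mathematical knowledge to fairly advanced mathematical concepts.

II. Algebra

1. Equations

You need to be able to solve all kinds of equations. A linear equation in one unknown is a piece of cake: for 2x + 3 = 7, move the terms, do the arithmetic, and you are done.

A quadratic equation $ax^2 + bx + c = 0$ is a bit more of a challenge. You need the quadratic formula $x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$, which is like holding the key to a special door.

Then there are simultaneous equations, where several conditions have to hold at once.

For example, if one equation is y =2x+1 and the other is y=x 2, you have to find the point that lies on both lines, that is, the values of x and y. It is like finding where two roads cross.

2. Functions

Functions are a big family.

For a linear function y = mx + c you need to know what the gradient m and the intercept c mean.

The gradient is like the steepness of a hillside, and the intercept is the y-coordinate of the point where the line crosses the y-axis.

Then there is the quadratic function $y = ax^2 + bx + c$, whose graph is a parabola with a vertex and an axis of symmetry.

You need to be able to tell whether it opens upward (a > 0) or downward (a < 0), which is like telling whether a bowl is the right way up or upside down.

III. Geometry

1. Plane geometry

The triangle is an important character.

You need to know that the interior angles of a triangle add up to 180 degrees. An isosceles triangle has two equal sides and two equal base angles; an equilateral triangle goes further, with three equal sides and three angles of 60 degrees.

Then there are quadrilaterals. A rectangle has four right angles and equal opposite sides; a parallelogram has opposite sides that are parallel and equal.

They are like a group of friends lining up neatly, each with its own place.

Circles are fun too.

You need to know the circumference formula $C = 2\pi r$ and the area formula $S = \pi r^2$.

Here r is the radius of the circle, and π is like a mystery guest in mathematics: it is about 3.14 and keeps turning up in calculations involving circles.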
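The quadratic formula mentioned above translates directly into a few lines of Python. This is only a sketch for real roots; the function name `solve_quadratic` is made up for the example.

```python
import math


def solve_quadratic(a, b, c):
    """Real roots of a*x^2 + b*x + c = 0, in increasing order (empty if none)."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic equation")
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return ()  # no real roots
    root = math.sqrt(discriminant)
    # A set removes the duplicate when the discriminant is zero.
    return tuple(sorted({(-b - root) / (2 * a), (-b + root) / (2 * a)}))


print(solve_quadratic(1, -3, 2))  # (1.0, 2.0)
print(solve_quadratic(1, 2, 1))   # (-1.0,)
print(solve_quadratic(1, 0, 1))   # ()
```

The sign of the discriminant b^2 - 4ac decides how many real roots there are: two when it is positive, one when it is zero, none when it is negative.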

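A distribution function (cumulative distribution function, CDF) F is strictly increasing if F(x1) < F(x2) whenever x1 < x2. For a continuous distribution, F is the integral of the probability density function (PDF) f, so F'(x) = f(x), and F is strictly increasing on any interval where the density is positive.

In other words, the condition concerns the sign of the density itself, which is the derivative of F. It does not require the density to increase, and it says nothing about the derivative of the density.

A typical example of a strictly increasing distribution function is the uniform distribution, whose probability density function on [0,1] is

f(x)=1, 0≤x≤1

We can analyze the uniform distribution starting from the definition.

The density of the uniform distribution on [0,1] is the constant f(x)=1 for x∈[0,1], so the distribution function is F(x)=x for 0≤x≤1 (with F(x)=0 for x<0 and F(x)=1 for x>1). Because the density is positive on [0,1], F is strictly increasing there.

A constant density means that subintervals of [0,1] of equal length have equal probability. Each individual point has probability 0, so "equally likely" refers to intervals, not to single values.

Because the density is constant, its own derivative is 0 on (0,1). This does not conflict with strict monotonicity of F: what matters is that f(x)=1>0, not how f changes.

Outside [0,1] the density is 0 and F is flat, so F is strictly increasing only on the support [0,1].

Because of this equal-likelihood property, the uniform distribution can be used to simulate random events such as tossing a coin or drawing lots, for example by calling the toss heads when a uniform draw is below 1/2.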
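A short Python check of the uniform example, written as a sketch with illustrative function names: the density is constant, yet the distribution function rises strictly across the support and is flat outside it.

```python
def uniform_pdf(x):
    """Density of the uniform distribution on [0, 1]."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


def uniform_cdf(x):
    """Distribution function of the uniform distribution on [0, 1]: F(x) = x, clamped."""
    return min(1.0, max(0.0, x))


grid = [i / 10 for i in range(11)]  # 0.0, 0.1, ..., 1.0
cdf_values = [uniform_cdf(x) for x in grid]

# The density never changes on the support ...
density_is_constant = all(uniform_pdf(x) == 1.0 for x in grid)
# ... while the distribution function strictly increases there.
cdf_strictly_increasing = all(a < b for a, b in zip(cdf_values, cdf_values[1:]))
# Outside the support F is flat, so it is not strictly increasing everywhere.
flat_outside = uniform_cdf(1.5) == uniform_cdf(2.0)

print(density_is_constant, cdf_strictly_increasing, flat_outside)  # True True True
```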

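Differential privacy (1): basic introduction

In this section we introduce differential privacy, starting with the first differentially private algorithm, proposed by Warner [1].

I. Randomized Response

Problem description

Suppose you are the teacher of a class that has just sat an exam. Many students cheated, but you do not know how many.

How can you work out how many students cheated? (The students will of course not honestly admit to cheating.)

Stated abstractly: there are $n$ people, and each person $i$ holds a private bit $X_i \in \{0,1\}$ that they want to keep hidden from everyone else.

To cooperate with an analyst who wants to study everyone's data, each person $i$ uses their private bit $X_i$ and some random numbers they generate themselves to produce $Y_i \in \{0,1\}$, and sends the message $Y_i$ to the analyst.

Finally the analyst uses all the received $Y_i$ to estimate the proportion

$p = \frac{1}{n} \sum_{i=1}^{n} X_i$

so that the analyst knows roughly how many people have $X_i = 0$ and how many have $X_i = 1$.

The problem can be abstracted as $X = \sum_{i=1}^{n} X_i$, where $X_1, X_2, \ldots, X_n$ are mutually independent with $\Pr(X_i = 1) = p_i$ and $\Pr(X_i = 0) = 1 - p_i$.

(That is, there are $n$ Bernoulli distributions with expectations $p_i$.) Let $p = \mu = \frac{1}{n} \sum_{i=1}^{n} X_i$.

How to generate the randomness

(1) First suppose everyone tells the truth and can only tell the truth.

That is, whatever person $i$'s private bit $X_i$ is, that is what they send to the analyst.

Then the $Y$ values the analyst receives equal $X$:

$Y_i = \begin{cases} X_i & \text{with probability } 1 \\ 1 - X_i & \text{with probability } 0 \end{cases}$

This gives $\tilde{p} = \frac{1}{n} \sum_{i=1}^{n} Y_i$, and in fact $p = \tilde{p}$.

The value is accurate, but the analyst knows for certain that everyone told the truth and therefore learns every person's $X_i$ exactly.

So how can we protect each individual's privacy and still let the analyst compute the true value of $p$?

(2) Suppose each person tells the truth with probability one half and lies with probability one half:

$Y_i = \begin{cases} X_i & \text{with probability } \frac{1}{2} \\ 1 - X_i & \text{with probability } \frac{1}{2} \end{cases}$

Then the mean of $Y_i$ is 1/2: if a person sent their message many times, its expectation would be 1/2. The data $Y$ the analyst receives is completely independent of $X$, with no correlation between the two, so the collected data is just a sequence of fair coin flips (its sum is binomial). In other words, whether a person reports 0 or 1 has nothing to do with their true value.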
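The two extremes described above can be checked with a short simulation. The sketch also tries an intermediate truth probability of 0.75 and inverts the known bias; that intermediate case and the correction formula (from E[Y_i] = (2γ - 1)p + (1 - γ), where γ is the truth probability) are an extension beyond this excerpt and should be read as an assumption of the example. All names are illustrative.

```python
import random


def randomized_response(xs, truth_prob, seed=0):
    """Each person reports X_i with probability truth_prob, otherwise 1 - X_i."""
    rng = random.Random(seed)
    return [x if rng.random() < truth_prob else 1 - x for x in xs]


def estimate_p(ys, truth_prob):
    """Invert E[Y] = (2*g - 1)*p + (1 - g); undefined when truth_prob is 1/2."""
    mean_y = sum(ys) / len(ys)
    return (mean_y - (1 - truth_prob)) / (2 * truth_prob - 1)


population_rng = random.Random(1)
xs = [1 if population_rng.random() < 0.3 else 0 for _ in range(100_000)]
p_true = sum(xs) / len(xs)

ys_truthful = randomized_response(xs, 1.0)   # case (1): exact, but no privacy
ys_coin = randomized_response(xs, 0.5)       # case (2): private, but useless
ys_mixed = randomized_response(xs, 0.75)     # assumed intermediate setting

print(p_true, sum(ys_coin) / len(ys_coin), estimate_p(ys_mixed, 0.75))
```

With truth probability 1 the reports equal the secrets; with 1/2 their mean sits near 0.5 whatever the secrets are; in between, the analyst can still recover p approximately while no single report is conclusive.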

1 PROBABILITY

1.1 Probability Theory and Statistical Inference

Whenever we have available just a sample of data from a population (and this will be the typical situation) we need to be able to infer the (unknown) values of the population characteristics from their estimated sample counterparts. This is known as statistical inference and requires the knowledge of basic concepts in probability and an appreciation of probability distributions.

1.2 Basic Concepts in Probability

Many, if not all, of you will have had some exposure to the basic concepts of probability, so our treatment here is accordingly brief. We will begin with a few definitions, which will enable us to establish a vocabulary.

- An experiment is an action, such as tossing a coin, which has a number of possible outcomes or events, such as heads ($H$) or tails ($T$).
- A trial is a single performance of the experiment, with a single outcome.
- The sample space consists of all possible outcomes of the experiment. The outcomes for a single toss of a coin are $[H, T]$, for example. The outcomes in the sample space are mutually exclusive, which means that the occurrence of one rules out all the others: you cannot have both $H$ and $T$ in a single toss of the coin. They are also exhaustive, since they define all possibilities: you can only have either $H$ or $T$ in a single toss.
- With each outcome in the sample space we can associate a probability, which is the chance of that outcome occurring. Thus, if the coin is fair, the probability of $H$ is 0.5.

With these definitions, we have the following probability axioms.

- The probability of an outcome $A$, $P(A)$, must lie between 0 and 1, i.e.

  $0 \le P(A) \le 1$ (1.1)

- The sum of the probabilities associated with all the outcomes in the sample space is 1. This follows from the fact that one, and only one, of the outcomes must occur, since they are mutually exclusive and exhaustive, i.e., if $P_i$ is the probability of outcome $i$ occurring and there are $n$ possible outcomes, then

  $\sum_{i=1}^{n} P_i = 1$ (1.2)

- The complement of an outcome is defined as everything in the sample space apart from that outcome: the complement of $H$ is $T$, for example. We will write the complement of $A$ as $\bar{A}$ (to be read as 'not-$A$' and not to be confused with the mean of $A$!). Thus, since $A$ and $\bar{A}$ are mutually exclusive and exhaustive,

  $P(\bar{A}) = 1 - P(A)$ (1.3)

Most practical problems require the calculation of the probability of a set of outcomes, rather than just a single one, or the probability of a series of outcomes in separate trials, e.g., what is the probability of throwing three $H$ in five tosses? We refer to such sets of outcomes as compound events.

Although it is sometimes possible to calculate the probability of a compound event by examining the sample space, typically the sample space is too complex, even impossible, to write down. To calculate compound probabilities in such situations, we make use of a few simple rules.

The addition rule

This rule is associated with 'or' (sometimes denoted as $\cup$, known as the union of two events):

$P(A \text{ or } B) = P(A) + P(B) - P(A \text{ and } B)$ (1.4)

Here we have introduced a further event, '$A$ and $B$', which encapsulates the idea of the intersection ($\cap$) of two events.

Consider the experiment of rolling a six-sided die. The sample space is thus $[1, 2, 3, 4, 5, 6]$, $n = 6$ and $P_i = 1/6$ for all $i = 1, 2, \ldots, 6$. The probability of rolling a five or a six is

$P(5 \text{ or } 6) = P(5) + P(6) - P(5 \text{ and } 6)$

Now, $P(5 \text{ and } 6) = 0$, since a five and a six cannot simultaneously occur (the events are mutually exclusive). Thus

$P(5 \text{ or } 6) = \frac{1}{6} + \frac{1}{6} - 0 = \frac{1}{3}$

It is not always the case that the two events are mutually exclusive, so that their intersection does not always have zero probability. Consider now the experiment of drawing a single playing card from a standard $n = 52$ card pack.
The sample space is the entire 52 card deck and $P_i = 1/52$ for all $i$. Let us now compute the probability of obtaining either a Queen or a Spade from this single draw. Using obvious notation

$P(Q \text{ or } S) = P(Q) + P(S) - P(Q \text{ and } S)$ (1.5)

Now, $P(Q) = 4/52$ and $P(S) = 13/52$ (obtained in each case by counting up the number of outcomes in the sample space that are defined by each event and dividing the result by the total number of outcomes in the sample space). However, by doing this, the outcome representing the Queen of Spades ($Q$ and $S$) gets included in both calculations and is thus 'double counted'. It must therefore be subtracted from $P(Q) + P(S)$, thus leading to (1.5). The events $Q$ and $S$ are not mutually exclusive, $P(Q \text{ and } S) = 1/52$, and

$P(Q \text{ or } S) = \frac{4}{52} + \frac{13}{52} - \frac{1}{52} = \frac{16}{52}$

The multiplication rule

The multiplication rule is associated with 'and'. Consider the event of rolling a die twice and asking what the probability of obtaining fives on both rolls is. Denote this probability as $P(5_1 \text{ and } 5_2)$, where we now use the notation $i_j$ to signify obtaining outcome $i$ on trial $j$: this probability will be given by

$P(5_1 \text{ and } 5_2) = P(5_1) \times P(5_2) = \frac{1}{6} \times \frac{1}{6} = \frac{1}{36}$

The logic of this calculation is straightforward. The probability of obtaining a five on a single roll of the die is $1/6$ and, because the outcome of one roll of the die cannot affect the outcome of a second roll, the probability of this second roll also producing a five must again be $1/6$. The probability of both rolls producing fives must then be the product of these two individual probabilities. Technically, we can multiply the individual probabilities together because the events are independent.

What happens when events are not independent? Consider now drawing two playing cards and asking for the probability of obtaining a queen of spades ($QS_1$) on the first card and an $S$ on the second ($S_2$).
If the first card is replaced before the second card is drawn then the events $QS_1$ and $S_2$ are still independent and the probability will be

$P(QS_1) \times P(S_2) = \frac{1}{52} \times \frac{13}{52} = 0.0048$

However, if the second card is drawn without the first being replaced (i.e., there is a 'normal' deal) then we have to take into account the fact that, if $QS_1$ has occurred, then the sample space for the second card has been reduced to 51 cards, 12 of which will be $S$: thus the probability of $S_2$ occurring is $12/51$, not $13/52$. The probability of $S_2$ is therefore dependent on $QS_1$ having occurred and thus the two events are not independent. Technically, this is a conditional probability, denoted $P(S_2 | QS_1)$ and in general $P(B | A)$, and the general multiplication rule of probabilities is, for our example,

$P(QS_1 \text{ and } S_2) = P(QS_1) \times P(S_2 | QS_1) = \frac{1}{52} \times \frac{12}{51} = 0.00452$

and, in general,

$P(A \text{ and } B) = P(A) \times P(B | A)$

Only if the events are independent is $P(B | A) = P(B)$. More formally, if $A$ and $B$ are independent, then, because the probability of $B$ occurring is unaffected by whether $A$ occurs or not,

$P(B | A) = P(B | \bar{A}) = P(B)$ and $P(A | B) = P(A | \bar{B}) = P(A)$

Combining the rules

Consider the following example. It is equally likely that a mother has a boy or a girl on the first (single) birth, but a second birth is more likely to be a boy if there was a boy on the first birth and similarly for a girl. Thus

$P(B_1) = P(G_1) = 0.5$

but, for example,

$P(B_2 | B_1) = P(G_2 | G_1) = 0.6$

which implies that

$P(B_2 | G_1) = P(G_2 | B_1) = 0.4$

The probability of a mother having one child of each sex is thus

$P(\text{1 girl and 1 boy}) = P((G_1 \text{ and } B_2) \text{ or } (B_1 \text{ and } G_2)) = P(G_1) P(B_2 | G_1) + P(B_1) P(G_2 | B_1) = 0.5 \times 0.4 + 0.5 \times 0.4 = 0.4$

Counting rules: combinations and permutations

The preceding problem can be illustrated using a tree diagram, which is a way of enumerating all possible outcomes in a sample space with the associated probabilities.
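The enumeration that a tree diagram performs for the two-birth example can be sketched in Python as follows. The variable names are illustrative; the probabilities are the ones given in the example (0.5 for the first birth, 0.6 for a second child of the same sex as the first).

```python
from fractions import Fraction
from itertools import product

first_birth = {"B": Fraction(1, 2), "G": Fraction(1, 2)}
same_sex_again = Fraction(6, 10)  # P(B2 | B1) = P(G2 | G1) = 0.6


def path_probability(path):
    """Multiply along one branch of the tree: P(first) * P(second | first)."""
    first, second = path
    conditional = same_sex_again if first == second else 1 - same_sex_again
    return first_birth[first] * conditional


# Every leaf of the tree with its probability: BB, BG, GB, GG.
tree = {"".join(path): path_probability(path) for path in product("BG", repeat=2)}
one_of_each = tree["BG"] + tree["GB"]

print(tree)
print(one_of_each)  # 2/5
```

Each leaf applies the multiplication rule along a branch, and adding the two mixed-sex leaves applies the addition rule to mutually exclusive events, reproducing the value 0.4 obtained above.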
Tree diagrams have their uses for simple problems (consult one of the textbooks for more discussion on them), but for more complicated problems they quickly become very complex and difficult to work with.

Suppose we have a family of five children of whom three are girls. To compute the probability of this event occurring, our first task is to be able to calculate the number of ways of having three girls and two boys, irrespective of whether successive births are independent or not. An 'obvious' way of doing this is to write down all the possible orderings, of which there are ten:

GGGBB GGBGB GGBBG GBGGB GBGBG
GBBGG BGGGB BGGBG BGBGG BBGGG

In more complex problems, this soon becomes difficult or impossible, and we then have to resort to using the combinatorial formula. Suppose the three girls are 'named' $a$, $b$ and $c$. Girl $a$ could have been born first, second, third, fourth or fifth, i.e., any one of five 'places' in the ordering:

a???? ?a??? ??a?? ???a? ????a

Suppose that $a$ is born first; then $b$ can be born either second, third, fourth or fifth, i.e., any one of four places in the ordering:

ab??? a?b?? a??b? a???b

But $a$ could have been born second, etc., so that the total number of places for $a$ and $b$ to 'choose' is $5 \times 4 = 20$. Three places remain for $c$ to choose, so, by extending the argument, the three girls can choose a total of $5 \times 4 \times 3 = 60$ places between them. This is the number of permutations of three named girls in five births, and can be given the notation

${}^5P_3 = 5 \times 4 \times 3 = \frac{5 \times 4 \times 3 \times 2 \times 1}{2 \times 1} = \frac{5!}{2!}$

which uses the factorial (!) notation. ${}^5P_3 = 60$ is six times as large as the number of possible orderings written down above. The reason for this is that the listing does not distinguish between the girls, denoting each of them as $G$ rather than $a$, $b$ or $c$. The permutation formula thus overestimates the number of combinations by a factor representing the number of ways of ordering the three girls, which is $3 \times 2 \times 1 = 3!$. Thus the formula for the combination of three girls in five births is

${}^5C_3 = \frac{{}^5P_3}{3!} = \frac{5!}{3!\,2!} = \frac{5 \times 4 \times 3 \times 2 \times 1}{(3 \times 2 \times 1)(2 \times 1)} = 10$

In general, if there are $n$ children and $r$ of them are girls, the number of combinations is

${}^nC_r = \frac{{}^nP_r}{r!} = \frac{n!}{(n-r)!\,r!}$

Bayes theorem

Recall that

$P(A \text{ and } B) = P(A) \times P(B | A)$

or, alternatively,

$P(B | A) = \frac{P(A \text{ and } B)}{P(A)}$

This can be expanded to be written as

$P(B | A) = \frac{P(A | B) \times P(B)}{P(A)} = \frac{P(A | B) \times P(B)}{P(A | B) \times P(B) + P(A | \bar{B}) \times P(\bar{B})}$

which is known as Bayes theorem. With this, we can now answer the following question: given that the second birth was a girl, what is the probability that the first birth was a boy, i.e., what is $P(B_1 | G_2)$? Noting that the event $\bar{B}_1$ is $G_1$, Bayes theorem gives us

$P(B_1 | G_2) = \frac{P(G_2 | B_1) \times P(B_1)}{P(G_2 | B_1) \times P(B_1) + P(G_2 | G_1) \times P(G_1)} = \frac{0.4 \times 0.5}{0.4 \times 0.5 + 0.6 \times 0.5} = 0.4$

Thus, knowing that the second child was a girl allows us to update our probability that the first child was a boy from the unconditional value of 0.5 to the new value of 0.4.

Definitions of probability

In our development of probability, we have begged one important question: where do the actual probabilities come from? This, in fact, is a question that has vexed logicians and philosophers for several centuries and, consequently, there are (at least) three definitions of probability in common use today.

The first is the classical or a priori definition and is the one that has been implicitly used in the illustrative examples. Basically, it assumes that each outcome in the sample space is equally likely, so that the probability that an event occurs is calculated by dividing the number of outcomes that indicate the event by the total number of possible outcomes in the sample space.
Thus, if the experiment is rolling a fair six-sided die, and the event is throwing an even number, then the number of outcomes indicating the event is three (the outcomes $[2, 4, 6]$), the total number of outcomes in the sample space is six $[1, 2, 3, 4, 5, 6]$, so that the required probability is $3/6 = 1/2$.

This will only work if, in our example, we can legitimately assume that the die is fair. What would be the probability of obtaining an even number if the die was biased, but in an unknown way? For this, we need to use the relative frequency definition. If we conduct an infinite number of trials of an experiment (that is, $n \to \infty$), and the number of times an event occurs in these $n$ trials is $k$, then the probability of the event is defined as the limiting value of the ratio $k/n$.

Suppose that even numbers were twice as likely to occur on a throw of the die as odd numbers (the probability of any even number is $2/9$, the probability of any odd number is $1/9$, so that the probability of an even number being thrown is $2/3$). However, we do not know this, and decide to estimate this probability by throwing the die a large number (10,000) of times and recording as we go the relative frequency of even numbers being thrown. A plot of this 'cumulative' relative frequency is shown overleaf. We observe that it eventually 'settles down' on $2/3$, but it takes quite a large number of throws (around 6,000) before we get a really good estimate of the probability.

[Figure: cumulative proportion of even numbers (vertical axis, .62 to .78) against number of rolls (horizontal axis, 1,000 to 10,000)]

What happens if the experiment cannot be conducted more than once, if at all, e.g., if it is a 'thought experiment'? We can then use subjective probability, which assigns a degree of belief on an event actually occurring. If we believe that it is certain to occur, then we assign a probability of one to the event, if we believe that it is impossible to occur then we assign a probability of zero, and if we think that it has a 'good chance' of occurring then we presumably assign a probability that is greater than 0.5 but less than 1, etc.

No matter what definition seems appropriate to the experiment and outcome at hand, fortunately all definitions follow the same probability axioms, and hence rules, as those outlined above.
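The relative-frequency experiment with the biased die described above can be reproduced with a short simulation. This is a sketch with an illustrative function name and an arbitrary seed, so its path will not match the plot in the notes; only the long-run behaviour should agree.

```python
import random


def cumulative_even_frequency(n_rolls=10_000, seed=0):
    """Running proportion of even faces for a die whose even faces are twice as likely."""
    rng = random.Random(seed)
    faces = [1, 2, 3, 4, 5, 6]
    weights = [1, 2, 1, 2, 1, 2]  # odd faces 1/9 each, even faces 2/9 each
    rolls = rng.choices(faces, weights=weights, k=n_rolls)
    evens = 0
    running = []
    for count, face in enumerate(rolls, start=1):
        if face % 2 == 0:
            evens += 1
        running.append(evens / count)
    return running


running = cumulative_even_frequency()
for n in (100, 1_000, 6_000, 10_000):
    print(n, round(running[n - 1], 4))
```

Early values can wander well away from 2/3, while the later ones cluster around it, which is the 'settling down' that the relative frequency definition relies on.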