Centrum voor Wiskunde en Informatica Known-item retrieval on broadcast TV J.A. List, A.R. v


The Mogao Caves, near Dunhuang in Gansu Province, China, are a treasure trove of ancient Chinese art and culture. Also known as the Thousand Buddha Grottoes, the caves hold a vast array of murals that have survived for centuries and offer a glimpse into the history and religious beliefs of the past.

The murals are a testament to the artistic skill and spiritual depth of the people who created them. Spanning over a thousand years, from the 4th to the 14th century, they depict a wide range of subjects, including Buddhist narratives, historical events, and scenes from daily life. Their vibrant colors and intricate details reflect the dedication of the artists who labored over them.

One of the most striking features of the murals is their use of color. The artists employed a rich palette, from deep blues and greens to bright reds and yellows, to bring their subjects to life. Gold leaf adds a touch of opulence and grandeur, reflecting the wealth and prosperity of the time.

The murals also serve as a visual narrative of Buddhist teachings and stories. Scenes from the life of the Buddha, such as his birth, enlightenment, and death, are depicted in series of panels that illustrate the key events and moral lessons of his life. The paintings also feature bodhisattvas, celestial beings, and other figures from Buddhist mythology, each with its own attributes and symbolic meanings.

In addition to their religious significance, the murals provide a window into the daily life and customs of the people of ancient China. Scenes of farming, trade, and social gatherings show the social structure and way of life of the time. The attention to detail in these scenes is remarkable, with even the smallest objects and figures rendered with precision and care.

The preservation of the murals is owed to the efforts of countless individuals and organizations over the years. Despite the ravages of time, natural disasters, and human interference, these works have been carefully restored and protected. Advanced conservation techniques and the establishment of the Dunhuang Academy have played a crucial role in ensuring their longevity.

However, the murals also face significant challenges in the modern era. Climate change, increased tourism, and environmental pollution threaten the delicate balance of the caves' microclimate and could cause irreversible damage. Efforts are being made to mitigate these risks, such as limiting the number of visitors and implementing strict environmental controls within the caves.

In conclusion, the Mogao Caves murals are a remarkable record of the artistic and cultural heritage of ancient China. Their intricate designs, vibrant colors, and religious and historical significance make them a must-see destination for anyone interested in exploring the rich tapestry of Chinese history and art. As we continue to appreciate and preserve these treasures, we also bear the responsibility of ensuring their survival for future generations to marvel at and learn from.

AMBULANT: A Fast, Multi-Platform Open Source SMIL Player

Dick C.A. Bulterman, Jack Jansen, Kleanthis Kleanthous, Kees Blom and Daniel Benden
CWI: Centrum voor Wiskunde en Informatica
Kruislaan 413, 1098 SJ Amsterdam, The Netherlands
+31 20 592 43 00
Dick.Bulterman@cwi.nl

ABSTRACT
This paper provides an overview of the Ambulant Open SMIL player. Unlike other SMIL implementations, the Ambulant Player is a reconfigurable SMIL engine that can be customized for use as an experimental media player core. The Ambulant Player is a reference SMIL engine that can be integrated in a wide variety of media player projects. This paper starts with an overview of our motivations for creating a new SMIL engine, then discusses the architecture of the Ambulant Core (including the scalability and custom integration features of the player). We close with a discussion of our implementation experiences with Ambulant instances for Windows, Mac and Linux versions for desktop and PDA devices.

Categories and Subject Descriptors
H.5.1 Multimedia Information Systems [Evaluation]; H.5.4 Hypertext/Hypermedia [Navigation].

General Terms
Experimentation, Performance, Verification

Keywords
SMIL, Player, Open-Source, Demos

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. MM'04, October 10-16, 2004, New York, New York, USA. Copyright 2004 ACM 1-58113-893-8/04/0010...$5.00.

1. MOTIVATION
The Ambulant Open SMIL Player is an open-source, full-featured SMIL 2.0 player. It is intended to be used within the research community (in and outside our institute) in projects that need source code access to a production-quality SMIL player environment. It may also be used as a stand-alone SMIL player for applications that do not need proprietary media formats. The player supports a range of SMIL 2.0 profiles (including desktop and mobile configurations) and is available in distributions for Linux, Macintosh, and Windows systems ranging from desktop devices to PDA and handheld computers.
While several SMIL player implementations exist, including the RealPlayer [4], Internet Explorer [5], PocketSMIL [7], GRiNS [6], X-SMILES [8] and various proprietary implementations for mobile devices, we developed Ambulant for three reasons:

• None of the existing SMIL players provides a complete and correct SMIL 2.0 implementation. The Ambulant player implements all of SMIL, based on the SMIL 2.0 Language profile plus extensions to support advanced animation and the needs of the mobile variant used by the 3GPP/PSS-6 SMIL specification [9].
• All commercial SMIL players are geared to the presentation of proprietary media. The Ambulant player uses open-source media codecs and open-source network transfer protocols, so that the player can be easily customized for use in a wide range of research projects.
• Our goal is to build a platform that will encourage the development of comparable multimedia research output. By providing what we expect will be a standard baseline player, other researchers and development organizations can concentrate on integrating extensions to the basic player (either in terms of new media codecs or new network control algorithms).
These extensions can then be shared by others.

In contrast to the Helix client architecture [10], which also moved to a GPL core in mid-2004, the Ambulant player supports a wider range of SMIL target application architectures, it provides a more complete and correct implementation of the SMIL language, it provides much better performance on low-resource devices and it provides a more extensible media player architecture. It also provides an implementation that includes all of the media codecs as part of the open client infrastructure.

The Ambulant target community is not viewers of media content, but developers of multimedia infrastructures, protocols and networks. Our goal has been to augment the existing partial SMIL implementations produced by many groups with a complete implementation that supports even the exotic features of the SMIL language. The following sections provide an introduction to the architecture of the player and describe the state of the various Ambulant implementations. We then discuss how the Ambulant Core can be re-purposed in other projects. We start with a discussion of Ambulant's functional support for SMIL.

2. FUNCTIONAL SUPPORT FOR SMIL 2.0
The SMIL 2.0 recommendation [1] defines 10 functional groups that are used to structure the standard's 50+ modules. These modules define the approximately 30 XML elements and 150 attributes that make up the SMIL 2.0 language. In addition to defining modules, the SMIL 2.0 specification also defines a number of SMIL profiles: collections of elements, attributes and attribute values that are targeted to meet the needs of a particular implementation community.
Common profiles include the full SMIL 2.0 Language, SMIL Basic, 3GPP SMIL, XHTML+SMIL and SMIL 1.0 profiles.

A review of these profiles is beyond the scope of this paper (see [2]), but a key concern of Ambulant's development has been to provide a player core that can be used to support a wide range of SMIL target profiles with custom player components. This has resulted in an architecture that allows nearly all aspects of the player to be plug-replaceable via open interfaces. In this way, tailored layout, scheduling, media processing and interaction modules can be configured to meet the needs of individual profile requirements. The Ambulant player is the only player that supports this architecture.

The Ambulant player provides a direct implementation of the SMIL 2.0 Language profile, plus extensions that provide enhanced support for animation and timing control. Compared with other commercial and non-commercial players, the Ambulant player implements not only a core scheduling engine, it also provides complete support for SMIL layout, interaction, content control and networking facilities. Ambulant provides the most complete implementation of the SMIL language available to date.

3. AMBULANT ARCHITECTURE
This section provides an overview of the architecture of the Ambulant core. While this discussion is high-level, it will provide sufficient detail to demonstrate the applicability of Ambulant to a wide range of projects. The sections below consider the high-level interface structure, the common services layer and the player common core architecture.

3.1 The High-Level Interface Structure
Figure 1 shows the highest level player abstraction. The player core supports top-level control external entry points (including play/stop/pause) and in turn manages a collection of external factories that provide interfaces to data sources (both for standard and pseudo-media), GUI and window system interfaces and interfaces to renderers.
Unlike other players that treat SMIL as a datatype [4],[10], the Ambulant engine has a central role in interaction with the input/output/screen/devices interfaces.

This architecture allows the types of entry points (and the moment of evaluation) to be customized and separated from the various data sources and renderers. This is important for integration with environments that may use non-SMIL layout or special device interface processing.

Figure 1. Ambulant high-level structure.

3.2 The Common Services Layer
Figure 2 shows a set of common services that are supplied for the player to operate. These include operating systems interfaces, drawing systems interfaces and support for baseline XML functions. All of these services are provided by Ambulant; they may also be integrated into other player-related projects or they may be replaced by new service components that are optimized for particular devices or algorithms.

Figure 2. Ambulant Common Services Layer.

3.3 The Player Common Core
Figure 3 shows a slightly abstracted view of the Ambulant common core architecture. The view is essentially that of a single instance of the Ambulant player. Although only one class object is shown for each service, multiple interchangeable implementations have been developed for all objects (except the DOM tree) during the player's development. As an example, multiple schedulers have been developed to match the functional capabilities of various SMIL profiles.

Arrows in the figure denote that one abstract class depends on the services offered by the other abstract class. Stacked boxes denote that a single instance of the player will contain instances of multiple concrete classes implementing that abstract class: one for audio, one for images, etc. All of the stacked-box abstract classes come with a factory function to create the instances of the required concrete class.

The bulk of the player implementation is architected to be platform independent.
As we will discuss, this platform independent component has already been reused for five separate player implementations. The platform dependent portions of the player include support for actual rendering, UI interaction and datasource processing and control.

When the player is active, there is a single instance of the scheduler and layout manager, both of which depend on the DOM tree object. Multiple instances of data source and playable objects are created. These interact with multiple abstract rendering surfaces. The playable abstract class is the scheduler interface (play, stop) for a media node, while the renderer abstract class is the drawing interface (redraw). Note that not all playables are renderers (audio, SMIL animation).

The architecture has been designed to have all components be replaceable, both in terms of an alternative implementation of a given set of functionality and in terms of a complete re-purposing of the player components. In this way, the Ambulant core can be migrated to being a special purpose SMIL engine or a non-SMIL engine (such as support for MPEG-4 or other standards).

The abstract interfaces provided by the player do not require a "SMIL on Top" model of document processing. The abstract interface can be used with other high-level control models (such as in an XHTML+SMIL implementation), or to control non-SMIL lower-level rendering (such as timed text).

Note that in order to improve readability of the illustration, all auxiliary classes (threading, geometry and color handling, etc.) and several classes that were not important for general understanding (player driver engine, transitions, etc.) have been left out of the diagram.

4. IMPLEMENTATION EXPERIENCES
This section will briefly review our implementation experiences with the Ambulant player. We discuss the implementation platforms used during SMIL's development and describe a set of test documents that were created to test the functionality of the Ambulant player core. We conclude with a discussion on the performance of the Ambulant player.

4.1 Implementation Platforms
SMIL profiles have been defined for a wide range of platforms and devices, ranging from desktop implementations to mobile devices. In order to support our research on distributed SMIL document extensions and to provide a player that was useful for other research efforts, we decided to provide a wide range of SMIL implementations for the Ambulant project. The Ambulant core is available as a single C++ source distribution that provides support for the following platforms:

• Linux: our source distribution includes makefiles that are used with the RH-8 distribution of Linux. We provide support for media using the FF-MPEG suite [11]. The player interface is built using the Qt toolkit [12].
• Macintosh: Ambulant supports Mac OS X 10.3. Media rendering support is available via the internal QuickTime API and via FF-MPEG. The player user interface uses standard Mac conventions and support (Cocoa).
• Windows: Ambulant provides conventional Win32 support for current generation Windows platforms. It has been most extensively tested with XP (Home, Professional and TabletPC) and Windows-2000. Media rendering includes third-party and local support for imaging and continuous media. Networking and user interface support are provided using platform-embedded libraries.
• PocketPC: Ambulant supports PocketPC-2000, PocketPC-2002 and Windows Mobile 2003 systems. The PocketPC implementations provide support for basic imaging, audio and text facilities.
• Linux PDA support: Ambulant provides support for the Zaurus Linux-PDA. Media support is provided via the FF-MPEG library and UI support is provided via Qt.
Media support includes audio, images and simple text.

In each of these implementations, our initial focus has been on providing support for SMIL scheduling and control functions. We have not optimized media renderer support in the Ambulant 1.0 releases, but expect to provide enhanced support in future versions.

4.2 Demos and Test Suites
In order to validate the Ambulant player implementation beyond that available with the standard SMIL test suite [3], several demo and test documents have been distributed with the player core. The principal demos include:

• Welcome: a short presentation that exercises basic timing, media rendering, transformations and animation.
• NYC: a short slideshow in desktop and mobile configurations that exercises scheduling, transformation and media rendering.
• News: a complex interactive news document that tests linking, event-based activation, advanced layout, timing and media integration. Like NYC, this demo supports differentiated mobile and desktop configurations.
• Links: a suite of linking and interaction test cases.
• Flashlight: an interactive user's guide that tests presentation customization using custom test attributes and linking/interaction support.

These and other demos are distributed as part of the Ambulant player web site [13].

4.3 Performance Evaluation
The goal of the Ambulant implementation was to provide a complete and fast SMIL player. We used a C++ implementation core instead of Java or Python because our experience had shown that on small devices (which we feel hold significant interest for future research), the efficiency of the implementation still plays a dominant role. Our goal was to be able to read, parse, model and schedule a 300-node news presentation in less than two seconds on desktop and mobile platforms. This goal was achieved for all of the target platforms used in the player project. By comparison, the same presentation on the Oratrix GRiNS PocketPC player took 28 seconds to read, parse and schedule.
(The Real PocketPC SMIL player and the PocketSMIL players were not able to parse and schedule the document at all because of their limited SMIL language support.)

In terms of SMIL language performance, our goal was to provide a complete implementation of the SMIL 2.0 Language profile [14]. Where other players have implemented subsets of this profile, Ambulant has managed to implement the entire SMIL 2.0 feature set with two exceptions: first, we currently do not support the prefetch elements of the content control modules; second, we provide only single top-level window support in the platform-dependent player interfaces. Prefetch was not supported because of the close association of an implementation with a given streaming architecture. The use of multiple top-level windows, while supported in our other SMIL implementation, was not included in version 1.0 of Ambulant because of pending work on multi-screen mobile devices. Both of these features are expected to be supported in the next release of Ambulant.

5. CURRENT STATUS AND AVAILABILITY
This paper describes version 1.0 of the Ambulant player, which was released on July 12, 2004. (This version is also known as the Ambulant/O release of the player.) Feature releases and platform tuning are expected to occur in the summer of 2004. The current release of Ambulant is always available via our SourceForge links [13], along with pointers to the most recent demonstrators and test suites.

The W3C started its SMIL 2.1 standardization in May, 2004. At the same time, the W3C's timed text working group is completing its first public working draft. We will support both of these activities in upcoming Ambulant releases.

6. CONCLUSIONS
While SMIL support is becoming ubiquitous (in no small part due to its acceptance within the mobile community), the availability of open-source SMIL players has been limited.
This has meant that any group wishing to investigate multimedia extensions or high-/low-level user or rendering support has had to make a considerable investment in developing a core SMIL engine.

We expect that by providing a high-performance, high-quality and complete SMIL implementation in an open environment, both our own research and the research agendas of others can be served. By providing a flexible player framework, extensions from new user interfaces to new rendering engines or content control infrastructures can be easily supported.

7. ACKNOWLEDGEMENTS
This work was supported by the Stichting NLnet in Amsterdam.

8. REFERENCES
[1] W3C, SMIL Specification, /AudioVideo.
[2] Bulterman, D.C.A. and Rutledge, L., SMIL 2.0: Interactive Multimedia for Web and Mobile Devices, Springer, 2004.
[3] W3C, SMIL 2.0 Standard Testsuite, /2001/SMIL20/testsuite/
[4] RealNetworks, The RealPlayer 10, /
[5] Microsoft, HTML+Time in Internet Explorer 6, /workshop/author/behaviors/time.asp
[6] Oratrix, The GRiNS 2.0 SMIL Player. /
[7] INRIA, The PocketSMIL 2.0 Player, wam.inrialpes.fr/software/pocketsmil/.
[8] X-SMILES: An Open XML-Browser for Exotic Applications. /
[9] 3GPP Consortium, The Third-Generation Partnership Project (3GPP) SMIL PSS-6 Profile. /ftp/Specs/archive/26_series/26.246/26246-003.zip
[10] Helix Community, The Helix Player. /.
[11] FFMPEG, FF-MPEG: A Complete Solution for Recording, Converting and Streaming Audio and Video. /
[12] Trolltech, Qtopia: The QT Palmtop /
[13] Ambulant Project, The Ambulant 1.0 Open Source SMIL 2.0 Player, /.
[14] Bulterman, D.C.A., A Linking and Interaction Evaluation Test Set for SMIL, Proc. ACM Hypertext 2004, Santa Cruz, August, 2004.
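
As an illustration of the playable/renderer split and the per-media factory functions described in Section 3.3, the following is a minimal sketch. It is written in Python for brevity, whereas the Ambulant core itself is C++, and every name in it (`Playable`, `Renderer`, `AudioPlayable`, `ImagePlayable`, `PlayableFactory`, `make_default_factory`) is hypothetical and chosen for this example; none of it is taken from the Ambulant source. It only shows the idea that the scheduler talks to a play/stop interface, that drawing is a separate redraw interface, and that not every playable is a renderer.

```python
from abc import ABC, abstractmethod


class Playable(ABC):
    """Scheduler-facing interface for one media node (play, stop)."""

    @abstractmethod
    def play(self) -> None:
        ...

    @abstractmethod
    def stop(self) -> None:
        ...


class Renderer(ABC):
    """Drawing interface (redraw) for playables that paint on a surface."""

    @abstractmethod
    def redraw(self) -> str:
        ...


class AudioPlayable(Playable):
    """A playable that is not a renderer: audio has nothing to draw."""

    def __init__(self, src: str) -> None:
        self.src = src
        self.active = False

    def play(self) -> None:
        self.active = True

    def stop(self) -> None:
        self.active = False


class ImagePlayable(Playable, Renderer):
    """A playable that is also a renderer."""

    def __init__(self, src: str) -> None:
        self.src = src
        self.active = False

    def play(self) -> None:
        self.active = True

    def stop(self) -> None:
        self.active = False

    def redraw(self) -> str:
        # Hypothetical stand-in for real drawing: describe what would be drawn.
        return f"draw {self.src}" if self.active else ""


class PlayableFactory:
    """Maps a media type to a concrete class; entries can be swapped per platform."""

    def __init__(self) -> None:
        self._makers = {}

    def register(self, media_type: str, maker) -> None:
        self._makers[media_type] = maker

    def create(self, media_type: str, src: str) -> Playable:
        return self._makers[media_type](src)


def make_default_factory() -> PlayableFactory:
    """Build a factory with one concrete class per media type (assumed set)."""
    factory = PlayableFactory()
    factory.register("audio", AudioPlayable)
    factory.register("img", ImagePlayable)
    return factory
```

In this sketch a platform port would register different concrete classes under the same media types, which is one simple way to model the replaceable components the paper describes.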

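Complete collection of Python tutorials (Weipan / netdisk)

Learning a programming language cannot be done overnight, and Python is no exception.

There is no shortage of Python material and videos online, but it is scattered and unsystematic, and running into problems can badly dent a learner's motivation.

If you want to become a technically oriented data analyst, or later move into data mining, systems development or secondary development, what follows is a complete netdisk collection of Python tutorials for systematic study.

- Qianfeng Python basics tutorial: /s/1qYTZiNE
- Python course, master index of advanced teaching videos: /s/1hrXwY8k
- Python course, Windows topics: /s/1kVcaH3x
- Python course, Linux topics: /s/1i4VZh5b
- Python course, web topics: /s/1jIMdU2i
- Python course, machine learning: /s/1o8qNB8Q
- Python course, Raspberry Pi devices: /s/1slFee2T

Python originated in the late 1980s.

Its developer was Guido van Rossum of Centrum Wiskunde & Informatica, a research centre for mathematics and computer science located at the Amsterdam Science Park in the Netherlands.

Van Rossum has remained a highly influential figure in Python's development ever since.

In fact, community members gave him an honorary title: Benevolent Dictator For Life (BDFL).

After an unremarkable start, Python has become one of the most popular server-side programming languages on the Internet.

According to W3Techs statistics, it is used on many high-traffic sites, ahead of ColdFusion, PHP, and ….

Of those sites, more than 98% run Python 2.0, and only a little over 1% run 3.0.

As for how to learn Python: in essence, the core of any language is small, and Python's is leaner than most.

Even so, using that core well is not easy, and starting from data types, classes and exceptions is the most basic approach.

If you need to learn Python more systematically, consider the Qianfeng Python training course, whose Python instructors are billed as one of the strongest teaching teams in the industry.

Teachers Yin, Liu and Yang are all Tsinghua University graduates who are fluent in several programming languages and have extensive development experience, years of work at well-known IT companies, and substantial hands-on project experience.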

(Received: 2022-03-10; revised: 2022-05-06) (Editor: KANG Yanhui)

· Recent Advances ·

Advances in the Diagnosis and Treatment of Hyperkalemia with Coexisting Risk Factors

LUO Peiyi, MA Liang, GOU Shenju*

[Abstract] Hyperkalemia is a common clinical problem. Its risk factors include kidney disease, cardiovascular disease, diabetes, and the use of drugs that affect serum potassium.

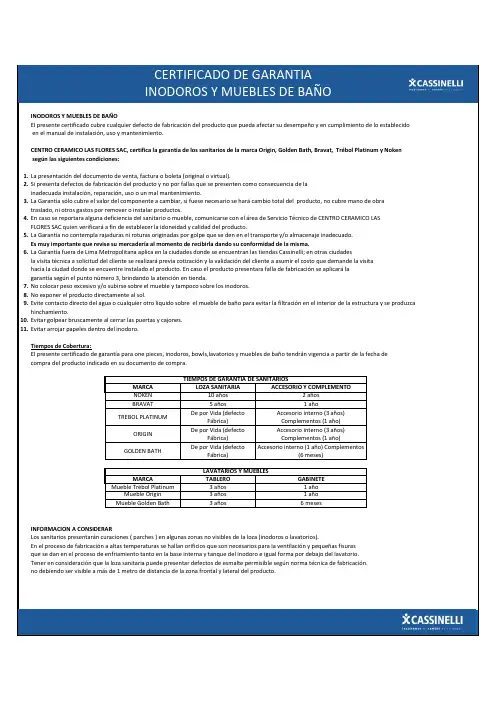

WARRANTY CERTIFICATE: TOILETS AND BATHROOM FURNITURE

This certificate covers any manufacturing defect in the product that may affect its performance, provided the installation, use and maintenance manual has been followed.

CENTRO CERAMICO LAS FLORES SAC certifies the warranty on sanitary ware of the brands Origin, Golden Bath, Bravat, Trébol Platinum and Noken under the following conditions:

1. The sales document, invoice or receipt (original or electronic) must be presented.
2. The warranty applies to manufacturing defects in the product, not to faults resulting from improper installation, repair, use or poor maintenance.
3. The warranty covers only the value of the component to be replaced; if necessary, the whole product will be replaced. It does not cover labor, transport, or other costs of removing or installing products.
4. If any deficiency in the sanitary ware or furniture is reported, contact the Technical Service department of CENTRO CERAMICO LAS FLORES SAC, which will inspect it to establish the suitability and quality of the product.
5. The warranty does not cover cracks or breakage caused by impacts during transport and/or improper storage. It is very important to check your merchandise when you receive it and confirm that it is in order.
6. Outside Metropolitan Lima, the warranty applies in cities that have Cassinelli stores. In other cities, a technical visit at the customer's request will be made after a quotation and the customer's agreement to bear the cost of the visit to the city where the product is installed. If the product has a manufacturing fault, the warranty will be applied as set out in point 3, with service provided in store.
7. Do not place excessive weight on, or climb onto, the furniture or the toilets.
8. Do not expose the product to direct sunlight.
9. Avoid direct contact of water or any other liquid with the bathroom furniture, to prevent seepage into the structure and swelling.
10. Avoid slamming doors and drawers.
11. Avoid throwing paper into the toilet.

Coverage periods
This warranty certificate for one-piece toilets, toilets, bowls, washbasins and bathroom furniture is valid from the purchase date shown on your purchase document.

INFORMATION TO CONSIDER
Sanitary ware will show repairs (patches) in some non-visible areas of the ceramic (toilets or washbasins). The high-temperature manufacturing process leaves holes that are needed for ventilation, and small fissures arise during cooling, both in the internal base and tank of the toilet and underneath the washbasin. Bear in mind that sanitary ceramic may show glaze defects permitted by the technical manufacturing standard; these must not be visible from more than 1 metre from the front and side of the product.

WARRANTY PERIODS FOR SANITARY WARE (brand: sanitary ceramic; accessories and complements)
- Trébol Platinum: lifetime (factory defect); internal fittings 3 years, complements 1 year
- Origin: lifetime (factory defect); internal fittings 3 years, complements 1 year
- Golden Bath: lifetime (factory defect); internal fittings 1 year, complements 6 months
- Bravat and Noken: periods of 2 years, 10 years, 1 year and 5 years are listed

WASHBASINS AND FURNITURE (brand: cabinet, countertop)
- Golden Bath furniture: 3 years / 6 months
- Trébol Platinum furniture: 3 years / 1 year
- Origin furniture: 3 years / 1 year

INSTALLATION
1. Hire qualified personnel to install the products purchased.
2. Check the product's components before installation (read the product's own installation manual or watch the video provided).
3. Before installing the toilet, verify that the position of the floor or wall drain axis matches that of the toilet, and also take into account the position of the water supply point.
4. The drain pipe and the water inlet, inside and out, must be free of impurities and cement residue that could block drainage.
5. For a floor-mounted toilet, mark the position of the anchor bolts on the surface parallel to the finished wall, then drill and install the bolts.
6. Place the wax ring around the toilet's drain outlet, then seat the toilet on the floor and secure it in its final position by fitting the anchor bolts and tightening them against the ceramic. The ring must form a seal between the floor and the toilet to prevent odors and water leaks. Test operation before sealing.
7. The rim of the bowl must be fixed to the floor, or ceramic washbasins fixed in place, with a neutral silicone for sanitary use. This product allows the ceramic to be removed without breaking it (do not use products that make removal impossible).

MAINTENANCE

SANITARY WARE
* Do not clean sanitary ceramic with abrasive materials such as wire brushes or similar, which can damage its finish.
* Dilute cleaning chemicals with water before applying them, to avoid damaging the chrome finish of decorative hinges, push buttons or the seat itself.

BATHROOM FURNITURE
* Ceramic washbasins should be cleaned with water and liquid soap using a soft sponge.
* Afterwards, dry with a cloth to avoid stains.
* Avoid water pooling on the furniture; it could cause the structure to swell.
* Clean the furniture only with a slightly damp cloth.
* Do not apply detergents, and avoid cleaning with abrasive sponges, on both the washbasin and the furniture itself.
* Daily cleaning of the ceramic is important to avoid stains on the surface (baking soda and white vinegar are recommended for removing stains from the ceramic).

The personal data you provide will be used and/or processed by Centro Cerámico Las Flores SAC strictly and solely to give you personalized attention in managing a possible solution to the problem reported, and to document that attention. Centro Cerámico Las Flores SAC may share and/or use and/or store and/or transfer your information to third parties, whether or not they are related to Centro Cerámico Las Flores SAC and whether or not they are its business partners, in order to carry out activities related to the after-sales service and/or technical service requested. You may at any time exercise your rights of information, access, rectification, cancellation and objection regarding your data, in accordance with the Personal Data Protection Law in force and its Regulations. For more information, see the website or write by email to ********************************.

Name of purchaser ……………………………………
Receipt or invoice number ……………………………………
Date of purchase ……………………………………
DNI ……………………………………
Signature ……………………………………
Contact name ……………………………………
Contact signature ……………………………………

INSTALLATION AND MAINTENANCE OF TOILETS AND BATHROOM FURNITURE
CCLF Technical Service
Telephone 0800-1-2150 / 241-5753 / 243-2242 / 241-5746
Contact us via the web: ******************************************************
We inspire change in your home

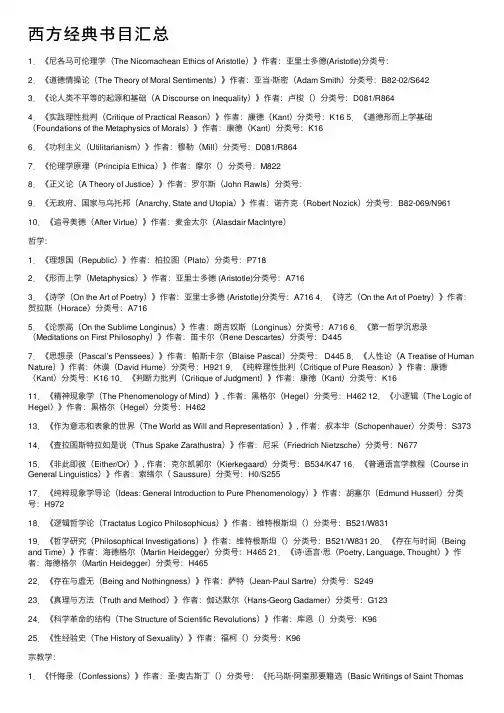

西⽅经典书⽬汇总1.《尼各马可伦理学(The Nicomachean Ethics of Aristotle)》作者:亚⾥⼠多德(Aristotle)分类号:2.《道德情操论(The Theory of Moral Sentiments)》作者:亚当·斯密(Adam Smith)分类号:B82-02/S6423.《论⼈类不平等的起源和基础(A Discourse on Inequality)》作者:卢梭()分类号:D081/R8644.《实践理性批判(Critique of Practical Reason)》作者:康德(Kant)分类号:K16 5.《道德形⽽上学基础(Foundations of the Metaphysics of Morals)》作者:康德(Kant)分类号:K166.《功利主义(Utilitarianism)》作者:穆勒(Mill)分类号:D081/R8647.《伦理学原理(Principia Ethica)》作者:摩尔()分类号:M8228.《正义论(A Theory of Justice)》作者:罗尔斯(John Rawls)分类号:9.《⽆政府、国家与乌托邦(Anarchy, State and Utopia)》作者:诺齐克(Robert Nozick)分类号:B82-069/N961 10.《追寻美德(After Virtue)》作者:麦⾦太尔(Alasdair MacIntyre)哲学:1.《理想国(Republic)》作者:柏拉图(Plato)分类号:P7182.《形⽽上学(Metaphysics)》作者:亚⾥⼠多德 (Aristotle)分类号:A7163.《诗学(On the Art of Poetry)》作者:亚⾥⼠多德 (Aristotle)分类号:A716 4.《诗艺(On the Art of Poetry)》作者:贺拉斯(Horace)分类号:A7165.《论崇⾼(On the Sublime Longinus)》作者:朗吉奴斯(Longinus)分类号:A716 6.《第⼀哲学沉思录(Meditations on First Philosophy)》作者:笛卡尔(Rene Descartes)分类号:D4457.《思想录(Pascal’s Penssees)》作者:帕斯卡尔(Blaise Pascal)分类号: D445 8.《⼈性论(A Treatise of Human Nature)》作者:休谟(David Hume)分类号:H921 9.《纯粹理性批判(Critique of Pure Reason)》作者:康德(Kant)分类号:K16 10.《判断⼒批判(Critique of Judgment)》作者:康德(Kant)分类号:K1611.《精神现象学(The Phenomenology of Mind)》, 作者:⿊格尔(Hegel)分类号:H462 12.《⼩逻辑(The Logic of Hegel)》作者:⿊格尔(Hegel)分类号:H46213.《作为意志和表象的世界(The World as Will and Representation)》, 作者:叔本华(Schopenhauer)分类号:S373 14.《查拉图斯特拉如是说(Thus Spake Zarathustra)》作者:尼采(Friedrich Nietzsche)分类号:N67715.《⾮此即彼(Either/Or)》, 作者:克尔凯郭尔(Kierkegaard)分类号:B534/K47 16.《普通语⾔学教程(Course in General Linguistics)》作者:索绪尔( Saussure)分类号:H0/S25517.《纯粹现象学导论(Ideas: General Introduction to Pure Phenomenology)》作者:胡塞尔(Edmund Husserl)分类号:H97218.《逻辑哲学论(Tractatus Logico Philosophicus)》作者:维特根斯坦()分类号:B521/W83119.《哲学研究(Philosophical Investigations)》作者:维特根斯坦()分类号:B521/W831 20.《存在与时间(Being and Time)》作者:海德格尔(Martin Heidegger)分类号:H465 21.《诗·语⾔·思(Poetry, Language, Thought)》作者:海德格尔(Martin Heidegger)分类号:H46522.《存在与虚⽆(Being and Nothingness)》作者:萨特(Jean-Paul Sartre)分类号:S24923.《真理与⽅法(Truth and 
Method)》作者:伽达默尔(Hans-Georg Gadamer)分类号:G12324.《科学⾰命的结构(The Structure of Scientific Revolutions)》作者:库恩()分类号:K9625.《性经验史(The History of Sexuality)》作者:福柯()分类号:K96宗教学:1.《忏悔录(Confessions)》作者:圣·奥古斯丁()分类号:《托马斯·阿奎那要籍选(Basic Writings of Saint ThomasAquinas)》, 作者:阿奎那( Aquinas)分类号:A6473.《迷途指津(The Guide for the Perplexed)》作者:马蒙尼德(Maimonides)分类号:B985/M2234.《路德基本著作选(Basic Theological Writings)》作者:马丁·路德(Martin Luther)分类号:L9735.《论宗教(On Religion)》作者:施莱尔马赫()分类号:B972/S3416.《我与你(I and Thou)》作者:马丁·布伯(Martin Buber)分类号:B972/S341 7.《⼈的本性及其命运(The Nature and Destiny of Man)》作者:尼布尔()分类号:B972/N6658.《神圣者的观念(The Idea of the Holy)》作者:奥托(Rudolf Otto)分类号:B972/O89 9.《存在的勇⽓(The Courage to Be)》作者:梯利希(Paul Tillich)分类号:B972/O89 10.《教会教义学(Church Dogmatics)》作者:卡尔·巴特(Karl Barth)分类号:B921/B284政治学:1.《政治学(The Politics of Aristotle)》作者:亚⾥⼠多德 (Aristotle)分类号:A7162.《君主论(The Prince)》作者:马基雅维⾥(Niccolo Machiavelli)分类号:D033/M149 3.《社会契约论(The Social Contract)》作者:卢梭()分类号:D033/M1494.《利维坦(Leviathan)》作者:霍布斯(Thomas Hobbes)分类号:D033/H682 5.《政府论(Two Treatises of Government)作者:洛克(John Locke)分类号:L814 6.《论法的精神(The Spirit of the Laws)》, 作者:孟德斯鸠(Montesquieu)分类号:M7797.《论美国民主(Democracy in America)》, 作者:托克维尔(Alexis de Tocqueville)分类号:T6328.《代议制政府(Considerations on RepresentativeGovernment)》作者:穆勒(Mill)分类号:D033/M6459.《联邦党⼈⽂集(The Federalist Papers)》作者:汉密尔顿(Alexander Hamilton)分类号:H21710.《⾃由秩序原理(The Constitution of Liberty)》作者:哈耶克()分类号:D089/H417经济学:1.《国民财富的性质和原因的研究(An Inquiry into the Nature and Causes of the Wealth of Nations)》, 作者:亚当·斯密(Adam Smith)分类号:S6422.《经济学原理(Principles of Economics)》, 作者:马歇尔(Alfred Marshall)分类号:M3673.《福利经济学(The Economics of Welfare)》, 作者:庇古()分类号:P633 4.《就业、利息与货币的⼀般理论(The General Theory of Employment Interest and Money)》作者:凯恩斯()分类号:K445.《经济发展理论(The Theory of Economic Development)》作者:熊彼特(Schumpeter)分类号:K446.《⼈类⾏为(Human Action: A Treatise on Economics)》, 作者:⽶塞斯(Mises)分类号:M6787.《经济分析的基础(Foundations of Economic Analysis)》作者:萨缪尔森(Samuelson)分类号:《货币数量理论研究(Studies in the Quantity Theory of 
Money)》作者:弗⾥德曼(Friedman)分类号:F8999.《集体选择与社会福利(Collective Choice and Social Welfare)》作者:阿玛蒂亚·森()分类号:F89910.《资本主义经济制度(The Economic Institutions of Capitalism)》作者:威廉姆森(Williamson)分类号:W729社会学:1.《论⾃杀(Suicide: A Study in Sociology)》作者:杜克海姆(Emilc Durkheim)分类号:D9472.《新教伦理与资本主义精神(The Protestant Ethic and the Spirit of Capitalism)》作者:韦伯(Max Weber)分类号:B920/W3753.《货币哲学(The Philosophy of Money)》作者:席美尔(Georg Simmel)分类号:C91-03/S5924.《⼀般社会学论集(A Treatise on General Sociology)》, 作者:帕累托(Vilfredo Pareto)分类号:C91-06/P227 5.《意识形态与乌托邦(Ideology and Utopia)》作者:曼海姆()分类号: M281⼈类学:1.《⾦枝(The Golden Bough)》作者:弗雷泽(James )分类号:B1/F8482.《西太平洋上的航海者(Argonauts of the Western Pacific)》作者:马林诺夫斯基()分类号:M2153.《原始思维(The Savage Mind)》作者:列维-斯特劳斯(Claude Levi-Strauss)分类号:B80/L6644.《原始社会的结构和功能(Structure and Function in Primitive Society)》作者:拉迪克⾥夫-布郎(Brown)分类号:B80/L6645.《种族、语⾔、⽂化(Race, Language and Culture)》作者:鲍斯(Franz Boas)分类号:C95/B662⼼理学:1.《⼼理学原理(The Principles of Psychology)》, 作者:威廉·詹姆⼠(William James)分类号:B84/J272.《⽣理⼼理学原理(Principles of Physiological Psychology)》作者:冯特()分类号:B845/W9653.《梦的解析(The Interpretation of Dreams)》作者:弗洛伊德(Sigmund Freud)分类号:B84-065/F8894.《⼉童智慧的起源(The Origin of Intelligence in the Child)》作者:⽪亚杰(Jean Piaget)分类号:P5795.《科学与⼈类⾏为(Science and Human behavior)》作者:斯⾦纳()分类号:B84-063/S628 6.《原型与集体⽆意识(The Archetypes and the Collective Unconscious)》作者:荣格()分类号:B84-065/J957.《动机与⼈格(Motivation and Personality)》作者:马斯洛()分类号:B84-067/M394法学:1.《古代法(Ancient Law)》作者:梅因()分类号:M2252.《英国法与⽂艺复兴(English Law and the Renaissance)》作者:梅特兰()分类号:M2253.《法理学讲演录(Lectures on Jurisprudence)》, 作者:奥斯丁()分类号:D90/A936 4.《法律的社会学理论(A Sociological Theory of Law)》作者:卢曼()分类号:D90-052/L9265.《法律社会学之基本原理(Fundamental Principles of the Sociology of Law)》作者:埃利希()分类号:D90-052/E33 6.《法律、宪法与⾃由(Law, Legislation and Liberty)》作者:哈耶克()分类号:7.《纯粹法学理论(Pure Theory of Law)》作者:凯尔森()分类号:D90/K298.《法律之概念(The Concept of Law)》作者:哈特()分类号:D90/K299.《法律之帝国(Law’s Empire)》作者:德沃⾦()分类号:D90/D98910.《法律的经济学分析(Economic Analysis of Law)》作者:波斯纳(Richard )分类号:D90-059/P855历史学:1.《历史(The 
Histories)》作者:希罗多德(Herodotus)分类号:K125/H5592.《伯罗奔尼撒战争史(The Peloponnesian War)》作者:修昔底德(Thucydides)分类号:K125/T5323.《编年史(The Annals of Imperial Rome)》作者:塔西陀(Tacitus)分类号:K126/T118 4.《上帝之城(The City of God)》, 作者:圣·奥古斯丁()分类号:B972/A923 5.《历史学:理论和实践(History: its Theory and Practice)》作者:克罗齐(Benedetto Croce)分类号:K01/C9376.《历史的观念(The Idea of History)》作者:柯林伍德()分类号:K01/C9377.《腓⼒普⼆世时代的地中海与地中海世界(The Mediterranean and the Mediterranean World in the Age of Philip II)》, 作者:布罗代尔()分类号:K503/B8258.《历史研究(A Study of History)》, 作者:汤因⽐()分类号:K01/T756商业经典书⽬:In Search of Excellence: Lessons from America's Best-Run Companies《追求卓越》:美国优秀企业的成功秘诀Built to Last: Successful Habits of Visionary Companies《基业长青》/《公司长寿秘诀》:⾼瞻远瞩公司长⽣不⽼的秘诀Reengineering the Corporation: A Manifesto for Business Revolution《公司再造》/《企业重组》:企业管理⾰命的宣⾔Barbarians at the Gate: The Fall of RJR Nabisco《⼤收购》/《门⼝的野蛮⼈》:华尔街股市兼并风潮Competitive Advantage: Creating and Sustaining Superior Performance《竞争优势》:寻找成功的⽀点The Tipping Point: How Little Things Can Make a Big Difference《引爆流⾏》:改变思维的佳作Crossing the Chasm: Marketing and Selling Technology Products to Mainstream Customers《跨越鸿沟》:⾼科技创新成功之道The House of Morgan《摩根财团》:美国⼀代银⾏王朝和现代⾦融业的崛起The Six Sigma Way《6σ管理法》:追求卓越的阶梯Seven Habits of Highly Effective People: Powerful Lessons in Personal Change 《强⼈的七种习性》:让你成为新强⼈Liar's Poker《说谎者的牌术》/《骗⼦游戏》:⼀幅扭曲的罪恶图景The Innovator's Dilemma: When New Technologies Cause Great Firms to Fail《创新者的窘境》:⼤公司⾯对突破性技术时引发的失败Japan Inc.《Japan Inc.》:漫画⽇本经济Den of Thieves《股市⼤盗》/《贼巢》:华尔街最⼤内幕交易案始末The Essential Drucker《德鲁克精华》:⼤师中的⼤师精华中的精华Competing for the Future《竞争⼤未来》The Buffett Way: Investment Strategies of the World's Greatest Investor《沃伦?巴菲特之路》/《快餐式投资》:投资之王的理念与策略Jack: Straight from the Gut《杰克?韦尔奇⾃传》:⼀部CEO的圣经Good to Great: Why Some Companies Make the Leap... 
and Others Don't 《从优秀到卓越》:迈向成功的巅峰The New New Thing: A Silicon Valley Story《新新事物:硅⾕的故事》经济学经典书⽬:第1部《经济表》弗朗斯⽡·魁奈(法国1694—1774)第2部《国富论》亚当·斯密(英国1723—1790)第3部《⼈⼝原理》托马斯·罗伯特·马尔萨斯(英国1766—1834)第4部《政治经济学概论》让·巴蒂斯特·萨伊(法国1767—1832)第5部《政治经济学及赋税原理》⼤卫·李嘉图(英国1772—1823)第6部《政治经济学新原理》西蒙·德·西斯蒙第(法国1773—1842)第7部《政治经济学的国民体系》弗⾥德利希·李斯特(德国1789—1846)第8部《政治经济学原理》约翰·斯图亚特·穆勒(英国1806—1873)第9部《资本论》卡尔·马克思(德国1818—1883)第10部《政治经济学理论》威廉·斯坦利·杰⽂斯(英国1835—1882)第11部《国民经济学原理》卡尔·门格尔(奥地利1840—1921)第12部《纯粹政治经济学纲要》⾥昂·⽡尔拉斯(法国1834—1910)第13部《资本与利息》欧根·冯·庞巴维克(奥地利185l⼀1914)第14部《经济学原理》阿弗⾥德·马歇尔(英国1842—1924)第15部《利息与价格》克努特·维克塞尔(瑞典1851—1926)第16部《财富的分配》约翰·贝茨·克拉克(美国1847—1938)第17部《有闲阶级论》托尔斯坦·本德·凡勃伦(美国1857—1929)第18部《经济发展理论》约瑟夫·阿罗斯·熊彼特(奥地利1883—1950)第19部《福利经济学》阿瑟·赛西尔·庇古(英国1877—1959)第20部《不完全竞争经济学》琼·罗宾逊(英国1903—1983)第21部《就业、利息和货币通论》约翰·梅纳德·凯恩斯(英国1883—1946)第22部《价值与资本》约翰·理查德·希克斯(英国1904—1989)第23部《通往奴役之路》哈耶克(奥地利1899—1992)第24部《经济学》保罗·萨缪尔森(美国1915⼀)第25部《丰裕社会》约翰·肯尼斯·加尔布雷斯(美国1908—)第26部《经济成长的阶段》沃尔特·罗斯托(美国1916—)第27部《⼈⼒资本投资》西奥多·威廉·舒尔茨(美国1902—1998)第28部《资本上义与⾃由》⽶尔顿·弗⾥德曼(美国1912—)第29部《经济学》约瑟夫·斯蒂格利茨(美国1943—)第30部《经济学原理》格⾥⾼利·曼昆(美国1958—)第31部《商道》谋略经典:第1部《道德经》第2部《⿁⾕⼦》第3部《管⼦》第4部《论语》第5部《孙⼦兵法》第6部《荀⼦》第7部《韩⾮⼦》第8部《战国策》第9部《⼈物志》第10部《贞观政要》第11部《反经》第12部《资治通鉴》第13部《三国演义》第14部《菜根谭》第15部《智囊》第16部《三⼗六计》第17部《曾国藩家书》第18部《厚⿊学》第19部《君主论》第20部《战争论》管理类经典:第1部《科学管理原理》弗雷德⾥克·温斯洛·泰罗(美国1856—1915) 第2部《社会组织和经济组织理论》马克思·韦伯(德国1864—1920) 第3部《经理⼈员的职能》切斯特·巴纳德(美国1886—1961) 第4部《⼯业管理和⼀般管理》亨利·法约尔(法国1841-1925) 第5部《⼯业⽂明的社会问题》埃尔顿·梅奥(美国1880—1949) 第6部《企业中⼈的⽅⾯》道格拉斯·麦格雷⼽(美国1906—1964) 第7部《个性与组织》克⾥斯·阿吉⾥斯(美国1923—) 第8部《如何选样领导模式》罗伯特·坦南鲍姆(美国1915—2003) 第9部《管理决策新科学》赫伯特·西蒙(美国1916—2001) 第10部《伟⼤的组织者》欧内斯特·戴尔(美国1914—) 第11部《管理的新模式》伦西斯·利克特(美国1903—1981) 第12部《营销管理》菲利普·科特勒(美国1931—) 第13部《让⼯作适合管理者》弗雷德·菲德勒(美国1922—) 第14部《组织效能评价标准》斯坦利·E·西肖尔(美国1915—1999) 第15部《再论如何激励员⼯》弗雷德⾥克·赫茨伯格(美国1923—2000) 第16部《组织与管理系统⽅法与权变⽅法》弗⾥蒙特·卡斯特(美国1924—) 第17部《经理⼯作的性质》亨利·明茨伯格(加拿⼤1939—) 第18部《管理任务、责任、实践》彼得·杜拉克(美国1909—) 第19部《再论管理理论的丛林》哈罗德·孔茨(美国1908—1984) 第20部《杰克·韦尔奇⾃传》杰克·韦尔奇(美国1935—) 第21部《竞争战略》迈克尔·波特(美国1947—) 第22部《Z理论》威廉·⼤内(美国1943—) 第23部《转危为安》爱德华兹·戴明(美国1900—1993) 
第24部《总经理》约翰·科特(美国1947—) 第25部《追求卓越》托马斯·彼得斯(美国1942—) 第26部《领导者成功谋略》沃伦·本尼斯(美国1925—) 第27部《巨⼈学舞》罗莎贝丝·摩丝·坎特(美国1943—) 第28部《第五项修炼》彼得·圣吉(美国1947—) 第29部《企业再造》迈克尔·汉默(美国1948—) 第30部《基业长青》詹姆斯·柯林斯(美国1958—) 第31部《杜拉克论管理》第32部《⾼效能⼈⼠的七个习惯》。

Count Lucanor

Prólogo

En el nombre de Dios. Amén.* Entre las maravillosas cosas que Dios ha hecho, está la cara del hombre. No hay dos caras similares en el mundo. Asimismo,º no hay dos hombres que tengan la misma voluntadº o inclinación. Sin embargo, hay una cosa en la que los hombres sí son similares, y es que aprenden mejor lo que más les interesa. De esta manera, el que quiera enseñar a otro alguna cosa deberá presentarla de una manera que le sea agradable para el que la aprende.

Por esto yo, don Juan, hijo del príncipe don Manuel, escribí este libro con las palabras más hermosas que pude. Esto hice, siguiendo el ejemplo de los médicos, quienes ponen dulceº a las medicinas para que el dulzor arrastreº consigo la medicina que beneficia. Así, el lector se deleitará conº sus enseñanzas, y aunque no quiera, aprenderá su instrucción.

Si por acasoº los lectores encuentran algo mal expresado, no echen la culpaº sino a la falta de entendimiento de don Juan Manuel; y si por el contrario encuentran algo provechoso, agradézcanleº a Dios, el inspirador de los buenos dichos y las buenas obras.

Y pues, ya terminado el prólogo, de aquí en adelanteº comienzan los cuentos. Hay que suponer que un gran señor, el conde Lucanor, habla con Patronio, su consejero.º

asimismo: likewise, in the same way
voluntad: will, wish
ponen dulce: add sugar, sweeten
arrastre: carry along, bring with it
se deleitará con: will delight in, will enjoy
por acaso: by chance
echen la culpa: blame
agradézcanle: give thanks to
de... adelante: from here on
su consejero: his adviser

* En el nombre de Dios. Amén: In the name of God, amen.

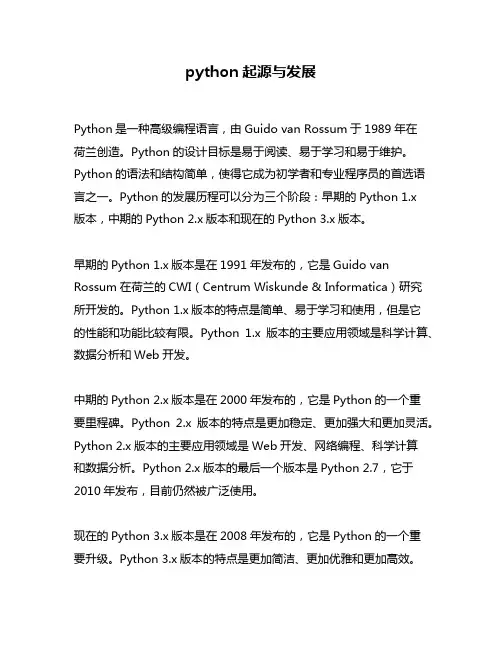

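The Origin and Development of Python

Python is a high-level programming language that Guido van Rossum began developing in 1989 in the Netherlands. Its design goals are to be easy to read, easy to learn, and easy to maintain. Its simple syntax and structure have made it a popular choice among both beginners and professional programmers. Python's history can be divided into three stages: the early Python 1.x releases, the Python 2.x releases, and the current Python 3.x releases.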

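The first public release, Python 0.9.0, appeared in 1991, and Python 1.0 followed in January 1994; Guido van Rossum developed both at CWI (Centrum Wiskunde & Informatica), a research institute in the Netherlands. The 1.x releases were simple and easy to learn and use, but their performance and feature set were comparatively limited. Their main application areas were scientific computing, data analysis, and web development.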

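Python 2.0 was released in 2000 and marked an important milestone for the language. The 2.x releases were more stable, more powerful, and more flexible. Their main application areas were web development, network programming, scientific computing, and data analysis. The final 2.x release was Python 2.7, published in 2010; it reached end of life on 1 January 2020, although it remains in use in legacy code.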

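Python 3.0 was released in 2008 as a major, backward-incompatible upgrade of the language. The 3.x releases aim to be more concise, more elegant, and more efficient. Their main application areas are web development, data analysis, artificial intelligence, and machine learning. At the time of writing, the latest 3.x release was Python 3.9, published in October 2020.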

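Many factors have shaped Python's development, the most important being the support of its open-source community. That community is large and made up of programmers from around the world. It contributes technical support and code to the language itself and supplies a rich set of resources and tools for applications built with Python. It is one of the keys to Python's success.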

逢坂大河 in English

Onodera Oogawa is a captivating figure whose life story has captured the imagination of many. Born into a family with a rich cultural heritage, Onodera's journey has been one of resilience, determination, and an unwavering pursuit of personal growth.

From a young age, Onodera demonstrated a keen intellect and a thirst for knowledge that set them apart from their peers. Encouraged by their supportive family, Onodera immersed themselves in a wide range of academic disciplines, excelling in subjects such as literature, history, and philosophy. This insatiable curiosity would later shape the course of their life, leading them down a path of self-discovery and a deep appreciation for the complexities of the human experience.

As Onodera came of age, they found themselves drawn to the world of creative expression. Inspired by the works of literary giants and the timeless wisdom of ancient philosophers, Onodera began to hone their own writing skills, crafting poetic verses and insightful essays that captivated all who encountered them. This passion for the written word would become a defining aspect of their identity, a means through which they could share their unique perspective on the human condition.

Onodera's intellectual prowess and artistic talents did not go unnoticed, and they soon found themselves at the forefront of a burgeoning literary movement. Their works were praised for their depth of insight, their lyrical beauty, and their ability to challenge the status quo. Onodera's name became synonymous with a new generation of thinkers and creatives who were redefining the boundaries of what was possible in the realm of written expression.

But Onodera's journey was not without its challenges. As they navigated the complexities of the literary world, they also grappled with the personal demons that lurked within. Onodera's introspective nature and their deep sensitivity to the world around them often led them to confront the darker aspects of the human experience: the pain, the sorrow, and the existential questions that plague the human condition.

Yet it was precisely these struggles that fueled Onodera's creative fire, inspiring them to delve deeper into the human psyche and to explore the universal truths that bind us all together. Their writing became a conduit for their own personal growth, a means of processing the complexities of life and finding solace in the power of language.

As Onodera's reputation grew, so too did their influence. They became a beacon of inspiration for aspiring writers and thinkers, their words resonating with audiences across the globe. Their works were translated into multiple languages, and their name became synonymous with a new era of literary excellence.

But Onodera's impact extended far beyond the realm of writing. They were also a passionate advocate for social justice, using their platform to shed light on the struggles of marginalized communities and to champion the causes of equality and human rights. Their unwavering commitment to these principles earned them the respect and admiration of countless individuals who saw in Onodera a kindred spirit, a visionary who was not afraid to challenge the status quo and to fight for a more just and equitable world.

Throughout their life, Onodera continued to push the boundaries of what was possible, constantly reinventing themselves and exploring new avenues of creative expression. Whether they were penning a poetic masterpiece or delivering a rousing speech, Onodera's words always carried a profound weight, a sense of purpose that transcended the confines of the page or the stage.

And as the years passed, Onodera's legacy only grew stronger. Their works were studied in classrooms around the world, inspiring new generations of thinkers and writers to follow in their footsteps. Their name became a testament to the power of the written word to transform lives and to shape the course of human history.

Today, Onodera Oogawa's legacy lives on, a shining example of what can be achieved through a relentless pursuit of knowledge, a deep commitment to social justice, and an unwavering belief in the transformative power of the written word. Their story is one of inspiration, of resilience, and of the enduring human spirit, a testament to the remarkable potential that lies within each and every one of us.

Europe, with its rich and diverse history, has been the cradle of many significant events and developments that have shaped the world as we know it today. Here are some key characteristics of European history that are worth noting:

1. Ancient Civilizations: Europe's history is marked by the rise and fall of various ancient civilizations, such as the Greeks and Romans, who laid the foundations for Western philosophy, science, and political systems.

2. Feudalism: During the Middle Ages, Europe was predominantly feudal, with a social hierarchy that included kings, nobles, knights, and serfs. This system was based on land ownership and the exchange of protection for labor.

3. The Renaissance: The period from the 14th to the 17th century marked a cultural rebirth in Europe, known as the Renaissance. This era saw a revival in art, science, and learning, with figures like Leonardo da Vinci and Michelangelo making significant contributions.

4. The Age of Exploration: Europeans began to explore the world in search of new trade routes and territories, leading to Christopher Columbus's voyages to the Americas and the establishment of colonies around the globe.

5. Religious Reformation: The 16th century was a time of religious upheaval, with the Protestant Reformation challenging the authority of the Catholic Church and leading to the formation of various Protestant denominations.

6. The Enlightenment: The 18th century was characterized by the Enlightenment, an intellectual movement that emphasized reason, individualism, and skepticism of traditional authority, and that influenced the development of modern democracy and human rights.

7. Industrial Revolution: Europe was the birthplace of the Industrial Revolution in the 18th and 19th centuries, which transformed economies and societies through the introduction of machinery, mass production, and urbanization.

8. Napoleonic Wars: During the Napoleonic Wars (1803-1815), France, under the leadership of Napoleon Bonaparte, sought to dominate Europe, leading to a series of conflicts that reshaped the continent's political landscape.

9. World Wars: The 20th century was marked by two devastating global conflicts, World War I and World War II, both of which originated in Europe and had profound impacts on the world order.

10. European Union: In the aftermath of World War II, Europe sought to prevent future conflicts by promoting cooperation and integration among its nations. The European Union, established in 1993, is a political and economic union that aims to foster peace and stability.

11. Cultural Diversity: Europe is known for its cultural diversity, with each country and region having its own languages, traditions, and customs, which have contributed to a rich tapestry of European identity.

12. Scientific and Technological Advancements: Europe has been at the forefront of many scientific and technological advancements, from the development of the printing press to the discovery of the structure of DNA.

13. Colonialism and Imperialism: European powers played a significant role in the colonization of other parts of the world, which had lasting effects on global politics and economies.

14. The Cold War: The division of Europe into Eastern and Western blocs during the Cold War (1947-1991) was a defining feature of the continent's 20th-century history, with the Iron Curtain symbolizing the ideological and physical separation.

15. Post-War Recovery and Integration: Europe's post-World War II recovery and the process of integration have been remarkable, with the continent becoming a leader in social welfare, environmental protection, and human rights.

These characteristics only scratch the surface of Europe's complex and multifaceted history, which continues to evolve and influence the world in myriad ways.

Observation is a fundamental skill across various disciplines, from science to social studies, and it plays a crucial role in the learning process. In this essay, I will delve into the significance of observation, its application in different fields, and the impact it has on our understanding of the world.

Observation is the act of carefully watching and noting the details of a situation, person, or event. It is more than just looking; it involves a deeper level of engagement in which one must be attentive to the subtleties and nuances of what is being observed. This skill is essential for gathering information and making informed decisions.

In the natural sciences, observation is the cornerstone of discovery. For instance, Charles Darwin's meticulous observations of the Galapagos finches contributed to his theory of natural selection. Similarly, astronomers rely on observation to study celestial bodies and phenomena, which has led to our understanding of the universe's vastness and complexity.

In the social sciences, observation is vital for understanding human behavior and societal patterns. Sociologists and anthropologists often immerse themselves in different cultures to observe and document social norms, rituals, and interactions. This approach helps them draw conclusions about the structure and dynamics of societies.

Moreover, observation is an indispensable tool in education. Teachers use observation to assess students' understanding, engagement, and learning styles. This allows them to tailor their teaching methods to the individual needs of their students, fostering a more inclusive and effective learning environment.

Observation also plays a significant role in the arts. Artists observe the world around them to capture its essence in their work. Whether it's a painter capturing the play of light on a landscape or a writer observing human emotions to create a compelling narrative, the act of observation is central to the creative process.

In addition to its practical applications, observation enriches our personal lives. It enhances our appreciation of the world's beauty, from the intricate details of a butterfly's wing to the awe-inspiring sight of a starry night sky. By observing, we become more attuned to the world around us and develop a deeper connection with it.

However, effective observation requires practice and skill. One must learn to be patient, as observations can take time to yield meaningful insights. It also requires an open mind, as preconceived notions can cloud one's perception and lead to inaccurate conclusions.

In conclusion, observation is a multifaceted skill that is crucial in many aspects of life. It is the foundation of knowledge and understanding, enabling us to explore the world around us and make informed decisions. By cultivating the habit of observation, we can deepen our appreciation of the world and enrich our lives.