CircularTrip An Effective Algorithm for Continuous kNN Queries

- 格式:pdf

- 大小:468.14 KB

- 文档页数:7

基于Spark的高维K近邻连接算法纪佳琪;郑永基【摘要】为解决数据量日益增长和数据维度不断增高,通过单机运行K近邻连接算法得出结果时间过长无法满足时效要求的问题,提出一种基于Spark的使用位置敏感哈希函数对数据预处理后再进行查询的算法.利用位置敏感哈希函数对训练集数据降维并进行分桶索引,进行近邻查找,有效利用Spark基于内存的高性能并行计算能力.实验结果表明,该算法对高维大数据具有较高的准确性和查询效率.【期刊名称】《计算机工程与设计》【年(卷),期】2018(039)008【总页数】6页(P2544-2549)【关键词】K近邻连接;高维;大数据;哈希函数;并行计算【作者】纪佳琪;郑永基【作者单位】河北民族师范学院信息中心,河北承德067000;圆光大学计算机工学院,韩国益山54538【正文语种】中文【中图分类】TP3010 引言K近邻连接(k nearest neighbor join),简称KNN连接,是一种简单有效的惰性数据挖掘算法[1],可广泛用于相似图像匹配[2]、相似声音匹配[3]、相似文本匹配[4]等领域。

为了解决KNN连接计算量过大问题,许多学者提出了近似KNN连接算法[5,6]。

然而,随着应用数据量急剧增加、数据维度不断增高,使用单机运算无法在可以接受的时间内计算出结果。

为处理大规模高维度数据,使用Hadoop[7]分布式计算是一种有效的解决方案,因此很多新算法也相继提出[8-10]。

文献[11]中提出了一种称为H-BNLJ的KNN连接精确算法,该算法的主要思想是首先把数据集R和S分别划分成子集,如R={R1,R2}和S={S1,S2},然后R中的每一个子集和S中的每一个子集结合形成新集合{R1S1,R1S2,R2S1,R2S2},最后发送新集合中的元素至不同的计算结点进行距离计算后再进行结果的合并,但该方法会产生大量的网络数据传输,当数据量增加或数据维度增高时,性能下降明显。

一个高效的连续k近邻查询改进算法

孙圣力;林硕

【期刊名称】《计算机研究与发展》

【年(卷),期】2013(050)0z1

【摘要】连续k近邻查询是空间数据库一直以来的热点问题.但大多数研究成果都是在欧式空间上的.IMA/GMA算法是少有的几种基于道路网的连续k近邻查询算法之一,同时也是比较优秀的算法.但是IMA算法仍然存在不足之处.在针对IMA算法的不足进行充分讨论后,提出了内结构迭代变更法和数据对象树,分别弥补了IMA 在数据更新频繁和扩展树生成时表现出的性能缺陷.内结构迭代变更法在数据更新后对扩展树内结构进行快速调整,避免了对树的大规模剪枝以提高扩展树的利用率,从而提高在数据频繁更新时的性能.数据对象树用于快速获取子树上所有数据对象的有序集合,以辅助新查询利用已有查询的扩展子树结构.理论分析和仿真实验都证明了改进的IMA算法比原IMA算法更能适应多种情况,性能表现更为优异.

【总页数】10页(P80-89)

【作者】孙圣力;林硕

【作者单位】北京大学软件与微电子学院北京 102600;北京大学软微学院无锡基地江苏无锡 214125

【正文语种】中文

【中图分类】TP392

【相关文献】

1.高度动态环境下移动对象连续K近邻查询算法 [J], 牛剑光;陈荦;赵亮;谭洁

2.不确定图上的高效top-k近邻查询处理算法 [J], 张海杰;姜守旭;邹兆年

3.基于道路网的连续k近邻查询算法 [J], 刘德高;李晓宇

4.MOQ-QR:基于QR-树的连续K近邻查询算法研究 [J], 邹永贵;宋强;杨富平

5.面向不确定移动对象的连续K近邻查询算法 [J], 于彦伟;齐建鹏;宋鹏;张永刚因版权原因,仅展示原文概要,查看原文内容请购买。

第27卷第11期2021年11月计算机集成制造系统Vol.27No.11 Computer Integrated Manufacturing Systems Nov.2021DOI:10.13196/j.cims.2021.11.016基于Kriging模型的自适应多阶段并行代理优化算法乐春宇,马义中+(南京理工大学经济管理学院,江苏南京210094)摘要:为了充分利用计算资源,减少迭代次数,提出一种可以批量加点的代理优化算法。

该算法分别采用期望改进准则和WB2(Watson and Barnes)准则探索存在的最优解并开发已存在最优解的区域,利用可行性概率和多目标优化框架刻画约束边界。

在探索和开发阶段,设计了两种对应的多点填充算法,并根据新样本点和已知样本点的距离关系,设计了两个阶段的自适应切换策略。

通过3个不同类型算例和一个工程实例验证算法性能,结果表明,该算法收敛更快,其结果具有较好的精确性和稳健性。

关键词:Kriging模型;代理优化;加点准则;可行性概率;多点填充中图分类号:O212.6文献标识码:AParallel surrogate-based optimization algorithm based on Kriging model usingadaptive multi-phases strategyYUE Chunyu,MA Yizhong+(School o£Economics and Management,Nanjing University of Science and Technology,Nanjing210094,China) Abstract:To make full use of computing resources and reduce the number of iterations,a surrogate-based optimization algorithm which could add batch points was proposed.To explore the optimum solution and to exploit its area, the expected improvement and the WB2criterion were used correspondingly.The constraint boundary was characterized by using the probability of feasibility and the multi-objective optimization framework.Two corresponding multi-points infilling algorithms were designed in the exploration and exploitation phases and an adaptive switching strategy for this two phases was designed according to the distance between new sample points and known sample points.The performance of the algorithm was verified by three different types of numerical and one engineering benchmarks.The results showed that the proposed algorithm was more efficient in convergence and the solution was more precise and robust.Keywords:Kriging model;surrogate-based optimization;infill sampling criteria;probabil让y of feasibility;multipoints infill0引言现代工程优化设计中,常采用高精度仿真模型获取数据,如有限元分析和流体动力学等E,如何在优化过程中尽可能少地调用高精度仿真模型,以提高优化效率,显得尤为重要。

机器学习之KNN算法原理及Python实现⽅法详解本⽂实例讲述了机器学习之KNN算法原理及Python实现⽅法。

分享给⼤家供⼤家参考,具体如下:⽂中代码出⾃《机器学习实战》CH02,可参考本站:KNN算法介绍KNN是⼀种监督学习算法,通过计算新数据与训练数据特征值之间的距离,然后选取K(K>=1)个距离最近的邻居进⾏分类判(投票法)或者回归。

若K=1,新数据被简单分配给其近邻的类。

KNN算法实现过程(1)选择⼀种距离计算⽅式, 通过数据所有的特征计算新数据与已知类别数据集中的数据点的距离;(2)按照距离递增次序进⾏排序,选取与当前距离最⼩的k个点;(3)对于离散分类,返回k个点出现频率最多的类别作预测分类;对于回归则返回k个点的加权值作为预测值;算法关键(1)数据的所有特征都要做可⽐较的量化若是数据特征中存在⾮数值的类型,必须采取⼿段将其量化为数值。

例如样本特征中包含颜⾊,可通过将颜⾊转换为灰度值来实现距离计算。

(2)样本特征要做归⼀化处理样本有多个参数,每⼀个参数都有⾃⼰的定义域和取值范围,他们对距离计算的影响不⼀样,如取值较⼤的影响⼒会盖过取值较⼩的参数。

所以样本参数必须做⼀些scale处理,最简单的⽅式就是所有特征的数值都采取归⼀化处置。

(3)需要⼀个距离函数以计算两个样本之间的距离距离的定义:欧⽒距离、余弦距离、汉明距离、曼哈顿距离等,⼀般选欧⽒距离作为距离度量,但是这是只适⽤于连续变量。

在⽂本分类这种⾮连续变量情况下,汉明距离可以⽤来作为度量。

通常情况下,如果运⽤⼀些特殊的算法来计算度量的话,K 近邻分类精度可显著提⾼,如运⽤⼤边缘最近邻法或者近邻成分分析法。

(4)确定K的值K值选的太⼤易引起⽋拟合,太⼩容易过拟合。

交叉验证确定K值。

KNN分类分类算法常采⽤多数表决决定。

⼀个缺点是出现频率较多的样本将会主导测试点的预测结果。

解决这个缺点的⽅法之⼀是在进⾏分类时将K个邻居到测试点的距离考虑进去。

寻找数据密集区域的算法数据密集区域是指在一个数据集中,某些区域包含了大量的数据点。

寻找数据密集区域的算法在数据挖掘和机器学习领域中具有重要意义,它能够帮助我们发现数据中的模式、聚类、异常点等信息。

在数据挖掘领域,目前存在许多寻找数据密集区域的算法。

其中最著名的算法之一是K-means聚类算法。

这个算法通过迭代地将数据点分配到离其最近的聚类中心,从而实现数据的聚类。

K-means算法将数据集划分为K个簇,其中每个簇都由一个聚类中心代表。

通过计算数据点与聚类中心之间的距离,可以将数据点划分到合适的簇中,从而形成数据密集的区域。

除了K-means算法,DBSCAN算法也是一种常用的寻找数据密集区域的算法。

DBSCAN算法是一种基于密度的聚类算法,它能够自动发现具有足够高密度的数据点所形成的区域。

该算法将数据点分为核心对象、边界对象和噪声点三个类别,从而区分出数据点的稠密程度。

通过基于密度的聚类过程,DBSCAN能够找到数据中的密集区域,并识别出异常点。

另一个常用的寻找数据密集区域的算法是OPTICS算法。

OPTICS算法是在DBSCAN算法的基础上进行改进的一种算法。

与DBSCAN算法不同的是,OPTICS算法通过计算数据点的可达距离,将数据点排序成一个较为连续的序列。

根据这个序列,我们可以从中找到密集区域。

通过调整算法中的参数,OPTICS算法能够适应不同密集度的数据集,从而更好地寻找数据中的密集区域。

除了上述算法之外,还有许多其他的寻找数据密集区域的算法,如Mean Shift、Gaussian Mixture Models等。

这些算法都有各自的优点和适用范围。

因此,在实际应用中,我们可以根据具体的场景和数据集的特点来选择合适的算法。

综上所述,寻找数据密集区域的算法在数据挖掘和机器学习领域中具有重要意义。

通过这些算法,我们能够发现数据中的模式、聚类和异常点等信息。

在实际应用中,我们可以根据具体的需求和数据特点选择合适的算法,并通过调参等方法来获取更好的结果。

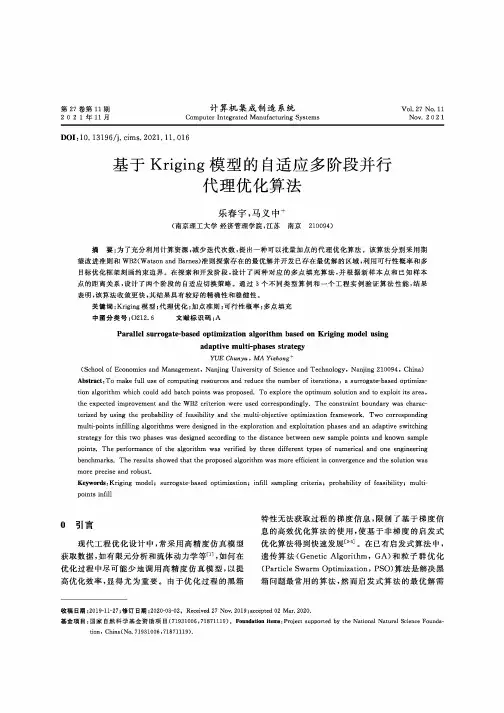

CircularTrip:An Effective Algorithm forContinuous k NN QueriesMuhammad Aamir Cheema,Yidong Yuan,and Xuemin LinThe University of New South Wales,Australia{macheema,yyidong,lxue}@.auAbstract.Continuously monitoring k NN queries in a highly dynamicenvironment has become a necessity to many recent location-based ap-plications.In this paper,we study the problem of continuous k NN queryon the dataset with an in-memory grid index.Wefirst present a noveldata access method–CircularTrip.Then,an efficient CircularTrip-basedcontinuous k NN algorithm is pared with the existing al-gorithms,our algorithm is both space and time efficient.1IntroductionContinuously monitoring k nearest neighbors over moving data objects has be-come a necessity to many recent location-based applications.This is mainly due to the increasing availability of wireless networks and inexpensive mobile devices. Consequently,a number of techniques[1,2,3,4,5,6,7,8,9]have been developed to process continuous k NN queries.Different from a conventional k NN query,continuous k NN queries are issued once and run continuously to generate results in real-time along with the up-dates of the underlying datasets.Therefore,it is crucial to develop in-memory techniques to continuously process k NN queries due to frequent location updates of data points and query points.In many applications[6,7,9],it is also crucial to support the processing of a number of continuous k NN queries simultaneously; consequently,scalability is a key issue.To address the scalability,in this paper we focus on two issues:(1)minimiza-tion of computation costs;and(2)minimization of the memory requirements. We study continuous k NN queries against the data points that move around in an arbitrary way.To effectively monitor k NN queries,we develop a novel data access method–pared with the most advanced algorithm, CPM[9],our CircularTrip-based continuous k NN algorithm has the following advantages.(1)time efficient:although both algorithms access the minimum number of cells for initial computation,less cells are accessed during continuous monitoring in our algorithm.(2)space efficient:our algorithm does not employ any book-keeping information used in CPM(i.e.,visit list and search heap for each query).Our experimental study demonstrates that CircularTrip-based con-tinuous k NN algorithm is2to4times faster than CPM,while its memory usage is only50%to85%of CPM.R.Kotagiri et al.(Eds.):DASFAA2007,LNCS4443,pp.863–869,2007.c Springer-Verlag Berlin Heidelberg2007864M.A.Cheema,Y.Yuan,and X.LinOur contributions in this paper can be summarized as follows:(1)We developa novel data access method–CircularTrip which returns the cells intersectinga given circle with the minimum number of cells examined;(2)Based on Circu-larTrip,a time-and space-efficient continuous k NN algorithm is developed.The rest of the paper is organized as follows:Section2gives the problem defi-nition and presents the related work.We present our continuous k NN algorithm in Section3.Experimental study and remarks are reported in Section4.2Background InformationSuppose that P is a set of2D1moving data points and data points changetheir locations frequently in an unpredictable fashion.Each data point p∈P isrepresented by(p.x,p.y).At each time stamp,the move of a data point p from p pre to p cur is recorded as a location update p.id,p pre,p cur and the moves of query points are recorded similarly.The problem of continuous k NN query isformally defined below.Continuous k NN Query.Given a set of moving data points,a moving querypoint q,and an integer k,the continuous k NN query is tofind k closest datapoints to q at each time stamp.Grid Index.In this paper,we assume that the dataset is indexed by an in-memory grid index which evenly partitions the space into cells.The extent ofeach cell on each dimension isδ.Cell c[i,j]indicates the cell at column i and row j and the lower-left corner cell is c[0,0].Clearly,point p falls into the cell c[ p.x/δ , p.y/δ ].In the grid index,each cell is associated with an object list and an influence list. Object list contains all the data points in this cell.Influence list of cell c maintains the references of all the queries q such that mindist(c,q)≤q.dist k where q.dist k is the distance of k th nearest neighbor from q.Specially,mindist(c q,q)=0 where c q is the cell containing q.Note that both object list and influence list are implemented as hash tables so that lookup,insertion,update,and deletion of entries take constant time.Accessing and encountering are two basic operations on cells.Specifically,accessing a cell is to evaluate all data points in this cells against queries and encountering a cell only computes its minimum distance to queries.Clearly,cost of encountering a cell is neglected when compared with accessing a cell.SEA-CNN[6],YPK-CNN[7],and CPM[9]are the most related existing work to the study in this paper.Due to space limitation,we omit the details of these techniques here.Interested readers canfind them in[6,7,9],respectively.3Continuous k NN AlgorithmOur CircularTrip-based continuous k NN algorithm consists of two phases.In phase1,the initial results of each new continuous k NN query is computed.Then, 1In this paper,we focus on2D space only.But the proposed techniques can be applied to higher dimensional space immediately.CircularTrip:An Effective Algorithm for Continuous k NN Queries865+Fig.1.An NN Query qcc uc r upper-leftupper-right lower-left lower-rightc start Fig.2.CircularTripthe results are incrementally updated by continuous monitoring module at each time stamp upon the moves of query points and data points (i.e.,phase 2).Both phases take advantages of CircularTrip algorithm.Section 3.1and Section 3.2present phase 1and phase 2,respectively.3.1Initial k NN ComputationThe basic idea of k NN computation algorithm is to access the cells around query point q round by round.A round C i contains all the cells that intersect the cir-cle of radius r i =r 0+iδcentered at q .Formally,C i ={∀c |mindist (c,q )<r i ≤maxdist (c,q )}.r 0is the the first circle’s radius.Obviously,r 0is at most maxdist (c q ,q );otherwise cell c q will not be accessed by the algorithm.Examples of round are shown as the shaded cells in Fig.1.In each round,the algorithm accesses the cells in ascending order of their mindist (c,q ).The algorithm termi-nates when the next cell to be accessed has mindist (c,q )≥q.dist k .The following theorem proves the correctness and optimality of this algorithm.Lemma 1.In a grid consisting of cells with size δ×δ,given a cell c and a querypoint q where c does not contain q ,δ≤maxdist (c,q )−mindist (c,q )≤√2δ.Theorem 1.Given a query q ,in our initial k NN algorithm,the minimal set of cells are all accessed and only these cells are accessed. According to Lemma 1,a cell is intersected by at most two consecutive circles (e.g.,the dark shaded cells in Fig.1).Although these cells are encountered twice during k NN computation (i.e.,these cells appear in two rounds),they are accessed once only.This is because for a query q (1)our k NN algorithm only accesses the cells where q is not in their influence lists;and (2)q will be inserted into its influence list after a cell is processed.In fact,Theorem 2proves the upper bound of the total number of times the cells are encountered in our algorithm.Theorem 2.In k NN algorithm,the total number of times the cells are encoun-tered is at most 1.27times of the number cells in the minimum set of cells. The detailed k NN computation algorithm is shown in Algorithm 1.We use the following example to present its details.866M.A.Cheema,Y.Yuan,and X.LinputeNN(G,q,k)Input:G:the grid index;q:query point;k:an integer;Output:the k NN of q;1:q.dist k:=∞;q.kNN:=∅;H:=∅;r:=r0:=maxdist(c q,q);2:insert the cells returned by CircularTrip(G,q,r)into H;3:while H=∅and mindist(e H,q)<q.dist k do4:insert q into the influence list of e H;5:∀p∈e H,compute dist(p,q)and update q.dist k and q.kNN;6:remove e H from H;7:if H=∅and r<q.dist k then8:r:=min{r+δ,q.dist k};9:cells C:=CircularTrip(G,q,r);10:∀c∈C,insert c into H if q∈the influence list of c;11:return q.kNN;Example1.Fig.1illustrates a concrete example of an NN query.As no data point is found in thefirst round,the algorithm continues to process the cells in the next round with radius(r0+δ).In this round,p1is found and q.dist k is updated to be dist(p1,q).Then,a third round with radius q.dist k(as dist(p1,q)<r0+2δ) is processed because the previous radius is smaller than q.dist k.In round3, q.kNN and q.dist k are updated after p2is putation stops when q.dist k(=dist(p2,q))is less than mindist(e H,q)of the top entry e H. CircularTrip Algorithm.To collect a round of cells,CircularTrip starts from one cell intersected by the given circle and checks the cells along the circle. Without loss of generality,consider cell c intersected by the circle which locates in the upper-left quadrant as shown in Fig.2.The key fact is that the next cell intersected by the circle(i.e.,the cell in which the arc is connected to one in c) is the adjacent cell either above c(i.e.,c u)or right to c(i.e.,c r).This is because the outgoing circle crosses either the upper boundary or the right boundary of c.These two adjacent cells,c u and c r,are called candidate adjacent cells of c. Clearly,to collect the next cell intersected by the circle,CircularTrip only needs to examine one of the candidate adjacent cells(i.e.,check its mindist(c,q)with the given radius r).As a result,the total cost of CircularTrip to collect a round C of cells is to compute mindist(c,q)of|C|cells,where|C|is the number of cells in round C.Algorithm2presents the implementation of CircularTrip algorithm.It always starts from the left most cell of the round c start(as shown in Fig.2)and examines the cells clockwise along the given circle until c start is encountered again.When the quadrant of the current cell being examined is changed,the directions to find its candidate adjacent cells are updated accordingly(i.e.,lines9–10). 3.2Continuous MonitoringSame as in CPM,when the query moves,we simply re-issue the query on the new location.So,continuous monitoring only concerns update of data points.CircularTrip:An Effective Algorithm for Continuous k NN Queries867Algorithm2.CircularTrip(G,q,r)Input:G:the grid index;q:query point;r:the radius;Output:all the cells which intersect the circle with center q and radius r;1:C:=∅;c:=c start:=c[i,j](i:= (q.x−r)/δ ,j:= q.y/δ );2:D cur:=Up;/*clockwise fashion:Up→Right→Down→Left→Up*/ 3:repeat4:insert c into C;5:c :=the adjacent cell to c in D cur direction;6:if c does not intersect the circle then7:c :=the adjacent cell to c in the next direction of D cur;8:c:=c ;9:if(c.i=c q.i and c.j= (q.y±r)/δ )or(c.i= (q.x±r)/δ and c.j=c q.j)then 10:D cur:=the next direction of D cur;11:until c=c start12:return C;Regarding a query q,the update of data point p, p.id,p pre,p cur ,can be classi-fied into3cases:•internal update:p cur∈q.kNN and p pre∈q.kNN;clearly,only the order of q.kNN is affected so we update the order of data points in q.kNN accordingly.•incoming update:p cur∈q.kNN and p pre∈q.kNN;p is inserted in q.kNN.•outgoing update:p cur∈q.kNN and p pre∈q.kNN;p is deleted from q.kNN.It is immediately verified that only the queries recorded in the influence lists of cell c p pre or cell c p cur may be affected by the update p.id,p pre,p cur ,where c p pre(c p cur)is the cell containing p pre(p cur).Therefore,after receiving an update p.id,p pre,p cur ,continuous monitoring module checks these queries q only.If dist(p cur,q)≤q.dist k,it is treated as an incoming update(if p pre∈q.kNN) or an internal update(if p pre∈q.kNN).On the other hand,If dist(p pre,q)≤q.dist k and dist(p cur,q)>q.dist k,it is handled as an outgoing update.After all the updates of data points are handled as described above,we update the results of affected queries.For each query q,if|q.kNN|≥k,we keep the k closest points and delete all other.For any query q where|q.kNN|<k,we update its result in a similar way to Algorithm1.Note that here the starting radius r0is set as q.dist k.The intuition is the fact that any update within this distance has already been handled.4Experimental Study and RemarksIn accordance with the experimental study of previous work[6,9],we use the same spatio-temporal data generator[10].Data points with slow speed move 1/250of the extent of space per time stamp.Medium and fast speed are5 and25times faster than slow speed,respectively.Continuous k NN queries are868M.A.Cheema,Y.Yuan,and X.Lin 0 200400600 8001000256641641T i m e (s )(a)Time0 1020 30256641641M e m o r y (M B )(b)SpaceFig.3.Effect of k 0 100 200 300 400 200 150 100 70 50 30T im e (s )(a)Varying N (×1K ) 0 100 200 300 400 10 7 5 31T i m e (s )(b)Varying n (×1K )Fig.4.Effect of N and n 0 100 200 300 7050 30 10T i m e (s )(a)Varying Agility (%) 0 50 100 150 200250fast medium slow T i m e (s )(b)Varying Speed Fig.5.Data Movement generated in the similar way.All queries are evaluated at each time stamp and the length of evaluation is 100time stamps.The grid index has 256×256cells.We evaluate our CircularTrip-based continuous k NN technique against various parameters:number of NNs (k ),number of data points (N ),number of queries (n ),and data point agility and moving speed.In our experiments,their default values are 16,100K ,5K ,50%,and medium ,respectively.The experimental results are reported in Fig.3,4,and 5.In this paper,we develop an efficient CircularTrip-based continuous k NN pared with the existing algorithm,our technique accesses the min-imum set of cells for initial computation and significantly reduces the continuous monitoring cost,while less memory space is required.References1.Song,Z.,Roussopoulos,N.:K-nearest neighbor search for moving query point.In:SSTD.(2001)79–962.Tao,Y.,Papadias,D.:Time-parameterized queries in spatio-temporal databases.In:SIGMOD Conference.(2002)334–3453.Tao,Y.,Papadias,D.,Shen,Q.:Continuous nearest neighbor search.In:VLDB.(2002)287–2984.Zhang,J.,Zhu,M.,Papadias,D.,Tao,Y.,Lee,D.L.:Location-based spatial queries.In:SIGMOD.(2003)443–4545.Iwerks,G.S.,Samet,H.,Smith,K.P.:Continuous k-nearest neighbor queries for continuously moving points with updates.In:VLDB.(2003)512–5236.Xiong,X.,Mokbel,M.F.,Aref,W.G.:Sea-cnn:Scalable processing of continuous k-nearest neighbor queries in spatio-temporal databases.In:ICDE.(2005)643–6547.Yu,X.,Pu,K.Q.,Koudas,N.:Monitoring k-nearest neighbor queries over moving objects.In:ICDE.(2005)631–642CircularTrip:An Effective Algorithm for Continuous k NN Queries869 8.Hu,H.,Xu,J.,Lee,D.L.:A generic framework for monitoring continuous spatialqueries over moving objects.In:SIGMOD.(2005)479–4909.Mouratidis,K.,Hadjieleftheriou,M.,Papadias,D.:Conceptual partitioning:Anefficient method for continuous nearest neighbor monitoring.In:SIGMOD.(2005) 634–64510.Brinkhoff,T.:A framework for generating network-based moving objects.GeoIn-formatica6(2)(2002)153–180。