Temporal and Spectral Characteristics of Short Bursts from the Soft Gamma Repeaters 1806-20

Optical Path Principle of the Autocorrelator

The principle of autocorrelation in an optical path refers to the phenomenon in which light emitted from a source, after undergoing reflection or refraction within the same system, overlaps with its original path.

This phenomenon is significant in fields ranging from physics to engineering: it can be used to measure the coherence of light, determine the distance between objects, and even assess the quality of optical components.

In interferometry, the principle of autocorrelation is used to analyze the interference pattern produced when a beam of light is split into two paths and recombined. This allows precise measurement of small displacements, vibrations, and deformations of the objects being examined.
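As a minimal sketch of how a recombined two-beam pattern encodes displacement, the code below uses the standard two-beam interference relation and assumes a Michelson geometry in which one mirror moves. The He-Ne wavelength and the function names are illustrative assumptions, not taken from the text.

```python
import math

# Assumed example source: a helium-neon laser (not specified in the text).
HENE_WAVELENGTH_M = 632.8e-9


def two_beam_intensity(i1, i2, path_difference, wavelength):
    """Intensity when two coherent beams of intensities i1 and i2 recombine
    with the given optical path difference (same length unit as wavelength)."""
    phase = 2.0 * math.pi * path_difference / wavelength
    return i1 + i2 + 2.0 * math.sqrt(i1 * i2) * math.cos(phase)


def mirror_displacement(fringe_count, wavelength):
    """Michelson geometry: moving one mirror by d changes the path difference
    by 2d, so each fringe that passes corresponds to a displacement of wavelength / 2."""
    return fringe_count * wavelength / 2.0


if __name__ == "__main__":
    d = mirror_displacement(100, HENE_WAVELENGTH_M)
    print(f"100 fringes -> {d * 1e6:.2f} micrometres")
    print("bright fringe:", two_beam_intensity(1.0, 1.0, 0.0, HENE_WAVELENGTH_M))
    print("dark fringe:", two_beam_intensity(1.0, 1.0, HENE_WAVELENGTH_M / 2, HENE_WAVELENGTH_M))
```

Under these assumptions, counting 100 fringes corresponds to a mirror displacement of about 31.64 μm (half a wavelength per fringe), and the dark-fringe intensity comes out close to zero.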

Analysis of Ground Motion Characteristics of the Ridgecrest Earthquakes in California, USA

Authors: Zhang Qi, Chen Xi, Zheng Xiangyuan. Source: Journal of Hunan University (Natural Sciences), 2021, No. 01.

CLC number: P315.9. Document code: A. Article ID: 1674-2974(2021)01-0108-09

Abstract: To identify the differences in temporal and spectral characteristics between two earthquakes with nearby hypocenters and a short time interval in the same seismic sequence, the two largest shocks of the July 2019 Ridgecrest, California, sequence, MW 6.4 and MW 7.1, are selected. The attenuation of ground-motion parameters with epicentral distance in these two earthquakes is compared with Yu's model. Differences between the two earthquakes in the three key elements of ground motion (peak ground acceleration, response spectrum, and duration) are analyzed. The potential seismic damage to structures is discussed through response-spectrum analysis of the two most severe ground motions selected. The Hilbert-Huang transform (HHT) is used to obtain the HHT spectra of the ground motions and to identify how energy is distributed in the time and frequency domains. The results show that the attenuation trends of most of the data agree well with Yu's model, and that the response spectra of the two events are similar. The instantaneous frequency corresponding to the maximum energy in the HHT spectrum is close to the peak-trough frequency of the time-series cycle containing the peak ground acceleration.
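Structures may be severely damaged when subjected to two successive earthquakes.

Key words: seismic sequence; ground motion characteristics; attenuation trend; three key elements of ground motion; Hilbert-Huang transform

Selecting appropriate ground motions, based on their engineering characteristics, for dynamic response analysis of structures is important for the seismic design and safety assessment of civil engineering structures. Seismically active countries and regions around the world are gradually building strong-motion networks that cover entire regions, and these networks provide a rich source of data for ground-motion research. Research on ground motion has long concentrated on time- and frequency-domain engineering characteristics such as the three key elements of ground motion (peak acceleration, response spectrum, and duration), and on attenuation relationships for different site conditions derived from recorded data. In recent years, Ji Kun et al. [1] combined recorded ground-motion data with damage surveys to analyze the amplitude characteristics and attenuation of ground-motion parameters in the MS 6.5 Ludian, Yunnan, earthquake. Building on this, Dai Jiawei et al. [2] compared the MS 6.5 Ludian and MS 6.6 Jinggu earthquakes in Yunnan and found that ground-motion parameters attenuated faster in the Ludian earthquake, possibly because of regional differences in Q (the quality factor of the medium). Wang Hengzhi et al. [3] used the H/V single-station spectral ratio method to analyze site amplification and showed that station sites clearly amplify ground motion. Xia Kun et al. [4] analyzed records from several stations during the Wenchuan earthquake and studied how propagation distance and site conditions affect far-field ground motion. Many similar studies have been published in China and elsewhere [5-6]; they are not listed individually here.

On 4 and 6 July 2019, earthquakes of magnitude 6.4 and 7.1 struck the same area of California, USA, less than 34 h apart, an unusual event. The two shocks occurred on the same fault zone, had nearby hypocenters, and belong to the same seismic sequence. A systematic analysis of the ground-motion characteristics of these two events and their effects is therefore valuable. Using ground-motion data from the Center for Engineering Strong Motion Data (CESMD), this paper first studies the attenuation of ground motion. Second, because the two hypocenters are close, it compares the time- and frequency-domain characteristics of the two events, including PGA, response spectra, and duration, and uses the Hilbert-Huang transform to study how ground-motion energy is distributed in the time-frequency domain.

1 Data

1.1 Earthquake information

At 10:33 on 4 July 2019, a magnitude 6.4 earthquake occurred near Ridgecrest, Kern County, in southern California. Its epicenter was 12 km southwest of Searles Valley, at a focal depth of 10.7 km. Less than 34 h later, a magnitude 7.1 earthquake struck the same region at a focal depth of 8.0 km; its epicenter was only 17 km from that of the earlier magnitude 6.4 event. Although the two magnitudes differ by only 0.7, the pair is generally not regarded as a doublet. The consensus is that the later event is the mainshock and the earlier one a foreshock [7]. In fact, a magnitude 5.4 foreshock also occurred between them.

These were the two most destructive earthquakes in California in nearly 20 years. Both occurred on the Little Lake fault zone, about 45 km long and 15 km wide. Near the epicenters, numerous surface cracks and offsets appeared, houses collapsed, roads were damaged, and secondary disasters such as fires broke out, causing one death, dozens of injuries, and economic losses exceeding US$5 billion [8-9]. Fig. 1 [10] and Fig. 2 [11] show surface cracking and building damage caused by the magnitude 7.1 earthquake. Shaking was also clearly felt in Los Angeles and surrounding cities, about 300 km from the epicenter, and in Las Vegas, Nevada.

1.2 Data selection

The strong-motion records used in this paper come from CESMD. Between 4 and 11 July, CESMD recorded 105 earthquakes of MW ≥ 3, including the mainshock (magnitude 7.1) and the second-largest event (magnitude 6.4). After removing events with a focal depth of 0 km or less (which can be attributed to ground collapse or human-induced shaking), 94 earthquakes remain. Their epicenters are shown in Fig. 3. Fig. 4 plots magnitude against time: events between the magnitude 6.4 second-largest event and the magnitude 7.1 mainshock were mostly MW 3-4.5, while aftershocks were mostly MW 4-5.5. Fig. 5 is a scatter plot of magnitude against focal depth. Focal depths are concentrated in 0-13 km, so all events are shallow-focus earthquakes (focal depth less than 70 km), which tend to cause relatively severe damage to buildings at the surface.

2 Attenuation relationship

The magnitude 6.4 and 7.1 events are both large and differ by only 0.7 magnitude units, so their energies are relatively close within the sequence; their records are therefore used to discuss the attenuation of ground-motion parameters. For a given magnitude, epicentral distance, and other conditions, attenuation curves allow ground-motion parameters to be estimated, and these estimates can serve as inputs for seismic design and safety assessment of structures [12-15].

Because both earthquakes were recorded by stations throughout California, the records with the largest peak ground acceleration (PGA) and peak ground velocity (PGV) were selected from each event: the top 49 horizontal records from the magnitude 7.1 earthquake and the top 34 horizontal records from the magnitude 6.4 earthquake. Each set was compared with an existing model.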
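The model is the horizontal attenuation relationship for rock sites developed by Yu Yanxiang [16] from the NGA strong-motion database for the western United States; the results are shown in Fig. 6. In Fig. 6, the Yu model curves pass roughly through the middle of the recorded PGA and PGV values for both the magnitude 7.1 and 6.4 earthquakes, and the records clearly show PGA and PGV decreasing with increasing epicentral distance. The residual sums of squares (SSR) of PGA and PGV are both smaller for the magnitude 6.4 earthquake than for the magnitude 7.1 earthquake (Table 1), indicating that the model predicts the ground-motion parameters of the magnitude 6.4 event more accurately. Notably, in the magnitude 7.1 earthquake, station CLC, at an epicentral distance of R = 34.5 km, recorded PGA and PGV far above the values predicted by Yu's model. There are two reasons for this discrepancy: 1) although its epicentral distance is large, its rupture distance Rrup = 2.8 km is much smaller than that of the other stations, meaning that the station lies very close to the causative fault and the seismic waves travel a short path, which raises the ground-motion parameters; 2) station CLC is on a site of class C (soft rock), which does not satisfy the rock-site condition of Yu's model, so substantial site amplification may have occurred.

3 Three key elements of ground motion

To study the time- and frequency-domain characteristics of ground motion in this sequence, 137 and 126 records were selected from the magnitude 6.4 and 7.1 earthquakes, respectively, and divided by epicentral distance into three groups, < 100 km, 100-200 km, and > 200 km (Table 2), to examine the differences caused by epicentral distance in the two main earthquakes.

3.1 PGA and duration

Table 3 gives the mean horizontal and vertical PGA at different epicentral distances for the magnitude 6.4 and 7.1 earthquakes, together with the ratio of vertical to horizontal PGA. Within each earthquake, both horizontal and vertical PGA decrease as epicentral distance increases, consistent with the general behavior of PGA with distance [17]. The ratio PGAV/PGAH also decreases with increasing epicentral distance. For records in the same distance group from earthquakes of different magnitude (MW 6.4 vs. 7.1), the larger magnitude gives a larger PGA, and PGAV/PGAH also increases with magnitude.

Table 4 lists the mean horizontal and vertical durations at different epicentral distances for the magnitude 6.4 and 7.1 earthquakes. This paper uses the 90% energy duration [18-20] (also commonly called the strong-motion duration) because it better reflects the original characteristics of the ground motion. It is defined as the time over which the ground-motion energy accumulates from 5% to 95% of the total energy; see Eqs. (1) and (2). In these equations, T is the total duration, divided into three segments, 0-T1, T1-T2, and T2-T; T1 and T2 are the times at which 5% and 95% of the total energy are reached; and Td is the 90% energy duration. Table 4 shows that acceleration duration increases with epicentral distance, with the largest increase, for both horizontal and vertical components, at distances greater than 200 km. The ratio of vertical to horizontal duration, TV/TH, is largest at 100-200 km and smallest below 100 km. Notably, unlike the relationship between horizontal and vertical PGA, the vertical 90% energy duration is always longer than the horizontal one. Magnitude also affects duration: in this sequence, the larger earthquake produced longer durations, but TV/TH decreased slightly.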
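The text refers to Eqs. (1) and (2) for this definition. A common formulation, assumed here and not necessarily identical to those equations, takes the cumulative energy as $E(t) = \int_0^t a^2(\tau)\,d\tau$, defines $T_1$ and $T_2$ by $E(T_1) = 0.05\,E(T)$ and $E(T_2) = 0.95\,E(T)$, and sets $T_d = T_2 - T_1$. A minimal sketch on a uniformly sampled record, with an illustrative function name and a synthetic record:

```python
def significant_duration(accel, dt, lower=0.05, upper=0.95):
    """Return (T1, T2, Td) for an acceleration record sampled every dt seconds.

    E(t) is approximated by a running sum of a(t)**2 * dt (an Arias-intensity-like
    measure without its constant factor, which cancels in the ratios). T1 and T2
    are the first sample times at which E(t) reaches the lower and upper
    fractions of the total, and Td = T2 - T1 is the energy duration.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    cumulative = []
    running = 0.0
    for a in accel:
        running += a * a * dt
        cumulative.append(running)
    if not cumulative or cumulative[-1] == 0.0:
        raise ValueError("record is empty or has zero energy")
    total = cumulative[-1]
    t1 = None
    for i, energy in enumerate(cumulative):
        t = (i + 1) * dt  # time at the end of sample i
        if t1 is None and energy >= lower * total:
            t1 = t
        if energy >= upper * total:
            return t1, t, t - t1
    # Only reached if upper > 1.0, since the last sample holds the total.
    raise ValueError("upper fraction must not exceed 1.0")


if __name__ == "__main__":
    dt = 0.01
    # Synthetic record: 2 s quiet, 4 s of unit-amplitude shaking, 2 s quiet.
    record = [0.0] * 200 + [1.0] * 400 + [0.0] * 200
    t1, t2, td = significant_duration(record, dt)
    print(f"T1 = {t1:.2f} s, T2 = {t2:.2f} s, Td = {td:.2f} s")
```

For the synthetic record, which carries all its energy uniformly between 2 s and 6 s, this gives T1 ≈ 2.2 s, T2 ≈ 5.8 s and Td ≈ 3.6 s, to within one sample. Real records would normally be baseline-corrected and filtered first; that step is outside this sketch.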

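Blackbody: The concept of a blackbody is the basis for understanding thermal radiation.

A blackbody is defined as a perfect absorber and emitter.

It absorbs and re-emits all the energy it receives (with no reflection).

Its absorptivity and emissivity are both 1.

In other words, a blackbody is an object whose absorption coefficient for electromagnetic radiation of every wavelength is identically 1 at any temperature.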

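Gray body: an object whose emissivity is less than 1 but does not vary with wavelength.

Solar radiation: The Sun is a source of electromagnetic radiation and the main energy source for remote sensing.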

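The Sun is a ball of hot gas, with a core temperature of about 15×10⁶ K and a surface temperature of about 6000 K.

The total radiant power of the Sun is 3.826×10²⁶ W, and the radiant exitance of the solar surface is 6.284×10⁷ W·m⁻².

The solar spectrum extends from X-rays to radio waves; it is a composite spectrum.

Solar constant: the total solar radiant energy received per unit time on a unit area perpendicular to the Sun's rays at the mean Earth-Sun distance.

Its value is 1.36×10³ W·m⁻².

This value is the integral, over all wavelengths, of the spectral irradiance of sunlight outside the atmosphere.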
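The solar figures above are mutually consistent, which a short calculation can confirm: spreading the total power over a sphere the size of the Sun gives the surface exitance, spreading it over a sphere whose radius is the mean Earth-Sun distance gives the solar constant, and inverting the Stefan-Boltzmann law for a blackbody gives the surface temperature. The solar radius, the Earth-Sun distance and the Stefan-Boltzmann constant are standard values supplied here as assumptions; they are not given in the notes.

```python
import math

SOLAR_POWER_W = 3.826e26      # total solar radiant power, from the text
SOLAR_RADIUS_M = 6.96e8       # assumed: solar radius
MEAN_EARTH_SUN_M = 1.496e11   # assumed: mean Earth-Sun distance (1 AU)
SIGMA = 5.670e-8              # Stefan-Boltzmann constant, W m^-2 K^-4


def flux_at_radius(power_w, radius_m):
    """Flux density (W m^-2) when power_w passes evenly through a sphere of radius radius_m."""
    return power_w / (4.0 * math.pi * radius_m ** 2)


def blackbody_temperature(exitance_w_m2):
    """Invert the Stefan-Boltzmann law M = sigma * T**4 for T (emissivity 1)."""
    return (exitance_w_m2 / SIGMA) ** 0.25


if __name__ == "__main__":
    surface = flux_at_radius(SOLAR_POWER_W, SOLAR_RADIUS_M)
    solar_constant = flux_at_radius(SOLAR_POWER_W, MEAN_EARTH_SUN_M)
    print(f"solar surface exitance: {surface:.3e} W/m^2")
    print(f"solar constant:         {solar_constant:.0f} W/m^2")
    print(f"blackbody temperature:  {blackbody_temperature(surface):.0f} K")
```

This should print values close to 6.28×10⁷ W·m⁻² and 1.36×10³ W·m⁻², and a temperature of roughly 5,800 K, in line with the "about 6000 K" quoted above.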

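Neglecting atmospheric losses, the irradiance reaching the ground can be written as $E = E_0 \cos\theta / D^2$, where $E_0$ is the solar constant, $D$ is the Earth-Sun distance expressed in units of the mean Earth-Sun distance, and $\theta$ is the solar zenith angle (the angle between the Sun's rays and the surface normal).

When $\theta$ is the solar zenith angle at local noon, $E$ is the maximum ground irradiance $E_m$ at that location.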


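The solar irradiance received at the ground depends on the solar zenith angle.

Neglecting atmospheric losses, the ground irradiance $E$ can be taken as approximately proportional to $\cos\theta$:

$$E = E_0 \cos\theta$$

where $E_0$ is the solar constant, a physical quantity describing the radiant flux density of solar radiation.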
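A minimal sketch of the irradiance relation $E = E_0 \cos\theta / D^2$, neglecting the atmosphere as the notes do. The noon zenith angle |latitude - declination| is a standard approximation added here for the $E_m$ case; the site and date in the example, and the function names, are hypothetical.

```python
import math

SOLAR_CONSTANT_W_M2 = 1.36e3  # value quoted in the text


def ground_irradiance(zenith_deg, distance_au=1.0, e0=SOLAR_CONSTANT_W_M2):
    """E = E0 * cos(theta) / D**2 with atmospheric losses neglected.

    zenith_deg: solar zenith angle in degrees; distance_au: Earth-Sun distance
    in units of the mean distance. Returns 0 when the Sun is at or below the horizon.
    """
    if zenith_deg >= 90.0:
        return 0.0
    return e0 * math.cos(math.radians(zenith_deg)) / distance_au ** 2


def noon_zenith_deg(latitude_deg, declination_deg):
    """Approximate solar zenith angle at local solar noon: |latitude - declination|."""
    return abs(latitude_deg - declination_deg)


if __name__ == "__main__":
    print(f"overhead Sun:  {ground_irradiance(0.0):.0f} W/m^2")
    print(f"zenith 60 deg: {ground_irradiance(60.0):.0f} W/m^2")
    # Hypothetical site at 40 deg N on an equinox (declination about 0 deg).
    em = ground_irradiance(noon_zenith_deg(40.0, 0.0))
    print(f"noon maximum E_m at 40 N: {em:.0f} W/m^2")
```

The three printed values are about 1360, 680 and 1042 W·m⁻²: halving $\cos\theta$ halves the irradiance, which is the proportionality stated above.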

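Earth radiation: Earth radiation can be divided into short-wave radiation (0.3-2.5 μm) and long-wave radiation (above 6 μm).

As Fig. 1.7 shows, the Earth's short-wave radiation is dominated by reflection of sunlight from the surface; the Earth's own thermal emission is negligible in this range.

For the Earth's long-wave radiation, only the thermal emission of surface objects themselves needs to be considered; in this range the influence of solar irradiance is very small.

In the mid-infrared band between the two (2.5-6 μm), both solar radiation and thermal emission contribute, and neither can be neglected.

For the reflected component of the Earth's short-wave radiation, the radiance depends on the solar irradiance and on the reflectance of the surface features.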
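Why the split falls near 2.5 μm and 6 μm can be illustrated with Planck's law and Wien's displacement law, standard results that these notes do not spell out. Treating the Sun as a roughly 6000 K blackbody, as in the notes, and the Earth's surface as a roughly 300 K blackbody (an assumed round value), the emission peaks land in the short-wave and long-wave ranges respectively. Constant values and function names are supplied for illustration.

```python
import math

H = 6.626e-34      # Planck constant, J s
C = 2.998e8        # speed of light in vacuum, m/s
K_B = 1.381e-23    # Boltzmann constant, J/K
WIEN_B = 2.898e-3  # Wien displacement constant, m K


def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance B(lambda, T) in W m^-2 sr^-1 m^-1 (Planck's law)."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)


def peak_wavelength_um(temp_k):
    """Wien's displacement law, lambda_max = b / T, in micrometres."""
    return WIEN_B / temp_k * 1e6


if __name__ == "__main__":
    for name, temp in (("Sun (~6000 K)", 6000.0), ("Earth surface (~300 K)", 300.0)):
        print(f"{name}: emission peaks near {peak_wavelength_um(temp):.2f} um")
```

This prints peaks near 0.48 μm for the Sun and about 9.7 μm for the Earth's surface. The sketch ignores emissivity below 1 and atmospheric absorption, so it only indicates where each source dominates.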

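Blackbody radiation: the spectral radiance emitted by a blackbody depends only on wavelength and temperature and is given by Planck's law.

Electromagnetic spectrum: The electromagnetic spectrum is the arrangement of electromagnetic waves by their wavelength or frequency in vacuum.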

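It includes, among others, radio waves, microwaves, infrared, visible light, ultraviolet, X-rays, γ-rays, and cosmic rays.

The divisions between spectral regions have no strict physical definition, so the boundaries are neither sharp nor fixed; neighboring regions overlap and grade into one another.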

Metrics of scale in remote sensing and GIS

Michael F. Goodchild (National Center for Geographic Information and Analysis, Department of Geography, University of California, Santa Barbara)

ABSTRACT: The term scale has many meanings, some of which survive the transition from analog to digital representations of information better than others. Specifically, the primary metric of scale in traditional cartography, the representative fraction, has no well-defined meaning for digital data. Spatial extent and spatial resolution are both meaningful for digital data, and their ratio, symbolized as L/S, is dimensionless. L/S appears confined in practice to a narrow range. The implications of this observation are explored in the context of Digital Earth, a vision for an integrated geographic information system. It is shown that despite the very large data volumes potentially involved, Digital Earth is nevertheless technically feasible with today's technology.

KEYWORDS: Scale, Geographic Information System, Remote Sensing, Spatial Resolution

INTRODUCTION

Scale is a heavily overloaded term in English, with abundant definitions attributable to many different and often independent roots, such that meaning is strongly dependent on context. Its meanings in “the scales of justice” or “scales over one's eyes” have little connection to each other, or to its meaning in a discussion of remote sensing and GIS. But meaning is often ambiguous even in that latter context. For example, scale to a cartographer most likely relates to the representative fraction, or the scaling ratio between the real world and a map representation on a flat, two-dimensional surface such as paper, whereas scale to an environmental scientist likely relates either to spatial resolution (the representation's level of spatial detail) or to spatial extent (the representation's spatial coverage).
As a result, a simple phrase like “large scale” can send quite the wrong message when communities and disciplines interact: to a cartographer it implies fine detail, whereas to an environmental scientist it implies coarse detail. A computer scientist might say that in this respect the two disciplines were not interoperable.

In this paper I examine the current meanings of scale, with particular reference to the digital world, and the metrics associated with each meaning. The concern throughout is with spatial meanings, although temporal and spectral meanings are also important. I suggest that certain metrics survive the transition to digital technology better than others.

The main purpose of this paper is to propose a dimensionless ratio of two such metrics that appears to have interesting and useful properties. I show how this ratio is relevant to a specific vision for the future of geographic information technologies termed Digital Earth. Finally, I discuss how scale might be defined in ways that are accessible to a much wider range of users than cartographers and environmental scientists.

FOUR MEANINGS OF SCALE

LEVEL OF SPATIAL DETAIL: REPRESENTATIVE FRACTION

A paper map is an analog representation of geographic variation, rather than a digital representation. All features on the Earth's surface are scaled using an approximately uniform ratio known as the representative fraction (it is impossible to use a perfectly uniform ratio because of the curvature of the Earth's surface). The power of the representative fraction stems from the many different properties that are related to it in mapping practice. First, paper maps impose an effective limit on the positional accuracy of features, because of instability in the material used to make maps, limited ability to control the location of the pen as the map is drawn, and many other practical considerations.
Because positional accuracy on the map is limited, effective positional accuracy on the ground is determined by the representative fraction. A typical (and comparatively generous) map accuracy standard is 0.5 mm, and thus positional accuracy is 0.5 mm divided by the representative fraction (e.g., 12.5 m for a map at 1:25,000). Second, practical limits on the widths of lines and the sizes of symbols create a similar link between spatial resolution and representative fraction: it is difficult to show features much less than 0.5 mm across with adequate clarity. Finally, representative fraction serves as a surrogate for the features depicted on maps, in part because of this limit to spatial resolution, and in part because of the formal specifications adopted by mapping agencies, which are in turn related to spatial resolution. In summary, representative fraction characterizes many important properties of paper maps.

In the digital world these multiple associations are not necessarily linked. Features can be represented as points or lines, so the physical limitations to the minimum sizes of symbols that are characteristic of paper maps no longer apply. For example, a database may contain some features associated with 1:25,000 map specifications, but not all; and may include representations of features smaller than 12.5 m on the ground. Positional accuracy is also no longer necessarily tied to representative fraction, since points can be located to any precision, up to the limits imposed by internal representations of numbers (e.g., single precision is limited to roughly 7 significant digits, double precision to 15). Thus the three properties that were conveniently summarized by representative fraction (positional accuracy, spatial resolution, and feature content) are now potentially independent.

Unfortunately this has led to a complex system of conventions in an effort to preserve representative fraction as a universal defining characteristic of digital databases.
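The arithmetic linking a map accuracy standard, the representative fraction and ground accuracy can be sketched in a few lines. The function names are illustrative, and the reverse function mirrors the convention, described in the text that follows, of citing a fraction for a digital database from its positional accuracy.

```python
def ground_accuracy_m(map_accuracy_mm, scale_denominator):
    """Ground positional accuracy (m) implied by a map accuracy standard
    (mm on the sheet) at a representative fraction of 1:scale_denominator."""
    return map_accuracy_mm / 1000.0 * scale_denominator


def implied_scale_denominator(ground_accuracy_m_value, map_accuracy_mm=0.5):
    """Reverse convention: the 1:N fraction a digital database would be
    assigned so that map_accuracy_mm on a sheet equals its ground accuracy."""
    return ground_accuracy_m_value / (map_accuracy_mm / 1000.0)


if __name__ == "__main__":
    print(f"1:25,000 at 0.5 mm -> {ground_accuracy_m(0.5, 25000):.1f} m")
    print(f"6 m accuracy -> 1:{implied_scale_denominator(6.0):,.0f}")
```

This prints 12.5 m for the 1:25,000 case and 1:12,000 for a database with 6 m positional accuracy.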
When such databases are created directly from paper maps, by digitizing or scanning, it is possible for all three properties to remain correlated. But in other cases the representative fraction cited for a digital database is the one implied by its positional accuracy (e.g., a database has representative fraction 1:12,000 because its positional accuracy is 6 m); and in other cases it is the feature content or spatial resolution that defines the conventional representative fraction (e.g., a database has representative fraction 1:12,000 because features at least 6 m across are included). Moreover, these conventions are typically not understood by novice users (the general public, or children), who may consequently be very confused by the use of a fraction to characterize spatial data, despite its familiarity to specialists.

SPATIAL EXTENT

The term scale is often used to refer to the extent or scope of a study or project, and spatial extent is an obvious metric. It can be defined in area measure, but for the purposes of this discussion a length measure is preferred, and the symbol L will be used. For a square project area it can be set to the width of the area, but for rectangular or oddly shaped project areas the square root of area provides a convenient metric. Spatial extent defines the total amount of information relevant to a project, which rises with the square of a length measure.

PROCESS SCALE

The term process refers here to a computational model or representation of a landscape-modifying process, such as erosion or runoff. From a computational perspective, a process is a transformation that takes a landscape from its existing state to some new state, and in this sense processes are a subset of the entire range of transformations that can be applied to spatial data.

Define a process as a mapping $b(x, t_2) = f(a(x, t_1))$, where $a$ is a vector of input fields, $b$ is a vector of output fields, $f$ is a function, $t$ is time, $t_2$ is later in time than $t_1$, and $x$ denotes location.
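To make the process definition concrete, here is a minimal sketch of one such process, a centered moving average standing in for $f$, applied to a one-dimensional profile. The white-noise input, window width, lags and function names are assumptions chosen for illustration. The sketch also computes an empirical semivariogram, showing the low-pass behavior discussed in the next paragraph: averaging lowers the short-distance part of the variogram relative to the long-distance part.

```python
import random


def moving_average(field, width=3):
    """Example process b(x, t2) = f(a(x, t1)): each output cell is the mean of
    the input cells in a centred window (truncated at the array edges)."""
    half = width // 2
    n = len(field)
    out = []
    for i in range(n):
        window = field[max(0, i - half):min(n, i + half + 1)]
        out.append(sum(window) / len(window))
    return out


def semivariance(field, lag):
    """Empirical semivariance: gamma(h) = mean((z[i + h] - z[i])**2) / 2."""
    diffs = [(field[i + lag] - field[i]) ** 2 for i in range(len(field) - lag)]
    return sum(diffs) / (2 * len(diffs))


def short_to_long_ratio(field, short_lag=1, long_lag=10):
    """gamma(short) / gamma(long); a low-pass process should lower this ratio."""
    return semivariance(field, short_lag) / semivariance(field, long_lag)


if __name__ == "__main__":
    rng = random.Random(42)
    a = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # rugged input profile
    b = moving_average(a, width=3)                   # smoothed output profile
    print(f"input  gamma(1)/gamma(10) = {short_to_long_ratio(a):.2f}")
    print(f"output gamma(1)/gamma(10) = {short_to_long_ratio(b):.2f}")
```

For an uncorrelated input, the short-to-long semivariance ratio should come out close to 1 before smoothing and close to 1/3 after a 3-cell average.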
Processes vary according to how they modify the spatial characteristics of their inputs, and these are best expressed in terms of contributions to the spatial spectrum. For example, some processes determine $b(x, t_2)$ based only on the inputs at the same location, $a(x, t_1)$, and thus have minimal effect on spatial spectra. Other processes produce outputs that are smoother than their inputs, through processes of averaging or convolution, and thus act as low-pass filters. Less commonly, processes produce outputs that are more rugged than their inputs, by sharpening rather than smoothing gradients, and thus act as high-pass filters.

The scale of a process can be defined by examining the effects of spectral components on outputs. If some wavelength s exists such that components with wavelengths shorter than s have negligible influence on outputs, then the process is said to have a scale of s. It follows that if s is less than the spatial resolution S of the input data, the process will not be accurately modeled.

While these conclusions have been expressed in terms of spectra, it is also possible to interpret them in terms of variograms and correlograms. A low-pass filter reduces variance over short distances, relative to variance over long distances. Thus the short-distance part of the variogram is lowered, and the short-distance part of the correlogram is increased. Similarly a high-pass filter increases variance over short distances relative to variance over long distances.

L/S RATIO

While scaling ratios make sense for analog representations, the representative fraction is clearly problematic for digital representations. But spatial resolution and spatial extent both appear to be meaningful in both analog and digital contexts, despite the problems with spatial resolution for vector data. Both S and L have dimensions of length, so their ratio is dimensionless.
Dimensionless ratios often play a fundamental role in science (e.g., the Reynolds number in hydrodynamics), so it is possible that L/S might play a fundamental role in geographic information science. In this section I examine some instances of the L/S ratio, and possible interpretations that provide support for this speculation.

- Today's computing industry seems to have settled on a screen standard of order 1 megapel, or 1 million picture elements. The first PCs had much coarser resolutions (e.g., the CGA standard of the early 1980s), but improvements in display technology led to a series of more and more detailed standards. Today, however, there is little evidence of pressure to improve resolution further, and the industry seems to be content with an L/S ratio of order 10³. Similar ratios characterize the current digital camera industry, although professional systems can be found with ratios as high as 4,000.
- Remote sensing instruments use a range of spatial resolutions, from the 1 m of IKONOS to the 1 km of AVHRR. Because a complete coverage of the Earth's surface at 1 m requires on the order of 10¹⁵ pixels, data are commonly handled in more manageable tiles, or approximately rectangular arrays of cells. For years, Landsat TM imagery has been tiled in arrays of approximately 3,000 × 3,000 cells, for an L/S ratio of 3,000.
- The value of S for a paper map is determined by the technology of map-making, and techniques of symbolization, and a value of 0.5 mm is not atypical. A map sheet 1 m across thus achieves an L/S ratio of 2,000.
- Finally, the human eye's S can be defined as the size of a retinal cell, and the typical eye has order 10⁸ retinal cells, implying an L/S ratio of 10,000.
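The L/S figures in these examples follow from simple arithmetic. The sketch below recomputes them and their base-10 logarithms, taking L/S for a roughly square array as the square root of its cell count (an assumption where the text quotes only a total); function names are illustrative.

```python
import math


def ls_from_cells(cell_count):
    """Linear L/S of a roughly square array containing cell_count cells."""
    return math.sqrt(cell_count)


def ls_from_lengths(extent, resolution):
    """L/S from an extent and a resolution expressed in the same length unit."""
    return extent / resolution


# Figures quoted in the text.
EXAMPLES = {
    "1-megapel screen": ls_from_cells(1e6),
    "Landsat TM tile, 3,000 x 3,000 cells": ls_from_cells(3000 * 3000),
    "1 m map sheet at S = 0.5 mm": ls_from_lengths(1.0, 0.5e-3),
    "human eye, ~1e8 retinal cells": ls_from_cells(1e8),
}

if __name__ == "__main__":
    for name, ratio in EXAMPLES.items():
        print(f"{name:38s} L/S = {ratio:7.0f}   log L/S = {math.log10(ratio):.2f}")
```

Every example falls between log L/S = 3 (the 1-megapel screen) and log L/S = 4 (the eye), the narrow range the text goes on to discuss.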

Interestingly, then, the screen resolution that users find generally satisfactory corresponds approximately to the parameters of the human visual system; the eye's L/S is somewhat larger, but the computer screen typically fills only a part of the visual field.

These examples suggest that L/S ratios of between 10³ and 10⁴ are found across a wide range of technologies and settings, including the human eye. Two alternative explanations immediately suggest themselves: the narrow range may be the result of technological and economic constraints, and thus may expand as technology advances and becomes cheaper; or it may be due to cognitive constraints, and thus is likely to persist despite technological change.

This tension between technological, economic, and cognitive constraints is well illustrated by the case of paper maps, which evolved under what from today's perspective were severe technological and economic constraints. For example, there are limits to the stability of paper and to the kinds of markings that can be made by hand-held pens. The costs of printing drop dramatically with the number of copies printed, because of strong economies of scale in the printing process, so maps must satisfy many users to be economically feasible. Goodchild [2000] has elaborated on these arguments. At the same time, maps serve cognitive purposes, and must be designed to convey information as effectively as possible. Any aspect of map design and production can thus be given two alternative interpretations: one, that it results from technological and economic constraints, and the other, that it results from the satisfaction of cognitive objectives.
If the former is true, then changes in technology may lead to changes in design and production; but if the latter is true, changes in technology may have no impact.

The persistent narrow range of L/S from paper maps to digital databases to the human eye suggests an interesting speculation: that cognitive, not technological or economic, objectives confine L/S to this range. From this perspective, L/S ratios of more than 10⁴ have no additional cognitive value, while L/S ratios of less than 10³ are perceived as too coarse for most purposes. If this speculation is true, it leads to some useful and general conclusions about the design of geographic information handling systems. In the next section I illustrate this by examining the concept of Digital Earth. For simplicity, the discussion centers on the log to base 10 of the L/S ratio, denoted by log L/S, and the speculation that its effective range is between 3 and 4.

This speculation also suggests a simple explanation for the fact that scale is used to refer both to L and to S in environmental science, without hopelessly confusing the listener. At first sight it seems counterintuitive that the same term should be used for two independent properties. But if the value of log L/S is effectively fixed, then spatial resolution and extent are strongly correlated: a coarse spatial resolution implies a large extent, and a detailed spatial resolution implies a small extent. If so, then the same term is able to satisfy both needs.

THE VISION OF DIGITAL EARTH

The term Digital Earth was coined in 1992 by U.S. Vice President Al Gore [Gore, 1992], but it was in a speech written for delivery in 1998 that Gore fully elaborated the concept (www.d~~Pl9980131 .html): “Imagine, for example, a young child going to a Digital Earth exhibit at a local museum. After donning a head-mounted display, she sees Earth as it appears from space.
Using a data glove, she zooms in, using higher and higher levels of resolution, to see continents, then regions, countries, cities, and finally individual houses, trees, and other natural and man-made objects. Having found an area of the planet she is interested in exploring, she takes the equivalent of a 'magic carpet ride' through a 3-D visualization of the terrain.”

This vision of Digital Earth (DE) is a sophisticated graphics system, linked to a comprehensive database containing representations of many classes of phenomena. It implies specialized hardware in the form of an immersive environment (a head-mounted display), with software capable of rendering the Earth's surface at high speed, and from any perspective. Its spatial resolution ranges down to 1 m or finer. On the face of it, then, the vision suggests data requirements and bandwidths that are well beyond today's capabilities. If each pixel of a 1 m resolution representation of the Earth's surface was allocated an average of 1 byte then a total of 1 Pb of storage would be required; storage of multiple themes could push this total much higher. In order to zoom smoothly down to 1 m it would be necessary to store the data in a consistent data structure that could be accessed at many levels of resolution. Many data types are not obviously renderable (e.g., health, demographic, and economic data), suggesting a need for extensive research on visual representation.

The bandwidth requirements of the vision are perhaps the most daunting problem. To send 1 Pb of data at 1 Mb per second would take roughly a human lifetime, and over 12,000 years at 56 Kbps. Such requirements dwarf those of speech and even full-motion video. But these calculations assume that the DE user would want to see the entire Earth at 1 m resolution.
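The storage and log L/S figures for a full-Earth, 1 m representation can be checked with a short calculation. The sketch assumes an Earth surface area of about 5.1×10¹⁴ m² (not stated in the text) and, following the SPATIAL EXTENT section, takes L as the square root of area; function names are illustrative.

```python
import math

# Assumption for illustration: Earth's surface area is about 5.1e14 m^2.
EARTH_SURFACE_M2 = 5.1e14


def storage_bytes(area_m2, resolution_m, bytes_per_pixel=1.0):
    """Uncompressed bytes needed to tile area_m2 with square pixels of side resolution_m."""
    return area_m2 / resolution_m ** 2 * bytes_per_pixel


def log_ls_for_area(area_m2, resolution_m):
    """log10(L/S), taking L as the square root of the area (see SPATIAL EXTENT)."""
    return math.log10(math.sqrt(area_m2) / resolution_m)


def view_bits_per_second(log_ls, bytes_per_pixel=1.0, refresh_hz=1.0):
    """Uncompressed bandwidth for a square view of (L/S)**2 pixels refreshed refresh_hz times a second."""
    pixels = 10.0 ** (2.0 * log_ls)
    return pixels * bytes_per_pixel * 8.0 * refresh_hz


if __name__ == "__main__":
    print(f"whole Earth at 1 m: {storage_bytes(EARTH_SURFACE_M2, 1.0):.1e} bytes")
    print(f"whole Earth at 1 m: log L/S = {log_ls_for_area(EARTH_SURFACE_M2, 1.0):.2f}")
    print(f"log L/S = 3 view: {view_bits_per_second(3.0):.1e} bits/s")
```

At 1 byte per pixel this gives about 5×10¹⁴ bytes, the same order of magnitude as the 1 Pb quoted above, and a log L/S of about 7.35. A view held at log L/S = 3 needs about 8×10⁶ bits/s uncompressed at one refresh per second, and each one-unit drop in log L/S cuts that by a factor of 100, because the pixel count scales with (L/S)².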
The previous analysis of log L/S suggested that for cognitive (and possibly technological and economic) reasons user requirements rarely stray outside the range of 3 to 4, whereas a full Earth at 1 m resolution implies a log L/S of approximately 7. A log L/S of 3 suggests that a user interested in the entire Earth would be satisfied with 10 km resolution; a user interested in California might expect 1 km resolution; and a user interested in Santa Barbara County might expect 100 m resolution. Moreover, these resolutions need apply only to the center of the current field of view.

On this basis the bandwidth requirements of DE become much more manageable. Assuming an average of 1 byte per pixel, a megapel image requires order 10⁷ bps if refreshed once per second. Every one-unit reduction in log L/S results in two orders of magnitude reduction in bandwidth requirements, because the number of pixels grows with the square of L/S. Thus a T1 connection seems sufficient to support DE, based on reasonable expectations about compression, and reasonable refresh rates. On this basis DE appears to be feasible with today's communication technology.

CONCLUDING COMMENTS

I have argued that scale has many meanings, only some of which are well defined for digital data, and therefore useful in the digital world in which we increasingly find ourselves. The practice of establishing conventions which allow the measures of an earlier technology (the paper map) to survive in the digital world is appropriate for specialists, but is likely to make it impossible for non-specialists to identify their needs. Instead, I suggest that two measures, identified here as the large measure L and the small measure S, be used to characterize the scale properties of geographic data.

The vector-based representations do not suggest simple bases for defining S, because their spatial resolutions are either variable or arbitrary. On the other hand spatial variation in S makes good sense in many situations.
In social applications, the processes that characterize human behavior appear capable of operating at different scales. The scale depends on whether people act in the intensive, pedestrian-oriented spaces of the inner city or the extensive, car-oriented spaces of the suburbs. In environmental applications, variation in apparent spatial resolution may be a logical sampling response to a phenomenon that is known to vary more rapidly in some areas than in others. From a geostatistical perspective this might suggest a non-stationary variogram or correlogram (for examples of non-stationary geostatistical analysis see Atkinson [2001]). This may be one factor in the spatial distribution of weather observation networks, though others, such as uneven accessibility and uneven need for information, are also clearly important.

The primary purpose of this paper has been to offer a speculation on the significance of the dimensionless ratio L/S. The ratio is the major determinant of data volume, and consequently of processing speed, in digital systems. It also has cognitive significance because it can be defined for the human visual system. I suggest that there are few reasons in practice why log L/S should fall outside the range 3–4, and that this provides an important basis for designing systems for handling geographic data. Digital Earth was introduced as one such system. A constrained ratio also implies that L and S are strongly correlated in practice. The common use of the same term, scale, to refer to both is consistent with this.

ACKNOWLEDGMENT

The Alexandria Digital Library and its Alexandria Digital Earth Prototype, the source of much of the inspiration for this paper, are supported by the U.S. National Science Foundation.

REFERENCES

Atkinson, P.M., 2001. Geographical information science: Geocomputation and nonstationarity. Progress in Physical Geography 25(1): 111–122.
Goodchild, M.F., 2000. Communicating geographic information in a digital age. Annals of the Association of American Geographers 90(2): 344–355.
Goodchild, M.F. & J. Proctor, 1997. Scale in a digital geographic world. Geographical and Environmental Modelling 1(1): 5–23.
Gore, A., 1992. Earth in the Balance: Ecology and the Human Spirit. Houghton Mifflin, Boston, 407 pp.
Lam, N.-S. & D. Quattrochi, 1992. On the issues of scale, resolution, and fractal analysis in the mapping sciences. Professional Geographer 44(1): 88–98.
Quattrochi, D.A. & M.F. Goodchild (Eds.), 1997. Scale in Remote Sensing and GIS. Lewis Publishers, Boca Raton, 406 pp.

Chinese translation (title and opening of the abstract, rendered in English): Metrics of Scale in Remote Sensing and GIS. Michael F. Goodchild (National Center for Geographic Information and Analysis, and Department of Geography, University of California, Santa Barbara). Abstract: The term scale has many meanings, some of which survive the transition from analog to digital representations of information better than others.

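Auditory Masking Effects and Their Applications

Abstract: Auditory masking is the influence of one sound on the auditory system's perception of another sound. It is widespread in nature.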

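Auditory masking plays an important role in how humans and animals perceive and localize sounds. It is also increasingly applied in daily life and in clinical treatment.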

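Keywords: masking effect; applications
CLC number: TN912.3  Document code: A

Humans and animals live in environments full of sound. Humans rely on sound to communicate. Many animals use sound to communicate, to find food and mates, and even to sense their surroundings.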

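Some biologically meaningful sounds do not occur in isolation in nature. When such sounds interact and influence one another, auditory masking results.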

In auditory research, masking refers to the influence of one sound on the auditory system's perception of another sound.

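Early psychoacoustic tests presented two acoustic signals from different locations. When the interval between them was short enough, subjects perceived the two signals as a single fused sound and could identify only the location of the leading sound. In other words, the first sound (the masker) exerts forward masking on the lagging sound (the probe). At the same time, the lagging sound exerts some degree of backward masking on the perception of the leading sound.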

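In general, masking weakens the auditory system's ability to discriminate and perceive sounds. Responses to the probe decrease, perception thresholds rise, and the probe becomes harder to detect. In some cases, however, the masker can instead facilitate neuronal responses to the probe and increase neuronal excitability.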

Auditory masking is very common in nature and plays a very important role in sound perception and localization by humans and animals.

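As understanding of auditory masking has grown, it has been increasingly applied in daily life and in the clinical treatment of related disorders.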

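1 The Role of Masking in Sound Perception and Localization

1.1 Sound Source Localization

When a sound is produced in a reverberant environment, it propagates in different directions and is then reflected back from nearby surfaces. The direct sound and its reflections interact, producing masking effects.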
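The delay between the direct sound and a reflection follows from room geometry. The minimal sketch below computes that extra delay with the image-source method. It assumes a single flat wall, straight-line propagation and a speed of sound of 343 m/s (air at about 20 °C). The function name is hypothetical. The code models only the acoustics; it does not predict whether a listener fuses the two sounds.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C (assumed)

def reflection_delay_ms(source, listener, wall_x):
    """Extra arrival delay (ms) of a first-order reflection from the plane x = wall_x.

    Image-source method: the reflected path is as long as the straight line
    from the source's mirror image behind the wall to the listener.
    """
    sx, sy = source
    image = (2.0 * wall_x - sx, sy)          # mirror the source across the wall
    direct = math.dist(source, listener)
    reflected = math.dist(image, listener)
    return (reflected - direct) / SPEED_OF_SOUND * 1000.0

# Source at the origin, listener 2 m away, wall 1.5 m behind the source:
# the reflection travels 3 m farther, so it arrives several milliseconds after the direct sound.
print(round(reflection_delay_ms((0.0, 0.0), (2.0, 0.0), wall_x=-1.5), 2))
```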

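The auditory system therefore has to analyze the interaction between the direct sound and its reflections, and perceive and localize sounds according to the different properties of the reflections. This looks like a chaotic jumble of information. Even so, we can still localize these sources and discern their meaning quite accurately.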

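The ability to localize sound sources is very important. Knowing the direction of an object helps us turn our attention toward a sound source or away from it.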

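For some animals, especially echolocating animals such as bats, sound localization helps them find prey or avoid predators. It is an ability essential for survival.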

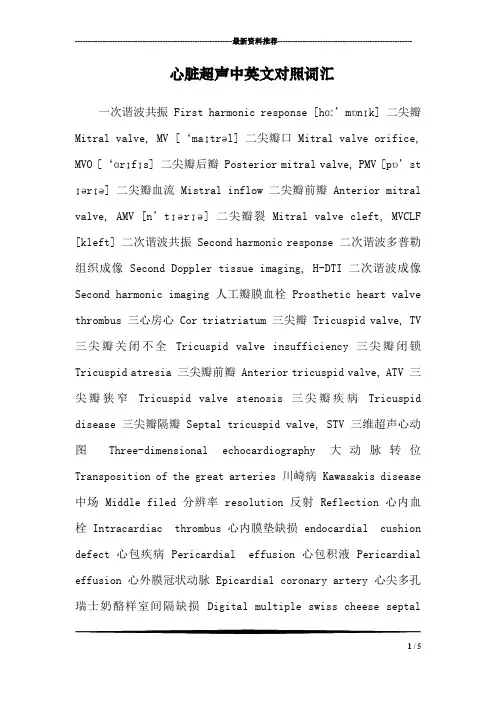

Chinese–English Glossary of Echocardiography Terms

一次谐波共振 First harmonic response [hɑːˈmɒnɪk]
二尖瓣 Mitral valve, MV [ˈmaɪtrəl]
二尖瓣口 Mitral valve orifice, MVO [ˈɒrɪfɪs]
二尖瓣后瓣 Posterior mitral valve, PMV [pɒˈstɪərɪə]
二尖瓣血流 Mitral inflow
二尖瓣前瓣 Anterior mitral valve, AMV [ænˈtɪərɪə]
二尖瓣裂 Mitral valve cleft, MVCLF [kleft]
二次谐波共振 Second harmonic response
二次谐波多普勒组织成像 Second harmonic Doppler tissue imaging, H-DTI
二次谐波成像 Second harmonic imaging
人工瓣膜血栓 Prosthetic heart valve thrombus
三心房心 Cor triatriatum
三尖瓣 Tricuspid valve, TV
三尖瓣关闭不全 Tricuspid valve insufficiency
三尖瓣闭锁 Tricuspid atresia
三尖瓣前瓣 Anterior tricuspid valve, ATV
三尖瓣狭窄 Tricuspid valve stenosis
三尖瓣疾病 Tricuspid valve disease
三尖瓣隔瓣 Septal tricuspid valve, STV
三维超声心动图 Three-dimensional echocardiography
大动脉转位 Transposition of the great arteries
川崎病 Kawasaki disease
中场 Middle field
分辨率 Resolution
反射 Reflection
心内血栓 Intracardiac thrombus
心内膜垫缺损 Endocardial cushion defect
心包疾病 Pericardial disease
心包积液 Pericardial effusion
心外膜冠状动脉 Epicardial coronary artery
心尖多孔瑞士奶酪样室间隔缺损 Apical multiple Swiss-cheese ventricular septal defect
心肌内冠状动脉 Intramyocardial coronary artery
心肌对比超声心动图 Myocardial contrast echocardiography, MCE
心肌梗塞 Myocardial infarction
心肌梗塞并发症 Complications of myocardial infarction
心房内血栓 Atrial thrombus
心房黏液瘤 Atrial myxoma, MYX
心室内附壁血栓 Intraventricular mural thrombus
心绞痛 Angina pectoris
心脏声学造影 Cardiac acoustic contrast
心脏肿瘤 Cardiac tumor
心脏移植 Heart transplantation
主动脉二叶瓣 Bicuspid aortic valve
主动脉瓣口 Aortic valve orifice, AVO
主动脉瓣狭窄 Aortic valve stenosis
主肺动脉 Main pulmonary artery, MPA
主瓣 Main lobe
功率谱 Power spectrum
右心房 Right atrium, RA
右心室 Right ventricle, RV
右心室收缩时间间期 Right ventricular systolic time intervals
右心室收缩前间期 Right ventricular pre-ejection period, RVPEP
右心室射血时间 Right ventricular ejection time, RVET
右冠状动脉起源于肺动脉 Anomalous origin of the right coronary artery from the pulmonary artery
右室双出口 Double-outlet right ventricle
右心室双腔心 Double-chambered right ventricle
右心室流出道 Right ventricular outflow tract
对比造影谐波成像 Contrast agent harmonic imaging, CAHI
对比超声心动图学 Contrast echocardiography, CE
对数压缩 Logarithmic compression
尼奎斯特频率极限 Nyquist frequency limit
左心耳 Left atrial appendage, LAA
左心房 Left atrium
左心室 Left ventricle, LV
左心室长轴切面 Left ventricular long-axis view
左心室发育不全综合征 Hypoplastic left heart syndrome
左心室收缩末期内径 Left ventricular end-systolic dimension, LVESD
左心室流出道梗阻 Left ventricular outflow tract obstruction
左心室舒张末期内径 Left ventricular end-diastolic dimension, LVEDD
左冠状动脉起源于肺动脉 Anomalous origin of the left coronary artery from the pulmonary artery
平行扫描 Parallel scanning
永存动脉干 Persistent truncus arteriosus
电子相控阵扇形扫描 Phased-array sector scan
皮肤黏膜淋巴结综合征 Mucocutaneous lymph node syndrome, MCLS
节制束 Moderator band
伪像 Artifacts
伪彩处理技术 Pseudo-color processing technique
先天性肺动脉口狭窄 Congenital pulmonary stenosis
先天性冠状动脉瘘 Congenital coronary artery fistula
共振 Resonance
共振频率 Resonant frequency
压力半降时间 Pressure half-time, PHT
回声失落 Echo dropout
回声增强效应 Echo enhancement effect
团注 Bolus
多平面经食道超声心动图 Multiplane transesophageal echocardiography
多点选通式多普勒 Multigate Doppler
多普勒方程 Doppler equation
多普勒组织 M 型模式 Doppler tissue M-mode, DT-M-mode
多普勒组织加速度图 Doppler tissue acceleration, DTA
多普勒组织成像 Doppler tissue imaging, DTI
多普勒组织脉冲频谱 Doppler tissue pulsed-wave mode, DT-DTE
多普勒组织能量图 Doppler tissue energy, DTE
多普勒组织速度图 Doppler tissue velocity, DTV
多普勒效应 Doppler effect
多普勒超声心动图 Doppler echocardiography
多普勒频移 Doppler shift
导航装置 Homing devices
导管超声 Catheter ultrasound
机械扇形扫描 Mechanical sector scan
纤维瘤 Fibroma
自由扫查 Free-hand scanning
自动边缘检测 Automatic border detection
自然组织谐波成像 Native tissue harmonic imaging
色彩倒错 Color aliasing
血栓 Thrombus
血流彩色成像 Color flow mapping
血管肉瘤 Angiosarcoma
血管腔内超声成像 Intravascular ultrasound imaging
负荷超声心动图 Stress echocardiography
体元模型 Voxel model
声束形成 Beam forming
声阻抗 Acoustic impedance
声学定量 Acoustic quantification
声学速度 Acoustic velocity
声强 Acoustic intensity
层流 Laminar flow
希阿利网 Chiari network
快速傅里叶变换 Fast Fourier transform
折射 Refraction
时间分辨率 Temporal resolution
时间增益补偿 Time gain compensation
时域法 Time-domain method
纵向分辨率 Longitudinal resolution
纵波 Longitudinal wave
肛管超声 Anal endosonography
近场 Near field
进入曲线 Wash-in curve
远场 Far field
连续式多普勒 Continuous wave Doppler, CW Doppler
连续注射 Continuous injection
乳头肌 Papillary muscle, PM
单脉冲删除 Single pulse cancellation
取样容积 Sample volume
图像分辨率 Image resolution
实时频谱分析 Real-time spectral analysis
房间隔缺损 Atrial septal defect, ASD
房间隔脂肪瘤样肥厚 Lipomatous hypertrophy of the atrial septum
房间隔瘤 Atrial septal aneurysm
欧氏瓣 Eustachian valve
空壳 Hollow core
空间分辨率 Spatial resolution
组织多普勒成像技术 Tissue Doppler imaging
组织多普勒超声心动图 Tissue Doppler echocardiography
经心腔内超声心动图 Intracardiac echocardiography
经阴道彩色多普勒超声 Transvaginal color Doppler ultrasound
经食道超声心动图 Transesophageal echocardiography
限制性室间隔缺损 Restrictive ventricular septal defect
非致密性心室心肌二维超声心动图 Two-dimensional echocardiography of non-compaction of the ventricular myocardium
肺动脉 Pulmonary artery
肺动脉狭窄 Pulmonary stenosis
肺动脉高压 Pulmonary hypertension
肺动脉瓣 Pulmonary valve
肺静脉异位引流 Anomalous pulmonary venous drainage
顶端侧方扫描式
顶端旋转扫描式
临床基础 Clinical basics
冠心病 Coronary heart disease
冠状动脉内超声显像 Intracoronary ultrasound imaging
冠状动脉异常 Coronary artery anomalies
冠状动脉血流储备 Coronary flow reserve
冠状动脉起源异常 Anomalous origin of the coronary arteries
冠状静脉窦 Coronary sinus
厚度分辨率 Slice-thickness resolution
室上嵴 Supraventricular crest
室间隔缺损 Ventricular septal defect, VSD
室间隔膜部瘤 Membranous ventricular septal aneurysm
界面 Interface
相干对比造影成像 Coherent contrast imaging
相干图像形成技术 Coherent image formation
类脂 Lipoid
背向散射积分 Integrated backscatter
背景噪音 Background noise
脉冲反相谐波成像 Pulse inversion harmonic imaging
脉冲式多普勒 Pulsed wave Doppler
脉冲重复频率 Pulse repetition frequency, PRF
衍射 Diffraction
重叠 Overlap
房室瓣 Atrioventricular valve
扇形扫描 Sector scan
振幅 Amplitude
捆扎型纤维蛋白分子
旁瓣效应 Side lobe effect
浦肯野纤维瘤
消除曲线 Wash-out curve
涡流 Vortex flow
特定定点造影剂 Targeted contrast agent
留间隔器
缺血性预适应 Ischemic preconditioning
胸骨旁短轴切面 Parasternal short-axis view
能量对比成像 Power contrast imaging
脂肪瘤 Lipoma

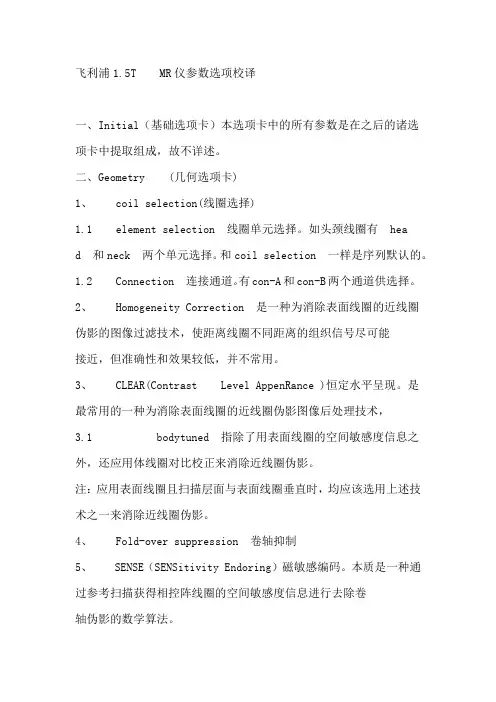

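Philips 1.5 T MR Scanner Parameter Options (Annotated Translation)

I. Initial (basic tab)

All parameters on this tab are drawn from the tabs that follow, so they are not described separately here.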

II. Geometry (geometry tab)

1. Coil selection

1.1 Element selection: selection of the coil elements.

For example, the head-neck coil offers two elements: head and neck.

Like coil selection, this is set by the sequence default.

1.2 Connection: the connection channel.

Two channels are available: con-A and con-B.

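2. Homogeneity Correction: an image-filtering technique for removing the near-coil artifact of surface coils. It makes the signal from tissues at different distances from the coil as uniform as possible. Its accuracy and effectiveness are limited, however, and it is rarely used.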

3. CLEAR (Constant LEvel AppeaRance)

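This is the most commonly used post-processing technique for removing the near-coil artifact of surface coils.

3.1 Body tuned: in addition to the spatial sensitivity information of the surface coil, a body-coil-based contrast correction is applied to remove near-coil artifacts.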

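Note: whenever a surface coil is used and the scan plane is perpendicular to the surface coil, select one of the techniques above to remove the near-coil artifact.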

4. Fold-over suppression: suppression of wrap-around (fold-over) artifacts.

5. SENSE (SENSitivity Encoding)

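In essence, SENSE is a mathematical algorithm built on the spatial sensitivity profiles of the phased-array coil elements, which are obtained from a reference scan. Acquiring fewer phase-encoding lines shortens scan time but produces fold-over (aliasing). SENSE uses the sensitivity profiles to unfold it.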
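Below is a minimal one-dimensional sketch of SENSE unfolding for a reduction factor of 2. It assumes two synthetic, noise-free coil sensitivity profiles and follows the textbook Cartesian formulation, not Philips' implementation. Skipping every other phase-encoding line folds pixel y onto pixel y + N/2. The known sensitivities then give a small linear system for each folded pixel, which separates the two overlapping pixels. The function names and the coil profiles are hypothetical.

```python
import numpy as np

def fold_r2(coil_images):
    """Simulate R = 2 undersampling by keeping every other k-space line per coil.

    For a length-N profile, the inverse FFT of the even k-space samples is a
    length-N/2 image in which pixel y holds the coil-weighted sum of pixels
    y and y + N/2 (the fold-over artifact).
    """
    k = np.fft.fft(coil_images, axis=1)
    return np.fft.ifft(k[:, ::2], axis=1)

def sense_unfold_r2(aliased, sens):
    """Unfold R = 2 aliasing using known coil sensitivities (least squares per folded pixel)."""
    n_coils, half = aliased.shape
    out = np.zeros(2 * half, dtype=complex)
    for y in range(half):
        # Encoding matrix: sensitivity of every coil at the two superimposed positions.
        S = sens[:, [y, y + half]]
        rho, *_ = np.linalg.lstsq(S, aliased[:, y], rcond=None)
        out[y], out[y + half] = rho
    return out

# Example: an 8-pixel object seen by two coils with opposite linear sensitivity ramps.
N = 8
rho_true = np.array([0.0, 1.0, 3.0, 2.0, 5.0, 4.0, 1.0, 0.0])
pos = np.linspace(0.0, 1.0, N)
sens = np.stack([1.0 - 0.8 * pos, 0.2 + 0.8 * pos])   # hypothetical coil profiles
aliased = fold_r2(sens * rho_true)                     # shape (2, 4): folded coil images
recon = sense_unfold_r2(aliased, sens)
print(np.round(recon.real, 6))
```

The unfolding only works where the coil profiles differ between the two superimposed positions, which is why the sensitivity reference scan matters. With noisy data, the conditioning of each small matrix determines how much noise the unfolding amplifies.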

6. Stacks (scan boxes)

6.1 Type

Two options are available: parallel and radial.

6.2 Slice orientation

6.3 Fold-over direction: the phase-encoding direction.

6.4 Fat shift direction: the chemical-shift direction.

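The chemical shift artifact lies along the frequency-encoding direction. For this reason, the options the system offers are always perpendicular to the phase-encoding direction.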
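The size of the fat shift along the frequency-encoding axis can be estimated from the field strength and the receiver bandwidth per pixel. The sketch below assumes a proton gyromagnetic ratio of 42.577 MHz/T and a fat-water chemical shift of about 3.5 ppm; literature values typically range from about 3.3 to 3.5 ppm. The function names are illustrative, and the value shown on the scanner may be computed differently.

```python
import math

GAMMA_BAR_MHZ_PER_T = 42.577   # proton gyromagnetic ratio / (2*pi), MHz per tesla
FAT_WATER_PPM = 3.5            # approximate fat-water chemical shift (assumed)

def fat_water_shift_hz(b0_tesla, ppm=FAT_WATER_PPM):
    """Fat-water frequency difference in Hz (MHz multiplied by ppm gives Hz)."""
    return GAMMA_BAR_MHZ_PER_T * b0_tesla * ppm

def fat_shift_pixels(b0_tesla, bandwidth_hz_per_pixel, ppm=FAT_WATER_PPM):
    """Displacement of fat relative to water along the frequency-encoding axis, in pixels."""
    return fat_water_shift_hz(b0_tesla, ppm) / bandwidth_hz_per_pixel

# At 1.5 T the shift is roughly 224 Hz; at about 112 Hz per pixel fat moves about 2 pixels.
print(round(fat_water_shift_hz(1.5), 1))
print(round(fat_shift_pixels(1.5, 112.0), 2))
```

A lower bandwidth per pixel improves signal-to-noise ratio but increases the displacement, so the trade-off is worth checking whenever the fat shift direction is changed.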

7. Minimum number of packages: the minimum number of acquisition packages.

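This parameter matters mainly for brain T2WI-FLAIR sequences. Cerebrospinal fluid from outside the imaged slices must not flow into them, because that would degrade fluid suppression. The number of excited slices must therefore be greater than the number of imaged slices.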

Manual

Contents: Introduction; Feature Overview; GUI Overview; FAST and Detailed View; Learning and Automatic Parametrisation; Fine Tune Your Sound; Style Modules; Output Monitoring; Global Control Section; Settings

Introduction

FAST Limiter is an artificial intelligence (AI) powered true peak limiter plug-in that helps to add the right finishing touches to your audio tracks.

Like all plug-ins of the FAST family, FAST Limiter has been designed with a simple goal in mind: get great results, FAST!

Feature Overview

AI Powered Limiting
FAST Limiter uses AI technology to find the right limiter parameters for your audio material within seconds in order to get your tracks ready for publishing.

FAST View and Detailed View
The user interface has two view modes: FAST View provides the customised controls you need to keep in the creative flow, while Detailed View provides deeper control over parameters.

Flavour Buttons
Three buttons allow you to choose between a Modern, Neutral or Aggressive limiting style.

Style Modules
Four different Style Modules offer an easy way to tweak the spectral and temporal characteristics of your audio material at the touch of a button.

Profiles
Different profiles allow you to tell FAST Limiter what kind of genre the plug-in is dealing with. This ensures that the processing is well adapted to your audio material.

GUI Overview

Areas: Limiter Display, Learn Section.

Learn Button: Start the learning process.
Waveform Display: Monitor the limiter's impact on the signal.
Gain: Control the input gain.
Gain Reduction: Monitor the applied gain reduction.
Meter: Monitor your input and output level and the applied gain reduction.
Style Modules: Tweak the spectral and temporal characteristics of your audio material.
FAST/Detailed: Switch the GUI between FAST View and Detailed View.
Flavour Buttons: Choose between a Modern, Neutral or Aggressive limiting style.
Profile Dropdown: Select a genre that best matches your audio material or choose a reference track.
Quality Indicators: Check the publishing quality of your track.
Hover over the indicator icons to show hints regarding the final publishing checks.

FAST and Detailed View

You can easily switch between FAST View and Detailed View by clicking on the two buttons in the upper left corner of the interactive equaliser display.

FAST View is designed to give you optimised controls that keep you in the creative zone. In this mode, you only see the controls you need, so you can make quick tweaks and keep moving throughout your music-making process. This is the default view when opening the plug-in.

Detailed View is designed for users who want maximum freedom in making changes as they see fit. All parameters can be freely modified, allowing you to fine tune the results to your personal taste.

The user interface of FAST Limiter has two view modes: FAST View provides the customised controls you need, based on the content of your material. Detailed View provides deeper control over parameters, so you can adjust settings to your own taste.

Done! Once learning is completed, FAST Limiter sets well-balanced limiter parameters and activates the Style Modules. The interactive Waveform Display now shows the gained input signal (light grey), the output signal (green) and the gain reduction curve (red).

If you want FAST Limiter to learn from a different section of your input signal, simply start audio playback from there and click the Relearn button. You don't have to click the Relearn button when switching between Flavours or Genre Profiles.

Learning and Automatic Parametrisation

The heart of FAST Limiter is its ability to automatically find the most suitable limiter parameters for your signal.
Therefore, choosing a profile and starting the learning process will typically be the first thing you want to do when working with the plug-in. The learning process automatically sets all limiter parameters. It also sets and activates the Style Modules that allow you to tweak the spectral and temporal characteristics of your audio material.

1. Choose a Genre Profile that best matches your input signal. If you don't find a suitable profile, simply select "Universal". You can also set a music file from your hard drive as a target by clicking "Reference Track" in the dropdown menu.
2. Start playback in your DAW. Make sure to select a relatively loud segment of your track (e.g. the refrain).
3. Press the Learn button to start the learning process. A progress bar inside the Learn button and a learning animation inside the Style Modules show how the learning process is progressing.

Fine Tune Your Sound

Working in FAST View

Adapt the Gain
You can use the Gain handle to set the amount of input gain. Moving the handle up raises the level of the input signal, so more peaks are limited. This increases the loudness and reduces the dynamics of the output signal. You will instantly see the impact on the waveform of the limited output signal in the Waveform Display.

Choose a Flavour
You can use the three Flavour buttons to quickly change the character of your limited sound. Once you have settled on a Flavour, you can fine-tune the results further. In FAST View, use the Gain handle and the basic Style Modules; in Detailed View, additional parameters are available. Read the section Style Modules for more details.

Change Profiles
You can always change the selected Genre Profile without needing to restart the learning process. Please note that manual adjustments to parameters will not be kept.
Changing your profile resets all parameters to their default values.

Level Match
Enable this to level-match the processed output with the dry input signal for an accurate A/B comparison. This helps you objectively compare the sound of the original and processed signals without being (positively) biased by the louder level of the gained output signal.

Working in Detailed View

Limit
Set the maximum signal level that is allowed to pass through the limiter. This is a hard limit for the gained signal: all values larger than this value are limited. It represents the highest possible true peak level of the output signal.

Speed
Set the speed of the limiter. The speed parameter controls the temporal characteristics (attack and release) of the limiter.
fast: The gain reduction quickly returns to zero after the signal has been limited. This setting preserves more transients and leads to a louder signal, but may cause audible distortion for heavily limited signals.
slow: The gain reduction slowly returns to zero after the signal has been limited. This setting leads to smooth limiting results, but may not be well suited to highly transient signals.
auto: In auto mode, FAST Limiter automatically adapts to the characteristics of the input signal. Auto mode ensures that the limiting process does not create audible distortion, even for more extreme gain settings. This mode can be used for any type of signal.

Style Modules

Bass
Enhance the low end of your signal. This can be helpful if the bass feels muddy or weak. Set a cut-off frequency to apply the bass enhancement only below this frequency.

Resonances
Improve the spectral balance and tame resonances. This module is great for giving your track a final, subtle polish. Set the frequency resolution for the resonance processing.

Saturation
Add saturation and increase the apparent loudness of your track without increasing peak level.
This effect feels a bit like inflating the signal. Choose a character for the saturation effect.

Transients
Tweak transient components and preserve the punch of your signal. Select the sensitivity for the transient tweaking effect.

The four Style Modules offer an easy way to tweak the spectral and temporal characteristics of your audio material. Each module comes with the following:

- a main parameter to control its overall impact;
- an additional parameter to tweak the underlying processing (only available in Detailed View);
- a visualisation showing the current effect on the signal.

All modules can be enabled, disabled, or pinned to remain expanded.

Output Monitoring

Loudness: Integrated loudness of the observed output signal in LUFS.
Loudness Range: Loudness variation between the loudest and quietest sections of your track.
Max. Peak: Maximum true peak value of the observed output signal.
Pause/Play icon: Start or pause the loudness measurement.
Restart icon: Restart the measurement of loudness and peak. Restarting the measurement can be helpful if you have made significant changes to your mix. For the most precise results on a whole track, restart the measurement at the beginning of your track and let it run through to the end.

Quality Indicators
FAST Limiter constantly monitors the loudness and peak level of your track. The indicators show whether your track is ready for publishing: large indicators in FAST View, and small indicators next to the measurement values in Detailed View.
This value is looking good for publishing. Everything is good to go!
There could be a potential issue with your track. Hover over the icon to learn more about the potential issue.
FAST Limiter has not yet collected enough information about your track.
Continue playback and wait until the measurement becomes valid.

By default, FAST Limiter assumes that you are publishing your tracks to a streaming platform such as Spotify, YouTube or Apple Music, and therefore uses a reference loudness level of -14 LUFS. If you want to select an alternative publishing target (CD or broadcasting), you can switch the reference loudness on the settings page (see Settings).

Global Control Section

Bypass
Undo/Redo
Save and Load Presets
To save a preset (all parameter values), click the Save button in the Control Section. To load a saved preset, choose its name from the preset dropdown.
To delete or rename a preset, go to the preset folder with your local file explorer. You can also easily share your presets among different workstations. All presets are saved with the file extension *.spr to the following folders:
OSX: ~/Library/Audio/Presets/Focusrite/FASTLimiter
Win: Documents\Focusrite\FASTLimiter\Presets\

Settings

Click the small cog wheel to access the settings page of FAST Limiter (e.g. to restart the Guided Tour or to check your subscription status).

Show Detailed View on Start-up (global setting): If you prefer working in Detailed View, enable this setting. FAST Limiter will then start up in Detailed View by default.
Show Tooltips (global setting): Disable this option if you want to hide tooltips.
Use OpenGL (global setting): If you are experiencing graphics problems (e.g. rendering problems), you can try disabling OpenGL graphics acceleration.
Loudness Target: Select a publishing target (Streaming, CD, Broadcasting). This target will be used for the quality indicators.
Take Guided Tour: Click this button to restart the Guided Tour. Please note that all parameters will be reset to their default values when a new tour is started!
License Information: This section shows the license information for your plug-in.
Help Center: Visit the Help Center to, for example, manage your subscriptions or download new plug-ins and the latest updates.
License Management
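The sketch below illustrates what the Limit and Speed parameters control, using a generic peak limiter with instant attack and exponential release. It is not FAST Limiter's algorithm. It works on sample peaks only and has no look-ahead or oversampling, whereas a true peak limiter also estimates inter-sample peaks. The function and parameter names are hypothetical.

```python
import numpy as np

def simple_peak_limiter(x, limit_db=-1.0, release_ms=50.0, fs=48000):
    """Generic peak limiter: instant attack, exponential release, sample peaks only.

    Returns the limited signal and the per-sample gain (1.0 = no reduction).
    """
    limit = 10.0 ** (limit_db / 20.0)                  # dBFS -> linear amplitude
    alpha = np.exp(-1.0 / (release_ms * 1e-3 * fs))    # per-sample release coefficient
    gain = 1.0
    y = np.empty(len(x))
    gains = np.empty(len(x))
    for n, s in enumerate(x):
        peak = abs(s)
        # Gain that would bring this sample exactly down to the limit.
        target = min(1.0, limit / peak) if peak > 0.0 else 1.0
        if target < gain:
            gain = target                                  # attack: clamp at once (no look-ahead)
        else:
            gain = alpha * gain + (1.0 - alpha) * target   # release: glide back toward target
        y[n] = s * gain
        gains[n] = gain
    return y, gains

# Example: a 1 kHz tone whose first half peaks at 2.0 (about +6 dBFS) and second half at 0.5.
fs = 48000
t = np.arange(4800) / fs
x = 0.5 * np.sin(2 * np.pi * 1000 * t)
x[:2400] *= 4.0
y, gains = simple_peak_limiter(x, limit_db=-1.0, release_ms=50.0, fs=fs)
print(f"output peak {np.max(np.abs(y)):.3f}, deepest gain {gains.min():.3f}")
```

A shorter `release_ms` lets the gain recover faster. That gives a louder result but is more prone to distortion, which mirrors the trade-off described for the fast and slow Speed settings.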

Sample Text for Land-Feature Interpretation (English)

In geographic information systems (GIS), land cover classification plays a crucial role in mapping and analyzing the Earth's surface. Land cover classification is the process of categorizing types of land surface, such as forests, water bodies, urban areas and agricultural fields, by their spectral, spatial, and temporal characteristics. This classification is typically done using remotely sensed data acquired from satellites or aerial imagery.

The process involves several steps: image preprocessing, feature extraction, and application of a classification algorithm. Image preprocessing includes tasks such as radiometric and geometric correction to enhance the quality of the images. Feature extraction identifies relevant information in the images, such as texture, color, and shape, which then serves as input variables for the classification algorithm.

Classification algorithms include supervised, unsupervised, and hybrid techniques, each with its strengths and weaknesses. Supervised classification requires training samples for each land cover class. Unsupervised classification clusters pixels by their spectral properties without prior knowledge. Hybrid techniques combine aspects of both for improved accuracy.

Once classified, the results are validated against ground truth data to assess classification accuracy. The resulting land cover maps are valuable for applications including environmental monitoring, urban planning, natural resource management, and disaster response.

Chinese translation: 在地理信息系统(GIS)中,地物覆盖分类在地表地图制作和分析中起着至关重要的作用。
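The sketch below is a minimal illustration of supervised classification followed by accuracy assessment. It implements a minimum-distance-to-means classifier, which is one classic supervised technique among many. The two-band reflectance values and class names are made up for illustration. Real workflows use many bands, preprocessed imagery, and much larger training and validation sets.

```python
import numpy as np

def train_min_distance(samples, labels):
    """Mean spectrum of each land-cover class, computed from labelled training pixels."""
    classes = np.unique(labels)
    means = np.array([samples[labels == c].mean(axis=0) for c in classes])
    return classes, means

def classify(pixels, classes, means):
    """Assign each pixel to the class whose mean spectrum is closest (Euclidean distance)."""
    d = np.linalg.norm(pixels[:, None, :] - means[None, :, :], axis=2)
    return classes[np.argmin(d, axis=1)]

def overall_accuracy(predicted, truth):
    """Fraction of validation pixels whose predicted class matches the ground truth."""
    return float(np.mean(predicted == truth))

# Hypothetical two-band (red, near-infrared) reflectances for three classes.
train_x = np.array([[0.05, 0.03], [0.06, 0.02],
                    [0.05, 0.45], [0.04, 0.50],
                    [0.20, 0.25], [0.22, 0.28]])
train_y = np.array(["water", "water", "vegetation", "vegetation", "urban", "urban"])
classes, means = train_min_distance(train_x, train_y)

# Validation pixels with ground-truth labels.
test_x = np.array([[0.05, 0.04], [0.06, 0.40], [0.21, 0.24]])
truth = np.array(["water", "vegetation", "urban"])
pred = classify(test_x, classes, means)
print(list(pred), overall_accuracy(pred, truth))
```

An unsupervised alternative would cluster the same pixels (for example with k-means) and assign class names to the clusters afterwards. The validation step against ground truth stays the same.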

Key Points for Professional English Translation, School of Technical Physics, Class of 2008 (Laser Track), 2011. Author: Shao Chenyu

Electromagnetic 电磁的
principle 原则
principal 主要的
macroscopic 宏观的
microscopic 微观的
differential 微分
vector 矢量
scalar 标量
permittivity 介电常数
photons 光子
oscillation 振动
density of states 态密度
dimensionality 维数
transverse wave 横波
dipole moment 偶极矩
diode 二极管
monochromatic 单色
temporal 时间的
spatial 空间的
velocity 速度
wave packet 波包
be perpendicular to 线垂直
be normal to 线面垂直
isotropic 各向同性的
anisotropic 各向异性的
vacuum 真空
assumption 假设
semiconductor 半导体
nonmagnetic 非磁性的
considerable 大量的
ultraviolet 紫外的
diamagnetic 抗磁的
paramagnetic 顺磁的
antiferromagnetic 反铁磁的
ferromagnetic 铁磁的
negligible 可忽略的
conductivity 电导率
intrinsic 本征的
inequality 不等式
infrared 红外的
weakly doped 弱掺杂
heavily doped 重掺杂
a second derivative in time 对时间二阶导数
vanish 消失
tensor 张量
refractive index 折射率
crucial 关键的
quantum mechanics 量子力学
transition probability 跃迁几率
delve 研究
infinite 无限的
relevant 相关的
thermodynamic equilibrium 热力学平衡(动态热平衡)
fermions 费米子
bosons 玻色子
potential barrier 势垒
standing wave 驻波
travelling wave 行波
degeneracy 简并
converge 收敛
diverge 发散
phonons 声子
singularity 奇点(奇异值)
vector potential 矢势
wave-particle duality 波粒二象性
homogeneous 均匀的
elliptic 椭圆的
reasonable 公平的,合理的
reflector 反射器
characteristic 特性
prerequisite 必要条件
quadratic 二次的
predominantly 主要地
Gaussian beams 高斯光束
azimuth 方位角
evolve 演化
spot size 光斑尺寸
radius of curvature 曲率半径
convention 惯例,约定
hyperbola 双曲线
hyperboloid 双曲面
radii 半径(复数)
asymptote 渐近线
apex 顶点
rigorous 严格的
manifestation 体现,表明
wave diffraction 波衍射
aperture 孔径
complex beam radius 复光束半径
lenslike medium 类透镜介质
be adjacent to 与之相邻
confocal beam 共焦光束
a unity determinant 值为1的行列式
waveguide 波导
illustration 说明
induction 归纳
symmetric 对称的
steady-state 稳态
be consistent with 与之一致
solid curves 实线
dashed curves 虚线
be identical to 相同
eigenvalue 本征值
noteworthy 值得关注的
counteract 抵消
reinforce 加强
the modal dispersion 模式色散
the group velocity dispersion 群速度色散
channel 信道
repetition rate 重复率
overlap 重叠
intuition 直觉
material dispersion 材料色散
information capacity 信息容量
feed into 注入
derive from 由之产生
semi-intuitive 半直觉
intermode mixing 模式混合
pulse duration 脉宽
mechanism 机制
dissipate 耗散
designate by 命名为
to a large extent 在很大程度上
etalon 标准具
archetype 原型
interferometer 干涉仪
be attributed to 归因于
roundtrip 一个往返
infinite geometric progression 无穷等比级数
conservation of energy 能量守恒
free spectral range 自由光谱区
reflection coefficient (fraction of the intensity reflected) 反射系数
transmission coefficient (fraction of the intensity transmitted) 透射系数
optical resonator 光学谐振腔
unity 归一
optical spectrum analyzer 光谱分析仪
frequency separations 频率间隔
scanning interferometer 扫描干涉仪
sweep 扫描
replica 复制品
ambiguity 不确定
simultaneous 同步的
longitudinal laser mode 纵模
denominator 分母
finesse 精细度
the limiting resolution 极限分辨率
the width of a transmission bandpass 透射带宽
collimated beam 准直光束
noncollimated beam 非准直光束
transient condition 瞬态情况
spherical mirror 球面镜
locus (loci) 轨迹
exponential factor 指数因子
radian 弧度
configuration 结构,配置
intercept 截距
back and forth 反复
spatial mode 空间模式
algebra 代数
in practice 在实际中
symmetrical 对称的
a symmetrical confocal resonator 对称共焦谐振腔
criteria 准则
concentric 同心的
biperiodic lens sequence 双周期透镜组序列
stable solution 稳定解
equivalent lens 等效透镜
verge 边缘
self-consistent 自洽
reference plane 参考平面
off-axis 离轴
shaded area 阴影区
clear area 空白区
perturbation 扰动
evolution 演化
decay 衰减
unimodular matrix 幺模矩阵
discrepancy 差异
longitudinal mode index 纵模指数
resonance 共振
quantum electronics 量子电子学
phenomenon 现象
exploit 利用
spontaneous emission 自发辐射
initial 初始的
thermodynamic 热力学
in phase 同相位的
population inversion 粒子数反转
transparent 透明的
threshold 阈值
predominate over 占主导地位
monochromaticity 单色性
spatial and temporal coherence 时空相干性
by virtue of 凭借,利用
directionality 方向性
superposition 叠加
pump rate 泵浦速率
shunt 分流
corona breakdown 电晕击穿
audacity 大胆
versatile 用途广泛的
photoelectric effect 光电效应
quantum detector 量子探测器
quantum efficiency 量子效率
vacuum photodiode 真空光电二极管
photoelectric work function 光电功函数
cathode 阴极
anode 阳极
formidable 艰巨的,令人生畏的
irrespective 无关的
impinge 撞击
in turn 依次
capacitance 电容
photomultiplier 光电倍增管
photoconductor 光敏电阻
junction photodiode 结型光电二极管
avalanche photodiode 雪崩光电二极管
shot noise 散粒噪声
thermal noise 热噪声

1. In this chapter we consider Maxwell's equations and what they reveal about the propagation of light in vacuum and in matter. We introduce the concept of photons and present their density of states. The density of states is a rather important property, and not only for photons, so we approach this quantity in a rather general way. We will use the density of states later also for other (quasi-)particles, including systems of reduced dimensionality. In addition, we introduce the occupation probability of these states for various groups of particles.
在本章中,我们讨论麦克斯韦方程组,以及它们所揭示的光在真空和物质中的传播规律。

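Chinese Journal of Eco-Agriculture, Jun. 2022, Vol. 30, No. 6: 875−888
DOI: 10.12357/cjea.20210820

LIU B S, HUANG L H, HUANG J X, HUANG G Z, JIANG X T. Research progress toward and emission reduction measures of ammonia volatilization from farmlands in China[J]. Chinese Journal of Eco-Agriculture, 2022, 30(6): 875−888

Research Progress toward and Emission Reduction Measures of Ammonia Volatilization from Farmlands in China*

LIU Boshun(1,2), HUANG Lihua(1,3)**, HUANG Jinxin(1,3), HUANG Guangzhi(1,2), JIANG Xiaotong(1)
(1. Northeast Institute of Geography and Agroecology, Chinese Academy of Sciences, Changchun 130102; 2. University of Chinese Academy of Sciences, Beijing 100049; 3. Jilin Da'an Farmland Ecosystem National Field Scientific Observation and Research Station, Da'an 131317)

Abstract: Ammonia volatilization is the main pathway of nitrogen fertilizer loss from farmland in China. It lowers nitrogen use efficiency and also causes ecological and environmental problems such as haze, atmospheric dry and wet deposition, and the greenhouse effect.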

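This paper briefly reviews research on farmland ammonia volatilization in China over the past 10 years (2011–2020). Overall, the field has developed rapidly and become markedly more international, but its influence still needs to grow. China is vast, so farmland ammonia volatilization varies greatly in space and time. It is closely related to crop type, fertilization, climate, soil, and crop growth stage, so controlling it requires science-based nitrogen management adapted to local conditions. Methods for measuring farmland ammonia volatilization have developed over more than 200 years, from early indirect estimation to chemical measurement and spectroscopic analysis. Measurement accuracy and coverage have improved greatly as a result.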

Part 1: Basic Terms with English–Chinese Translations

1. 时谱: time-spectrum
In this paper, the time-spectrum characteristics of temporal coherence of the double-mode He-Ne laser are analyzed and studied, mainly theoretically, and the relevant time-spectrum formulas and experimental results are given. Finally, the article also discusses possible applications of the TC time-spectrum of the double-mode He-Ne laser.
本文重点从理论上分析研究了双纵模He-Ne激光时间相干度的时谱特性(以下简称TC时谱特性),给出了相应的时谱公式与实验结果,并就双纵模He-Ne激光TC时谱特性的可能应用进行了初步的理论探讨。

2. 光谱: spectra
Study on the Applications of Resonance Rayleigh Scattering Spectra in Natural Medicine Analysis
共振瑞利散射光谱在天然药物分析中的应用研究

3. 光谱仪: spectrometer
Study on Signal Processing and Analysing System of Micro Spectrometer
微型光谱仪信号处理与分析系统的研究

4. 单帧: single frame
Composition Method of Color Stereo Image Based on Single Frame Image
基于单帧图像的彩色立体图像的生成

5. 探测系统: detection system
Research on Image Restoration Algorithms in Imaging Detection System
成像探测系统图像复原算法研究

6. 超光谱: hyper-spectral
Research on Key Technology of Hyper-Spectral Remote Sensing Image Processing
超光谱遥感图像处理关键技术研究

7. 多光谱: multispectral, multi-spectral, multi-spectrum
Simple Method to Compose Multispectral Remote Sensing Data Using BMP Image File
用BMP图像文件合成多光谱遥感图像的简单方法

8. 色散: dispersion
Researches on Adaptive Technology of Compensation for Polarization Mode Dispersion
偏振模色散动态补偿技术研究

9. 球差: spherical aberration
The influence of thermal effects in a beam control system and spherical aberration on the laser beam quality
光束控制系统热效应与球差对激光光束质量的影响

10. 彗差: coma
The maximum sensitivity of coma aberration evaluation is about λ/25.
估值波面彗差的极限灵敏度为λ/25。

11. 焦距: focal distance
Absolute errors of the measured output focal distance range from –120 to 120 μm.
利用轴向扫描法确定透镜出口焦距时的绝对误差在–120—120μm之间。

近50a三峡库区汛期极端降水事件的时空变化刘晓冉;程炳岩;杨茜;郭渠;张天宇【摘要】利用三峡库区35个台站1961-2010年汛期(5-9月)的逐日降水量资料,首先定义不同台站的极端降水量阈值,统计各站近50 a逐年汛期极端降水事件的发生频次,进而分析其时空变化特征.结果表明:三峡库区汛期极端降水事件发生频次的最主要空间模态是主体一致性,同时存在东西和南北相反变化的差异.三峡库区汛期极端降水事件发生频次具有较大的空间差异,可分为具有不同变化特点的5个主要异常区.滑动t检验表明,三峡库区西南部区代表站巴南的极端降水事件在1974年后发生了一次由偏多转为偏少的突变,北部区代表站北碚在1981年后和1993年后分别发生了由偏少转为偏多和由偏多到偏少的突变,中部区代表站武隆在1984年后发生了一次由偏多转为偏少的突变.结合最大熵谱和功率谱分析表明,近50 a来各分区汛期极端降水事件发生频次的周期振荡不太一致,三峡库区东北部区代表站宜昌、北部区代表站北碚和中部区代表站武隆分别存在5、2.4和8.3a的显著周期.%Based on the daily precipitation data in flood season(from May to September) in 1961 -2010 from 35 stations over Three Gorges Reservoir area, the extreme precipitation threshold value for all stations are determined firstly, then the frequency of extreme precipitation event in the flood season are counted and their temporal and spatial characteristics are analyzed. Consistent a-nomaly distribution is the main spatial model of extreme precipitation event frequency in flood season over Three Gorges Reservoir area. The spatial distribution of extreme precipitation event frequency is complex with anomaly difference between in the south and west, and in the east and west of Three Gorges Reservoir area, which canbe divided into five main regions. The moving t-test analysis shows that there are abrupt changes of Banan in 1974, Beibei in 1981 and 1993 , and Wulong in 1984 for the extreme precipitation event frequency. The Maximum Entropy Spectral and Estimation Power Spectral analysis shows that the periodic oscillations of these regions are not consistent in recent 50 a. 
The distinct periodic oscillation of the extreme precipitation event frequency of Yichang, Beibei and Wulong is 5 , 2.4 and 8.3 a, respectively.【期刊名称】《湖泊科学》【年(卷),期】2012(024)002【总页数】8页(P244-251)【关键词】三峡库区;极端降水事件;时空变化;旋转主成分【作者】刘晓冉;程炳岩;杨茜;郭渠;张天宇【作者单位】重庆市气候中心,重庆401147;中国科学院大气物理研究所,北京100029;重庆市气候中心,重庆401147;重庆市气象科学研究所,重庆401147;重庆市气候中心,重庆401147;重庆市气候中心,重庆401147【正文语种】中文近年来,高温、干旱、洪涝、台风、暴雪等极端气候事件对社会经济和生态环境造成影响和危害越来越大,全球变暖背景引发的极端气候事件增多增强趋势已成为各国政府和社会各界关注的焦点.尤其是极端降水事件,其频率和强度的变化是导致洪涝灾害的主要因素,往往造成严重的经济损失和社会危害,受到专家学者和社会的普遍关注[1-3].有研究表明强降水事件在欧洲[4]、美国[5]、印度[6]等国家和地区有所增加.Gao等[7]对东亚地区的气候模拟表明在二氧化碳倍增的情况下,暴雨雨日将增加[7].许多学者对近50 a中国的极端降水变化进行分析研究,认为中国的极端降水变化态势与全球的态势基本一致,但表现出明显的区域性[8-10]和季节变化[11]特点.在全球变暖背景下中国的微量雨日普遍减少,但大暴雨日事件增多[12-14].鲍名等[15]对中国暴雨年代际变化的研究发现,长江流域暴雨有增多的趋势,华北地区暴雨则有减少的趋势.翟盘茂等[16]提出了定义极端值和极端阈值的方法,研究中国北方近50年温度和降水极端事件的变化.翟盘茂等[17-18]还指出中国降水强度普遍趋于增强,长江流域及以南地区、西北地区的极端强降水事件增多趋势明显,华北地区虽然极端降水事件频数明显减少,但极端降水量占总降水量的比例仍有所增加.Tang等[19]研究了中国持续强降水事件的气候特征,对其频数、强度和雨带等进行归类.闵屾等[20]分析了中国极端降水事件区域性和持续性特征,发现长江以南地区夏季极端降水的区域性与持续性均较好,容易导致区域性洪涝灾害发生.王志福等[21]分析了中国不同持续时间的极端降水事件的变化特征,指出持续2 d以上极端降水事件在长江中下游流域、江南地区和高原东部有显著增多和增强的趋势,而在华北和西南地区有减少和减弱趋势.还有很多学者着重研究了我国各流域的极端降水事件的变化特征[22-26].三峡库区位于四川盆地与长江中下游平原的结合部,西起重庆江津,东至湖北宜昌,全长600 km,为跨长江两岸数公里的狭长区域.长江三峡水利枢纽工程举世瞩目,三峡库区是长江中下游地区的生态环境屏障和西部生态环境建设的重点,三峡库区的区域气候变化及其影响成为人们日益关注的科学问题[27].近些年在全球变暖背景下,三峡库区的气候变化规律方面研究取得了一些进展,学者们对三峡库区的基本气候特征[28-29]、气象灾害变化趋势[30]以及未来21世纪库区的气候变化预估[31-32]等方面进行了研究,这对开展三峡库区的气候变化适应措施具有重要的借鉴意义.但目前对三峡库区极端降水事件的变化规律还缺乏深入认识,本文利用百分位阈值定义三峡库区极端降水事件,并分析其发生频次的时空变化特征,这对于三峡库区防灾减灾和应对气候变化具有参考意义.在我国通常把日降水量超过50 mm的降水事件称为暴雨,日降水量超过25 mm 
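In China, events with daily precipitation above 50 mm are usually called rainstorms, and those above 25 mm are called heavy rain. Climate differs markedly between regions, however, so such absolute thresholds are not comparable across regions. International studies of climate extremes therefore usually define extreme events with a chosen percentile as the threshold [2-6]. This paper uses daily precipitation data from 35 stations in the Three Gorges Reservoir area for 1961-2010. For each station and each year, we compute the 95th percentile of the flood-season (May-September) daily precipitation series. The mean of these annual values is the station's extreme-precipitation threshold, and an extreme precipitation event occurs at that station on any day whose precipitation exceeds this threshold [16]. The 95th percentile is computed with the probability method of Bonsal et al. [3]. The precipitation series is sorted in ascending order as $x_1, x_2, \ldots, x_m, \ldots, x_n$, and the probability that a value is less than or equal to $x_m$ is

$$P = \frac{m - 0.31}{n + 0.38}$$

where $m$ is the rank of $x_m$ and $n$ is the length of the precipitation series (including days without precipitation). The 95th percentile is the value of $x_m$ corresponding to $P = 95\%$. With the thresholds fixed, we count the annual flood-season frequency of extreme precipitation events at each station for 1961-2010. Principal component analysis (PCA) and rotated principal component analysis (RPCA) [33] identify the overall spatial anomaly patterns and the locally sensitive anomaly regions. The moving t-test, maximum entropy spectrum and power spectrum methods [33] are then used to analyze how extreme precipitation events vary within each anomaly region.

The extreme-precipitation thresholds differ clearly between the eastern and western parts of the reservoir area (Fig. 1a). Thresholds are relatively high in the east, mostly above 32.5 mm, with a maximum of 40.7 mm at Hefeng (Hubei). They are relatively low in the west, all below 30 mm, with a minimum of only 26.6 mm at Fengdu (Chongqing). Every station threshold is below the 50 mm/d rainstorm criterion. The mean annual frequency of extreme precipitation events over the past 50 years increases from northwest to east, so events are generally more frequent in the east than in the west (Fig. 1b). In the northwest the frequency is below 7.0 d/a, with a minimum of 6.8 d/a at Liangping (Chongqing). In the east it is above 7.2 d/a, with a maximum of 8.0 d/a at Zigui (Hubei).

PCA and RPCA were applied to the flood-season extreme-precipitation frequencies of the 35 stations for 1961-2010. The resulting loading vectors (LV) and rotated loading vectors (RLV) capture the spatial anomaly patterns of extreme precipitation events well. After rotation, the variance contributions are spread more evenly across components (Table 1). Only the first component loses variance. All the other components gain variance, and the order of some components changes. Rotation has this effect because each rotated component emphasizes the spatial correlation structure. Its variance is concentrated in a smaller area and minimized elsewhere, whereas unrotated PCA concentrates the variance of the whole domain in the first few components. The first three loading vector fields are presented below to reveal the overall spatial anomaly structure of flood-season extreme precipitation. Their principal components together explain 53.40% of the total variance.

The first loading vector field (Fig. 2a) is positive over the whole reservoir area, and its principal component explains 35.48% of the total variance. High loadings lie in the southeast, peaking at 0.76 at Xuan'en (Hubei). Low loadings lie in the southwest, with a minimum of only 0.35 at Nanchuan (Chongqing). Despite the complex terrain, flood-season extreme precipitation therefore varies coherently across the reservoir area, and the leading spatial mode is area-wide coherence. The second loading vector field (Fig. 2b) shows opposite-phase variation between the eastern and western parts of the area. Loadings are positive in the east, east of 108°30'E, centered at Changyang (Hubei, 0.48). They are negative in the west, centered at Banan (Chongqing, -0.65). When extreme precipitation events are more (less) frequent in the east, they therefore tend to be less (more) frequent in the west. The third loading vector field (Fig. 2c) shows a north-south contrast. Loadings are positive in the south, centered at Qianjiang (Chongqing, 0.51), and negative in the north, centered at Yunyang (Chongqing, -0.43). More (fewer) events in the south thus accompany fewer (more) events in the north.

This overall anomaly structure shows that extreme precipitation in the reservoir area varies in a complex way, with clear east-west and north-south differences. To examine local features further, the first five principal components (cumulative variance contribution 63.21%) and their loading vectors were rotated. Five main anomaly regions were defined so that the high-loading areas (|RLV| > 0.5) together cover essentially the whole area. The northeastern region mainly covers northwestern Hubei, and the RLV1 center is at Yichang (Hubei, 0.77) (Fig. 3a). The southwestern region mainly covers southwestern Chongqing, and the RLV2 center is at Banan (Chongqing, -0.81) (Fig. 3b). The southeastern region mainly covers southeastern Chongqing and southwestern Hubei, and the RLV3 center is at Laifeng (Hubei, 0.83) (Fig. 3c). The northern region mainly covers north-central and northeastern Chongqing, and the RLV4 center is at Beibei (Chongqing, -0.66) (Fig. 3d). The central region mainly covers south-central Chongqing, and the RLV5 center is at Wulong (Chongqing, 0.74) (Fig. 3e).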
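The PCA and RPCA steps described above can be sketched as follows. This is a hedged illustration, not the authors' code, and `pca_loadings` and `varimax` are illustrative names. It assumes the input is a (years x stations) array of annual flood-season event counts. PCA is computed on the correlation matrix, so each loading is the correlation between a station series and a principal component, which matches how the text interprets RLV values. The rotation uses the common raw varimax criterion without Kaiser normalization. The paper does not state which rotation criterion or normalization it used.

```python
import numpy as np

def pca_loadings(X):
    """PCA of a (years x stations) matrix on its correlation matrix.

    Returns loadings (stations x components), scaled so each entry is the
    correlation between a station series and a component, plus each
    component's share of the total variance (largest first).
    """
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize each station
    C = Z.T @ Z / Z.shape[0]                   # correlation matrix
    eigval, eigvec = np.linalg.eigh(C)         # eigh returns ascending order
    order = np.argsort(eigval)[::-1]
    eigval, eigvec = eigval[order], eigvec[:, order]
    loadings = eigvec * np.sqrt(np.clip(eigval, 0.0, None))
    return loadings, eigval / eigval.sum()

def varimax(L, max_iter=100, tol=1e-8):
    """Orthogonal (raw) varimax rotation of a (stations x k) loading matrix."""
    p, k = L.shape
    R = np.eye(k)
    crit = 0.0
    for _ in range(max_iter):
        LR = L @ R
        # Gradient of the varimax criterion, mapped to the nearest rotation via SVD.
        G = L.T @ (LR ** 3 - LR @ np.diag((LR ** 2).sum(axis=0)) / p)
        u, s, vt = np.linalg.svd(G)
        R = u @ vt
        new_crit = s.sum()
        if crit > 0 and new_crit < crit * (1 + tol):
            break                              # criterion has stopped improving
        crit = new_crit
    return L @ R
```

Rotating the first five columns, as in the text, would be `rlv = varimax(loadings[:, :5])`. Stations with |RLV| > 0.5 can then be grouped by the column holding their largest absolute loading. The sign of each loading column is arbitrary, so a region may appear with either positive or negative loadings.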
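The percentile threshold defined in the data-and-methods paragraph above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' code. It assumes each year's flood-season record is a list of daily totals in mm, with dry days stored as 0. The 95th percentile is obtained by linear interpolation between ranks on the plotting position $P = (m - 0.31)/(n + 0.38)$. The interpolation rule and the function names (`bonsal_percentile`, `extreme_threshold`, `count_extreme_days`) are illustrative choices.

```python
import numpy as np

def bonsal_percentile(values, p=0.95):
    """p-th percentile using the plotting position P_m = (m - 0.31) / (n + 0.38).

    Dry days (0 mm) should be included, since n counts all days.
    Linear interpolation between ranks is an assumption of this sketch.
    """
    x = np.sort(np.asarray(values, dtype=float))   # ascending: x_1 <= ... <= x_n
    n = x.size
    m = np.arange(1, n + 1)
    prob = (m - 0.31) / (n + 0.38)                  # P for each rank m
    # np.interp clamps p outside [P_1, P_n] to the end values.
    return float(np.interp(p, prob, x))

def extreme_threshold(daily_by_year, p=0.95):
    """Station threshold: mean over years of each year's flood-season percentile."""
    return float(np.mean([bonsal_percentile(year, p) for year in daily_by_year]))

def count_extreme_days(daily_by_year, threshold):
    """Annual number of days whose precipitation exceeds the threshold."""
    return [int(np.sum(np.asarray(year, dtype=float) > threshold)) for year in daily_by_year]
```

A May-September season has 153 days. There, $P = 0.95$ falls between ranks 146 and 147 in ascending order, that is, between the eighth- and seventh-largest daily totals. Roughly seven days per season therefore exceed a given year's own percentile, which is in line with the mean frequencies of about 7-8 d/a reported for the reservoir area.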
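The high-loading areas of the rotated loading vectors barely overlap, so they divide the flood-season extreme-precipitation frequency of the reservoir area into five regions (Fig. 3). These are the regions where extreme precipitation anomalies are most sensitive, and analyzing them is the key to understanding the long-term variation of extreme precipitation events in the reservoir area. The RLV value at a given point measures the correlation between the corresponding rotated principal component and the variable at that point. Points within the same high-loading region are therefore strongly correlated with one another, whereas points in different regions are only weakly correlated. The station with the largest |RLV| in each anomaly region is therefore taken as its representative station for analyzing the temporal variation of that anomaly type.

Fig. 4 shows the annual flood-season extreme-precipitation frequency at each representative station. At Yichang (northeastern region) the frequency fluctuates strongly from year to year. Events were relatively frequent in the early 1970s, from the late 1970s to the early 1980s, and from the mid-1990s to 2010. They were relatively infrequent in the remaining periods, and the frequency has increased since the 1990s. The Yichang maximum over the past 50 years was 14 d in 1973, and the minima were 2 d in 1966, 1977, 1981, 1991 and 2001. At Banan (southwestern region) events were relatively frequent in the early 1970s, mid-1980s and mid-1990s and relatively infrequent otherwise. The frequency has fallen markedly since the late 1990s, with a maximum of 16 d in 1998 and a minimum of 2 d in 2006. At Laifeng (southeastern region) events were relatively frequent in the early 1960s, from the late 1960s to the mid-1980s, and in the late 1990s, and relatively infrequent otherwise. The frequency has decreased since the late 1990s, with a maximum of 20 d in 1983 and minima of 2 d in 1961 and 1985. At Beibei (northern region) events were relatively frequent in the mid-1960s, from the 1980s to the early 1990s, and in the mid-2000s, and relatively infrequent otherwise. Its maximum was 13 d in 1985, and its minima were 2 d in 1961 and 1975. At Wulong (central region) the frequency varies strongly on interdecadal scales. Events were relatively frequent from the mid-1960s to the mid-1980s and in the mid-2000s, and relatively infrequent in the late 1980s and early 2000s. Its maximum was 16 d in 1975, and its minima were 2 d in 1986, 1993 and 2002.

The climate tendency rate and trend coefficient were calculated to examine long-term trends at the representative stations over the past 50 years (Table 2). Yichang and Beibei show increasing trends, with tendency rates of 0.32 d/10 a and 0.23 d/10 a, respectively. Banan and Laifeng show very weak decreasing trends of -0.08 d/10 a and -0.06 d/10 a, respectively. Wulong shows a more marked decrease of -0.41 d/10 a. The interannual variations therefore differ among the anomaly regions. In the long term, Yichang (northeastern region) and Beibei (northern region) show weak increases, and Banan (southwestern region) and Laifeng (southeastern region) show very weak decreases. Wulong (central region) shows a comparatively large decrease.

Abrupt changes in the flood-season extreme-precipitation frequency at each representative station were detected with the moving t-test, using sub-series lengths n1 = n2 = 10 a (Fig. 5). No change point is detected at Yichang or Laifeng (figures not shown). At Banan, the t statistic is positive in 1974 and reaches the 0.05 significance level. Events were therefore more frequent before 1974 and less frequent afterwards, an abrupt shift from more to fewer after 1974. At Beibei, the t statistic is negative in 1981 and positive in 1993, and both reach the 0.05 significance level. Events shifted from fewer to more after 1981 and from more to fewer after 1993. At Wulong, the positive t statistic in 1984 far exceeds the 0.05 significance threshold, indicating an abrupt shift from more to fewer after 1984.

The maximum entropy spectrum resolves periodic signals in a time series at high resolution and is especially suitable for short series. Here it is combined with power spectrum analysis to identify the periods of flood-season extreme-precipitation frequency in each subregion. A period is regarded as dominant and reliable when both methods identify it. Fig. 6 shows the maximum entropy spectra and power spectral densities of the representative stations. At Yichang, the maximum entropy spectrum has a main peak at 5.0 a and a secondary peak at 2.1 a. The main power-spectrum period, 5 a, exceeds the white-noise standard spectrum at the 0.05 significance level, so Yichang has a significant 5 a period. The secondary 2.1 a period in the power spectrum does not pass the significance test. At Banan, the maximum entropy spectrum peaks at 2 a, but the power spectrum does not pass the significance test, so the periodicity is weak. At Laifeng, the maximum entropy spectrum peaks at 3.8 a, but this peak is also not significant.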
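The moving t-test used above for abrupt-change detection (sub-series lengths n1 = n2 = 10 a) can be sketched as below. This is an illustrative implementation of the textbook form of the test, not the authors' code, and `moving_t_test` is a hypothetical name. The split convention is an assumption: the first sub-series ends at the reference year and the second starts the year after. The statistic is $t = (\bar{x}_1 - \bar{x}_2) / \left(s\sqrt{1/n_1 + 1/n_2}\right)$, with pooled standard deviation $s = \sqrt{(n_1 s_1^2 + n_2 s_2^2)/(n_1 + n_2 - 2)}$, where $s_1^2$ and $s_2^2$ are the sub-series variances. A positive $t$ therefore means the earlier sub-series has more events, which matches the sign interpretation in the text.

```python
import numpy as np

def moving_t_test(x, n1=10, n2=10):
    """Moving t statistic at every admissible reference index.

    Returns {i: t}. Here t compares the n1 values ending at index i with the
    n2 values that follow it (split convention assumed in this sketch).
    Positive t means the earlier sub-series has the larger mean.
    """
    x = np.asarray(x, dtype=float)
    out = {}
    for i in range(n1 - 1, x.size - n2):
        a = x[i - n1 + 1 : i + 1]          # sub-series before (ends at i)
        b = x[i + 1 : i + 1 + n2]          # sub-series after
        # Pooled standard deviation from population variances (ddof=0).
        s = np.sqrt((n1 * a.var() + n2 * b.var()) / (n1 + n2 - 2))
        out[i] = (a.mean() - b.mean()) / (s * np.sqrt(1.0 / n1 + 1.0 / n2))
    return out
```

With n1 = n2 = 10 the statistic has 18 degrees of freedom, so |t| above about 2.10 marks an abrupt change at the 0.05 level (two-sided). Under this convention, a 1961-2010 record yields statistics only for reference years 1970-2000. The function divides by zero if both sub-series are constant, so such windows need separate handling.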
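At Beibei, the maximum entropy spectrum has a main peak at 5 a and a secondary peak at 2.4 a. The power spectrum shows that the 5 a period is not significant, whereas the 2.4 a period passes the 0.05 significance test. At Wulong, the maximum entropy spectrum has a main peak at 8.3 a and secondary peaks at 4.2 a and 2.4 a. In the power spectrum only the 8.3 a period passes the 0.05 significance test. The periodic oscillations of extreme-precipitation frequency have therefore differed among the anomaly regions over the past 50 years, with significant periods of 5, 2.4 and 8.3 a at Yichang, Beibei and Wulong, respectively.

Under global warming, trends in extreme precipitation events in the Three Gorges Reservoir area over the past 50 years are weaker than in the middle and lower Yangtze. Because of the area's particular location and complex terrain, however, changes in extreme heavy precipitation differ clearly between regions. Future work should therefore examine how changes in extreme precipitation in the reservoir area relate to climate change, and what mechanisms drive changes in extreme precipitation in the basin.

[References]
[1] Meehl GA, Karl T, Easterling DR et al. An introduction to trends in extreme weather and climate events: Observations, socioeconomic impacts, terrestrial ecological impacts, and model projections. Bulletin of the American Meteorological Society, 2000, 81(3): 413-416.
[2] Frich P, Alexander LV, Della-Marta P et al. Observed coherent changes in climatic extremes during the second half of the twentieth century. Climate Research, 2002, 19: 193-212.
[3] Bonsal BR, Zhang X, Vincent LA et al. Characteristics of daily and extreme temperature over Canada. Journal of Climate, 2001, 14(9): 1959-1976.
[4] Tank AMGK, Konnen GP. Trends in indices of daily temperature and precipitation extremes in Europe, 1946-99. Journal of Climate, 2003, 16(22): 3665-3680.
[5] Karl TR, Knight RW. Secular trends of precipitation amount, frequency and intensity in the United States. Bulletin of the American Meteorological Society, 1998, 79(2): 231-241.
[6] Goswami BN, Venugopal V, Sengupta D et al. Increasing trend of extreme rain events over India in a warming environment. Science, 2006, 314(5804): 1442-1445.
[7] Gao XJ, Zhao ZC, Giorgi F. Changes of extreme events in regional climate simulation over East Asia. Advances in Atmospheric Sciences, 2002, 19(5): 927-942.
[8] Han H, Gong DY. Extreme climate events over northern China during the last 50 years. Geographical Sciences, 2003, 13(4): 469-479.
[9] Zhai PM, Sun AJ, Ren FM et al. Changes of climate extremes in China. Climatic Change, 1999, 42: 203-218.
[10] Pan XH, Zhai PM. Selection and analysis of extreme climate events. Meteorological Monthly, 2002, 28(18): 28-31. (in Chinese)
[11] Chen HS, Fan SD, Zhang XH. Seasonal differences in the variation of extreme precipitation events over China in the last 50 years. Transactions of Atmospheric Sciences, 2009, 32(6): 744-751. (in Chinese)
[12] Qian WH, Lin X. Regional trends in recent precipitation indices in China. Meteorology and Atmospheric Physics, 2005, 90: 193-207.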
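[13] Qian WH, Fu JL, Yan ZW. Decrease of light rain events in summer associated with a warming environment in China during 1961-2005. Geophysical Research Letters, 2007, 34(11): L11705.
[14] Qian WH, Fu JL, Zhang WW et al. An overview of changes in mean and extreme climate in China over the last 40 years. Advances in Earth Science, 2007, 22(7): 673-683. (in Chinese)
[15] Bao M, Huang RH. Characteristics of the interdecadal variations of heavy rain over China in the last 40 years. Chinese Journal of Atmospheric Sciences, 2006, 30(6): 1057-1067. (in Chinese)
[16] Zhai PM, Pan XH. Changes in temperature and precipitation extremes in northern China over the last 50 years. Acta Geographica Sinica, 2003, 58(Suppl.): 1-10. (in Chinese)
[17] Zhai PM, Zhang XB, Wan H et al. Trends in total precipitation and frequency of daily precipitation extremes over China. Journal of Climate, 2005, 18(7): 1096-1108.
[18] Zhai PM, Wang CC, Li W. Observational studies of changes in extreme precipitation events. Advances in Climate Change Research, 2007, 3(3): 144-148. (in Chinese)
[19] Tang YB, Gan JJ, Zhao L et al. On the climatology of persistent heavy rainfall events in China. Advances in Atmospheric Sciences, 2006, 23(5): 678-692.
[20] Min S, Qian YF. Regionality and persistence of extreme precipitation events in China. Advances in Water Science, 2008, 19(6): 763-771. (in Chinese)
[21] Wang ZF, Qian YF. Frequency and intensity of extreme precipitation events in China. Advances in Water Science, 2009, 20(1): 1-8. (in Chinese)
[22] Su BD, Xiao B, Zhu D et al. Trends in frequency of precipitation extremes in the Yangtze River Basin, China: 1960-2003. Hydrological Sciences Journal, 2005, 50(3): 479-492.
[23] Zhang Q, Xu CY, Zhang ZX et al. Spatial and temporal variability of precipitation maxima during 1960-2005 in the Yangtze River basin and possible association with large-scale circulation. Journal of Hydrology, 2008, 353(3/4): 215-227.
[24] Min X, Liu J. Characteristics and causes of extreme precipitation anomalies in the Poyang Lake region. Journal of Lake Sciences, 2011, 23(3): 435-444. (in Chinese)
[25] Li B, Li LJ, Li HB et al. Variation of extreme precipitation in the Lancang River basin, 1960-2005. Progress in Geography, 2011, 30(3): 290-298. (in Chinese)
[26] Yu DX, Xia J, Zhang YY et al. Spatiotemporal variation and statistical characteristics of extreme precipitation in the Huaihe River basin over the last 50 years. Acta Geographica Sinica, 2011, 66(9): 1200-1210. (in Chinese)
[27] Cai QH, Liu M, He YK et al. Assessment report on the impacts of climate change in the Three Gorges Reservoir area of the Yangtze River. Beijing: China Meteorological Press, 2010. (in Chinese)
[28] Wang MH, Liu LH, Zhang Q. Climate characteristics of the Three Gorges area. Meteorological Monthly, 2005, 31(7): 67-71. (in Chinese)
[29] Chen XY, Zhang Q, Ye DX et al. Local climate change in the Three Gorges Reservoir area. Resources and Environment in the Yangtze Basin, 2009, 18(1): 47-51. (in Chinese)
[30] Chen XY, Zhang Q, Zou XK et al. Trends of major meteorological disasters in the Three Gorges Reservoir area in recent decades. Resources and Environment in the Yangtze Basin, 2009, 18(3): 296-300. (in Chinese)
[31] Liu XR, Yang Q, Cheng BY et al. Scenario projection of 21st-century climate change in the Three Gorges Reservoir area. Resources and Environment in the Yangtze Basin, 2010, 19(1): 42-47. (in Chinese)
[32] Zhang TY, Cheng BY, Fan L et al. Scenario projection of the number of days with moderate or heavier rain in the Three Gorges Reservoir area in the 21st century. Resources and Environment in the Yangtze Basin, 2010, 19(Z2): 80-87. (in Chinese)
[33] Wei FY. Modern climatic statistical diagnosis and prediction technology (2nd ed.). Beijing: China Meteorological Press, 2007: 57-124. (in Chinese)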